1、Docker network models

1. bridge (default)

--net=bridge: the default network mode. When Docker starts, it creates a bridge named docker0, and containers are attached to this network by default.

[root@node1 ~]# docker run --rm -it hebye/alpine:latest /bin/sh

2. none

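--net=none: the container gets its own network namespace, but Docker performs no network configuration for it (only a loopback interface exists). We have to configure networking manually.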

[root@node1 ~]# docker run --rm -it --net=none hebye/alpine:latest /bin/sh

3. host

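--net=host: the container does not get its own network namespace; it shares the host's. This means the container has no network interfaces of its own and uses the host's directly. Apart from networking, everything else is still isolated.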

[root@node1 ~]# docker run --rm -it --net=host hebye/alpine:latest /bin/sh

4. container (shared network namespace)

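--net=container:NAME|ID: the new container shares the network namespace of the specified container and therefore has the same network configuration. Apart from networking, the two containers remain isolated.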

[root@node1 ~]# docker run -it -d --name zd -p 90:80 busybox

[root@node1 ~]# docker run -it -d --name nginx-zd --net container:zd nginx

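# --net container:zd makes nginx use zd's network namespace instead of its own, so host port 90 published on zd reaches nginx on port 80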

[root@node1 ~]# curl 10.4.7.99:90

2、Understanding docker0

Question: how does Docker handle network access for containers?

1 Start a container

[root@node1 ~]# docker run -d -P --name tomcat01 tomcat

2 Check the container's internal IP address

[root@node1 ~]# docker run -d -P --name tomcat01 tomcat

78a6cc9c30c777229e781321909bb7d1bf3da1b293d9fa4fc51c7e360d3a1233

[root@node1 ~]# docker exec -it tomcat01 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

38: eth0@if39: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:07:63:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.7.99.2/24 brd 172.7.99.255 scope global eth0

valid_lft forever preferred_lft forever

[root@node1 ~]#

3 The host can ping the container's address

[root@node1 ~]# ping 172.7.99.2

PING 172.7.99.2 (172.7.99.2) 56(84) bytes of data.

64 bytes from 172.7.99.2: icmp_seq=1 ttl=64 time=0.329 ms

64 bytes from 172.7.99.2: icmp_seq=2 ttl=64 time=0.052 ms

64 bytes from 172.7.99.2: icmp_seq=3 ttl=64 time=0.057 ms

64 bytes from 172.7.99.2: icmp_seq=4 ttl=64 time=0.047 ms

4 How it works:

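Every time we start a container, Docker assigns it an IP address. As soon as Docker is installed, the host has a docker0 interface working in bridge mode, and containers are connected to it using veth pairs.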

[root@node1 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:3a:c1:e1 brd ff:ff:ff:ff:ff:ff

inet 10.4.7.99/24 brd 10.4.7.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe3a:c1e1/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:49:9e:ac:ce brd ff:ff:ff:ff:ff:ff

inet 172.7.99.1/24 brd 172.7.99.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:49ff:fe9e:acce/64 scope link

valid_lft forever preferred_lft forever

39: veth9f12e27@if38: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether fa:09:a6:97:19:f0 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::f809:a6ff:fe97:19f0/64 scope link

valid_lft forever preferred_lft forever

[root@node1 ~]#
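The pairing can be read straight from the two `ip addr` listings: inside tomcat01, `eth0@if39` has index 38 and names peer index 39, while on the host `veth9f12e27@if38` has index 39 and names peer index 38. A minimal Python sketch that matches the two ends of a veth pair this way, assuming the `INDEX: NAME@ifPEER:` header-line format printed above (the function names are illustrative, not part of any Docker tool):

```python
import re

# Matches header lines such as "38: eth0@if39: <BROADCAST,...>".
# Assumption: the "INDEX: NAME@ifPEER:" layout printed by `ip addr` above.
HEADER = re.compile(r"^(\d+): ([^:@\s]+)@if(\d+):")


def parse_links(ip_addr_output):
    """Return {ifindex: (name, peer_ifindex)} for interfaces that name a peer."""
    links = {}
    for line in ip_addr_output.splitlines():
        m = HEADER.match(line.strip())
        if m:
            links[int(m.group(1))] = (m.group(2), int(m.group(3)))
    return links


def match_veth_pairs(container_output, host_output):
    """Pair each container interface with the host interface it points at."""
    container = parse_links(container_output)
    host = parse_links(host_output)
    pairs = []
    for index, (name, peer) in container.items():
        # The peer index must exist on the host and must point back at us.
        if peer in host and host[peer][1] == index:
            pairs.append((name, host[peer][0]))
    return pairs


# Header lines copied from the two outputs above (other lines omitted).
container_ip_addr = """\
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
38: eth0@if39: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
"""
host_ip_addr = """\
3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
39: veth9f12e27@if38: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
"""

print(match_veth_pairs(container_ip_addr, host_ip_addr))  # [('eth0', 'veth9f12e27')]
```

Only the header lines matter, so the samples are trimmed copies of the outputs above. On a live host the same functions could be fed the text of `docker exec tomcat01 ip addr` and the host's `ip addr`.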

# Start one more container (tomcat02) and check again: another pair of interfaces appears

# Test whether tomcat01 and tomcat02 can ping each other

[root@node1 ~]# docker exec -it tomcat01 ping 172.7.99.3

PING 172.7.99.3 (172.7.99.3) 56(84) bytes of data.

64 bytes from 172.7.99.3: icmp_seq=1 ttl=64 time=0.277 ms

64 bytes from 172.7.99.3: icmp_seq=2 ttl=64 time=0.069 ms

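# Notice that the interfaces a container brings with it always come in pairs.

# A veth pair is a pair of connected virtual network interfaces; they are always created together.

# Because of this property, a veth pair acts as a link between virtual network devices, here between the container's network namespace and the docker0 bridge.

# Containers can reach each other because they are all attached to the same bridge, docker0, which also serves as their gateway (172.7.99.1).

# Containers started without an explicit network are attached to docker0, and Docker assigns each one an available IP address from docker0's subnet.

# All of these network interfaces are virtual, and forwarding between them is efficient. When a container is removed, its veth pair is removed along with it.

3、Using docker --link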

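Consider a scenario: we write a microservice whose configuration contains database url=ip. After the project restarts, the database IP has changed. We want to handle this by reaching containers by name instead of by IP address.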

[root@node1 ~]# docker exec -it tomcat02 ping tomcat01

ping: tomcat01: Temporary failure in name resolution

[root@node1 ~]#

# --link solves this name-resolution problem

[root@node1 ~]# docker run -d -P --name tomcat03 --link tomcat02 tomcat

[root@node1 ~]# docker exec -it tomcat03 ping tomcat02

PING tomcat02 (172.7.99.2) 56(84) bytes of data.

64 bytes from tomcat02 (172.7.99.2): icmp_seq=1 ttl=64 time=0.063 ms

64 bytes from tomcat02 (172.7.99.2): icmp_seq=2 ttl=64 time=0.057 ms

# tomcat03 can ping tomcat02 by name

# The reverse does not work: tomcat02 cannot ping tomcat03 by name

[root@node1 ~]# docker exec -it tomcat02 ping tomcat03

ping: tomcat03: Temporary failure in name resolution

[root@node1 ~]#

# How it works:

[root@node1 ~]# docker exec -it tomcat03 cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.7.99.2 tomcat02 5c5385bef5df

172.7.99.4 c212441b5be1

[root@node1 ~]#
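Because --link only writes static entries into the hosts file of the container being started, name lookup works in one direction only. A minimal Python sketch of hosts-file lookup, using tomcat03's file from the output above as input (`parse_hosts` and `resolve_from_hosts` are illustrative helpers, not part of Docker):

```python
def parse_hosts(text):
    """Map every hostname/alias in an /etc/hosts-style file to its address."""
    table = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding spaces
        if not line:
            continue  # skip blank lines
        address, *names = line.split()
        for name in names:
            table.setdefault(name, address)  # keep the first address seen for a name
    return table


def resolve_from_hosts(text, name):
    """Return the address for `name`, or None if the file has no entry for it."""
    return parse_hosts(text).get(name)


# /etc/hosts of tomcat03, copied from the output above.
tomcat03_hosts = """\
127.0.0.1 localhost
::1 localhost ip6-localhost ip6-loopback
fe00::0 ip6-localnet
ff00::0 ip6-mcastprefix
ff02::1 ip6-allnodes
ff02::2 ip6-allrouters
172.7.99.2 tomcat02 5c5385bef5df
172.7.99.4 c212441b5be1
"""

print(resolve_from_hosts(tomcat03_hosts, "tomcat02"))  # 172.7.99.2
print(resolve_from_hosts(tomcat03_hosts, "tomcat03"))  # None
```

tomcat03's own entry uses its container ID (c212441b5be1) rather than the name tomcat03, and nothing writes a tomcat03 entry into tomcat02's hosts file, which matches the failed reverse ping.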

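# --link simply adds an entry for the linked container (172.7.99.2 tomcat02) to the hosts file of the new container, so the name resolves in that one direction only. --link is a legacy Docker feature; user-defined networks are the recommended replacement.

4、Custom networks

A custom (user-defined) network works on the same principle as the default bridge, but it also provides built-in DNS discovery, so containers can communicate using container names or hostnames.

1. List networks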

[root@node1 ~]# docker network ls

NETWORK ID     NAME      DRIVER    SCOPE
eead7eb4f494   bridge    bridge    local
53a5ac2f63f1   host      host      local
b53e58e785e0   none      null      local

[root@node1 ~]#

# Network modes:

bridge: bridged via docker0 (default)

none: no networking configured

host: shares the host's network

2. Create a custom network

[root@node1 ~]# docker network create --driver bridge --subnet 192.168.0.0/16 --gateway 192.168.0.1 mynet

[root@node1 ~]# docker network ls

NETWORK ID     NAME      DRIVER    SCOPE
eead7eb4f494   bridge    bridge    local
53a5ac2f63f1   host      host      local
4feaa6474de2   mynet     bridge    local
b53e58e785e0   none      null      local

# Inspect the custom network

[root@node1 ~]# docker network inspect mynet

[

{

"Name": "mynet",

"Id": "4feaa6474de2b9ba6d0960b36b8694ef99337ff969d7e8385e8c9f1e0d42324b",

"Created": "2021-03-18T15:43:24.5652325+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "192.168.0.0/16",

"Gateway": "192.168.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {},

"Options": {},

"Labels": {}

}

]

[root@node1 ~]#
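The IPAM config above gives mynet the subnet 192.168.0.0/16 with gateway 192.168.0.1. A rough Python sketch of what that leaves for containers, assuming addresses are handed out in order after the gateway, as the outputs in this document suggest (tomcat-net-01, tomcat-net-02 and tomcat01 later receive .2, .3 and .4 on mynet). Docker's real IPAM allocator tracks more state than this, and `allocate` is a hypothetical helper:

```python
import ipaddress
from itertools import islice


def allocate(subnet, gateway, count):
    """Return the first `count` usable host addresses of `subnet`, skipping the gateway.

    Simplified model: it ignores released addresses, --ip-range,
    --aux-address and the other state Docker's IPAM keeps.
    """
    net = ipaddress.ip_network(subnet)
    gw = ipaddress.ip_address(gateway)
    if gw not in net:
        raise ValueError(f"gateway {gw} is outside {net}")
    # hosts() already excludes the network and broadcast addresses.
    free = (host for host in net.hosts() if host != gw)
    return [str(host) for host in islice(free, count)]


mynet = ipaddress.ip_network("192.168.0.0/16")
print(mynet.num_addresses - 2)  # 65534 usable host addresses, one of them the gateway
print(allocate("192.168.0.0/16", "192.168.0.1", 3))  # ['192.168.0.2', '192.168.0.3', '192.168.0.4']
print(allocate("172.7.99.0/24", "172.7.99.1", 3))    # ['172.7.99.2', '172.7.99.3', '172.7.99.4']
```

The same model applied to docker0 (172.7.99.0/24, gateway 172.7.99.1) reproduces the .2, .3, .4 addresses seen for the containers on the default bridge.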

# Start containers on the custom network

[root@node1 ~]# docker run -d -P --name tomcat-net-01 --network=mynet tomcat

[root@node1 ~]# docker run -d -P --name tomcat-net-02 --network=mynet tomcat

# Inspect the network again

[root@node1 ~]# docker network inspect mynet

[

{

"Name": "mynet",

"Id": "4feaa6474de2b9ba6d0960b36b8694ef99337ff969d7e8385e8c9f1e0d42324b",

"Created": "2021-03-18T15:43:24.5652325+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "192.168.0.0/16",

"Gateway": "192.168.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"a6ec72902a4e57b10a7cff353e1210298decc38cd9a582153bf0192d961f2687": {

"Name": "tomcat-net-01",

"EndpointID": "8b25c1fa53a75c05311d2c8ba452072356de03dbca1b9e26b3ef7be36ef600b8",

"MacAddress": "02:42:c0:a8:00:02",

"IPv4Address": "192.168.0.2/16",

"IPv6Address": ""

},

"eda60ed12e5d726a938cc3b35bdedbaee99accf6d125c9f378d114a6420f199e": {

"Name": "tomcat-net-02",

"EndpointID": "ef5d512171369a04df69c236d139e8aeef7117ade1153c980f4b3a35966f7272",

"MacAddress": "02:42:c0:a8:00:03",

"IPv4Address": "192.168.0.3/16",

"IPv6Address": ""

}

},

"Options": {},

"Labels": {}

}

]

[root@node1 ~]#
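The `Containers` section of `docker network inspect` is plain JSON, so it is easy to turn into a name-to-IP table. A minimal Python sketch using a trimmed copy of the output above (container IDs shortened, unused fields dropped); `container_ips` is an illustrative helper:

```python
import json


def container_ips(inspect_json):
    """Return {container_name: ipv4_address} from `docker network inspect` output."""
    networks = json.loads(inspect_json)  # the command prints a JSON array
    result = {}
    for network in networks:
        for endpoint in network.get("Containers", {}).values():
            # "IPv4Address" is in CIDR form, e.g. "192.168.0.2/16"; keep the address part.
            result[endpoint["Name"]] = endpoint["IPv4Address"].split("/")[0]
    return result


sample = """[{"Name": "mynet", "Containers": {
  "a6ec72902a4e": {"Name": "tomcat-net-01", "IPv4Address": "192.168.0.2/16"},
  "eda60ed12e5d": {"Name": "tomcat-net-02", "IPv4Address": "192.168.0.3/16"}}}]"""

print(container_ips(sample))  # {'tomcat-net-01': '192.168.0.2', 'tomcat-net-02': '192.168.0.3'}
```

On a live host the function could instead be given `subprocess.run(["docker", "network", "inspect", "mynet"], capture_output=True, text=True, check=True).stdout`.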

# Test ping connectivity again

[root@node1 ~]# docker exec -it tomcat-net-01 ping 192.168.0.3

PING 192.168.0.3 (192.168.0.3) 56(84) bytes of data.

64 bytes from 192.168.0.3: icmp_seq=1 ttl=64 time=0.283 ms

64 bytes from 192.168.0.3: icmp_seq=2 ttl=64 time=0.223 ms

[root@node1 ~]# docker exec -it tomcat-net-01 ping tomcat-net-02

PING tomcat-net-02 (192.168.0.3) 56(84) bytes of data.

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=1 ttl=64 time=0.110 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=2 ttl=64 time=0.075 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=3 ttl=64 time=0.120 ms

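# Containers can now ping each other by name without --link

# On a custom network Docker maintains the name-to-IP mapping for us, so this is the recommended way to use networks day to day

3. Connecting networks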

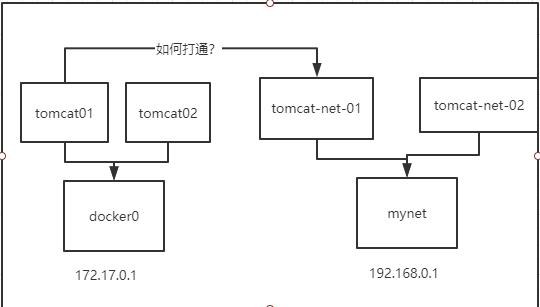

At this point docker0 and our custom network mynet cannot reach each other.

# Test:

# Start two containers on docker0

[root@node1 ~]# docker run -d -P --name tomcat01 tomcat

[root@node1 ~]# docker run -d -P --name tomcat02 tomcat

# Test whether tomcat01 (on docker0) can ping tomcat-net-01 (on mynet)

[root@node1 ~]# docker exec -it tomcat01 ping tomcat-net-01

ping: tomcat-net-01: Name or service not known

[root@node1 ~]#

# Connect a container to another network

[root@node1 ~]# docker network connect --help

Usage: docker network connect [OPTIONS] NETWORK CONTAINER

# Test: connect tomcat01 to mynet

[root@node1 ~]# docker network connect mynet tomcat01

# After connecting, tomcat01 is also attached to the mynet network

# One container, two IP addresses

[root@node1 ~]# docker network inspect mynet

[

{

"Name": "mynet",

"Id": "4feaa6474de2b9ba6d0960b36b8694ef99337ff969d7e8385e8c9f1e0d42324b",

"Created": "2021-03-18T15:43:24.5652325+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "192.168.0.0/16",

"Gateway": "192.168.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"498022614de718301d427717e25aa7df532afbb98f3089c89458908afbbca5db": {

"Name": "tomcat01",

"EndpointID": "bbcfe9fd3bdb7a86ac61561a71aeea4e66100a850ffcc4b7bffab889b733e975",

"MacAddress": "02:42:c0:a8:00:04",

"IPv4Address": "192.168.0.4/16",

"IPv6Address": ""

},

"a6ec72902a4e57b10a7cff353e1210298decc38cd9a582153bf0192d961f2687": {

"Name": "tomcat-net-01",

"EndpointID": "8b25c1fa53a75c05311d2c8ba452072356de03dbca1b9e26b3ef7be36ef600b8",

"MacAddress": "02:42:c0:a8:00:02",

"IPv4Address": "192.168.0.2/16",

"IPv6Address": ""

},

"eda60ed12e5d726a938cc3b35bdedbaee99accf6d125c9f378d114a6420f199e": {

"Name": "tomcat-net-02",

"EndpointID": "ef5d512171369a04df69c236d139e8aeef7117ade1153c980f4b3a35966f7272",

"MacAddress": "02:42:c0:a8:00:03",

"IPv4Address": "192.168.0.3/16",

"IPv6Address": ""

}

},

"Options": {},

"Labels": {}

}

]

[root@node1 ~]#

[root@node1 ~]# docker exec -it tomcat01 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

15: eth0@if16: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:07:63:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.7.99.2/24 brd 172.7.99.255 scope global eth0

valid_lft forever preferred_lft forever

19: eth1@if20: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:c0:a8:00:04 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.0.4/16 brd 192.168.255.255 scope global eth1

valid_lft forever preferred_lft forever

[root@node1 ~]#
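After `docker network connect`, tomcat01 has one interface per network (eth0: 172.7.99.2/24, eth1: 192.168.0.4/16). A simplified Python sketch of how a destination maps to an interface: pick the directly connected subnet that contains the address, preferring the longest prefix. The container's real routing table is not shown above and also contains a default route, which this sketch deliberately leaves out; `outgoing_interface` is a hypothetical helper:

```python
import ipaddress

# Interface addresses of tomcat01 as reported by `ip addr` above.
INTERFACES = {
    "eth0": ipaddress.ip_interface("172.7.99.2/24"),
    "eth1": ipaddress.ip_interface("192.168.0.4/16"),
}


def outgoing_interface(destination, interfaces=INTERFACES):
    """Return the interface whose connected subnet contains `destination`.

    Longest-prefix match over directly connected subnets only; returns None
    when no connected subnet matches (a real kernel would fall back to the
    default route, which is not modelled here).
    """
    dest = ipaddress.ip_address(destination)
    candidates = [
        (iface.network.prefixlen, name)
        for name, iface in interfaces.items()
        if dest in iface.network
    ]
    return max(candidates)[1] if candidates else None


for target in ("192.168.0.2", "192.168.0.3", "172.7.99.3", "10.4.7.99"):
    print(target, "->", outgoing_interface(target))
```

The mynet addresses map to eth1 and the docker0 neighbours to eth0, consistent with the pings that follow. tomcat02 was never connected to mynet, so it has neither an interface on 192.168.0.0/16 nor access to mynet's name resolution.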

[root@node1 ~]# docker exec -it tomcat01 ping tomcat-net-01

PING tomcat-net-01 (192.168.0.2) 56(84) bytes of data.

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=1 ttl=64 time=0.200 ms

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=2 ttl=64 time=0.064 ms

[root@node1 ~]# docker exec -it tomcat01 ping tomcat-net-02

PING tomcat-net-02 (192.168.0.3) 56(84) bytes of data.

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=1 ttl=64 time=0.190 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=2 ttl=64 time=0.064 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=3 ttl=64 time=0.060 ms

# tomcat02 has not been connected to mynet, so it still cannot reach it

[root@node1 ~]# docker exec -it tomcat02 ping tomcat-net-01

ping: tomcat-net-01: Name or service not known

[root@node1 ~]#

Document last updated: 2021-03-18 16:31   Author: xtyang