- k8s应用更新策略:灰度发布和蓝绿发布

- 1.生产环境如何实现蓝驱部署?

- 1.1 什么是蓝绿部署?

- 1.2 蓝绿部署的优势和缺点

- 2.通过k8s实现线上业务的蓝绿部署

- 2.1 创建蓝色部署环境(新上线的环境,要替代绿色环境)

- 2.2 创建绿色部署环境(原来的环境)

- 2.3 创建前端service

- 2.4 升级程序

- 3.通过k8s实现滚动更新-滚动更新流程和策略

- 3.1 滚动更新简介

- 3.2 在k8s中实现滚动更新

- 3.2 自定义滚动更新策略

- 4.通过k8s完成线上业务的金丝雀发布

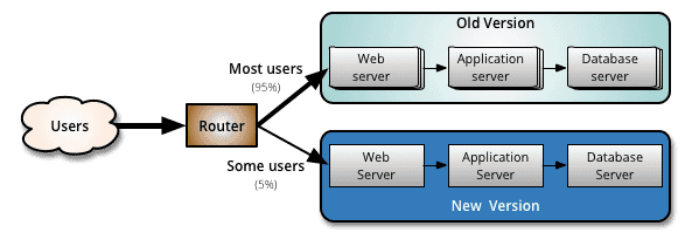

- 4.1 金丝雀发布简介

- 4.2 在k8s中实现金丝雀发布

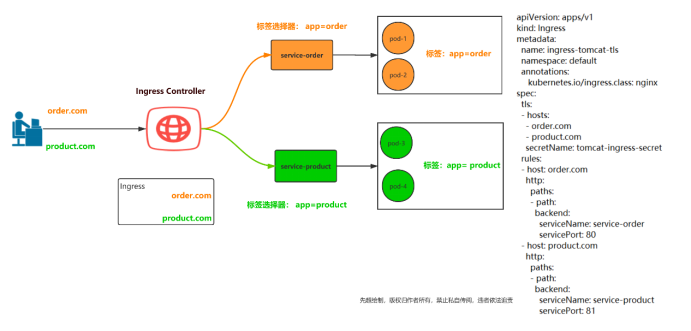

- 5.七层代理Ingress controller

- 5.1 Ingress介绍

- 5.2 Ingress Controller介绍

- 5.3 Ingress和Ingress Controller总结

- 5.4 使用Ingress Controller代理k8s内部应用的流程

- 5.5 安装Nginx Ingress Controller

- 6.通过Ingress-nginx实现灰度发布

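Kubernetes application update strategies: gray (canary) release and blue-green release

1. How to implement blue-green deployment in production

1.1 What is blue-green deployment?

A blue-green deployment runs two systems: the one currently serving users, labeled "green", and the one about to be released, labeled "blue". Both are complete, fully running systems; they differ only in their version and in whether they receive external traffic.

When a new version is ready to replace the old one in production, a brand-new system running the new code is built alongside the live system. Two systems are now running: the old system that serves users is green, and the newly deployed system is blue.

If the blue system serves no users, what is it for?

- Pre-release testing. Any problem found during testing can be fixed directly on the blue system without disturbing the system users are on. (Note: this is only fully guaranteed when the two systems are not coupled to each other.)

- After repeated testing, fixing, and verification confirm that the blue system meets production standards, users are switched over to it directly.

For a while after the switch, both systems still coexist, but users are now on the blue system. Use this period to watch how the blue (new) system behaves; if anything goes wrong, switch straight back to green.

Once you are confident that blue is serving correctly and green is no longer needed, blue becomes the production system, that is, the new green. The old green system can then be destroyed and its resources reused for the next blue deployment.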

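1.2 Advantages and disadvantages of blue-green deployment

- Advantages:

1. No downtime during the update, and lower risk.

2. Rollback is easy and fast: only the routing or DNS needs to be switched back.

- Disadvantages:

1. Higher cost, because two full environments must be deployed. And because traffic is switched all at once, any problem in the new version, including in its underlying services, immediately affects every user.

2. Running two clusters is expensive.

3. If the blue and green environments run on machines (Docker hosts/VMs) that are not isolated from each other, an operation on one can destroy the other.

4. If the load balancer, reverse proxy, routing, or DNS is handled incorrectly, traffic may not actually be switched over.

2. Blue-green deployment of an online service with Kubernetes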

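Setup:

Green environment image: hebye/myapp:v2

Blue environment image: hebye/myapp:v1

Kubernetes has no built-in blue-green deployment. The common approach is to create a new Deployment and then update the application's Service so that it points at the Pods of the new Deployment.

2.1 Create the blue environment (the new environment that will replace green)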

```shell
# Create the namespace
[root@k8s-master1 ~]# kubectl create ns blue-green
namespace/blue-green created

# Create the Deployment
[root@k8s-master1 blue-green]# cat lan.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-v1
  namespace: blue-green
spec:
  replicas: 3
  selector:
    matchLabels:
      app: myapp
      version: v1
  template:
    metadata:
      labels:
        app: myapp
        version: v1
    spec:
      containers:
      - name: myapp
        image: hebye/myapp:v1
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
```

```shell
[root@k8s-master1 blue-green]# kubectl apply -f lan.yaml
deployment.apps/myapp-v1 created
[root@k8s-master1 blue-green]# kubectl get pods -n blue-green
NAME                       READY   STATUS    RESTARTS   AGE
myapp-v1-9d8c596bd-44mrd   1/1     Running   0          53s
myapp-v1-9d8c596bd-87kmq   1/1     Running   0          53s
```

2.2 Create the green environment (the existing environment)

```shell
[root@k8s-master1 blue-green]# cat lv.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-v2
  namespace: blue-green
spec:
  replicas: 3
  selector:
    matchLabels:
      app: myapp
      version: v2
  template:
    metadata:
      labels:
        app: myapp
        version: v2
    spec:
      containers:
      - name: myapp
        image: hebye/myapp:v2
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
```

```shell
[root@k8s-master1 blue-green]# kubectl apply -f lv.yaml
deployment.apps/myapp-v2 created
[root@k8s-master1 blue-green]# kubectl get pods -n blue-green
NAME                        READY   STATUS    RESTARTS   AGE
myapp-v1-9d8c596bd-44mrd    1/1     Running   0          24m
myapp-v1-9d8c596bd-87kmq    1/1     Running   0          24m
myapp-v1-9d8c596bd-lmmhx    1/1     Running   0          24m
myapp-v2-58cf554868-84tkk   1/1     Running   0          33s
myapp-v2-58cf554868-9h2rc   1/1     Running   0          33s
myapp-v2-58cf554868-mm785   1/1     Running   0          33s
```

2.3 Create the front-end Service

Point the Service at the green environment:

```shell
[root@k8s-master1 blue-green]# cat service_lanlv.yaml
```

```yaml
apiVersion: v1
kind: Service
metadata:
  name: myapp-svc
  namespace: blue-green
spec:
  type: NodePort
  ports:
  - port: 80
    nodePort: 30062
    name: http
  selector:
    app: myapp
    version: v2
```

```shell
[root@k8s-master1 blue-green]# kubectl apply -f service_lanlv.yaml
service/myapp-svc unchanged
[root@k8s-master1 blue-green]# kubectl get svc -n blue-green
NAME        TYPE       CLUSTER-IP   EXTERNAL-IP   PORT(S)        AGE
myapp-svc   NodePort   10.0.0.179   <none>        80:30062/TCP   20m
```

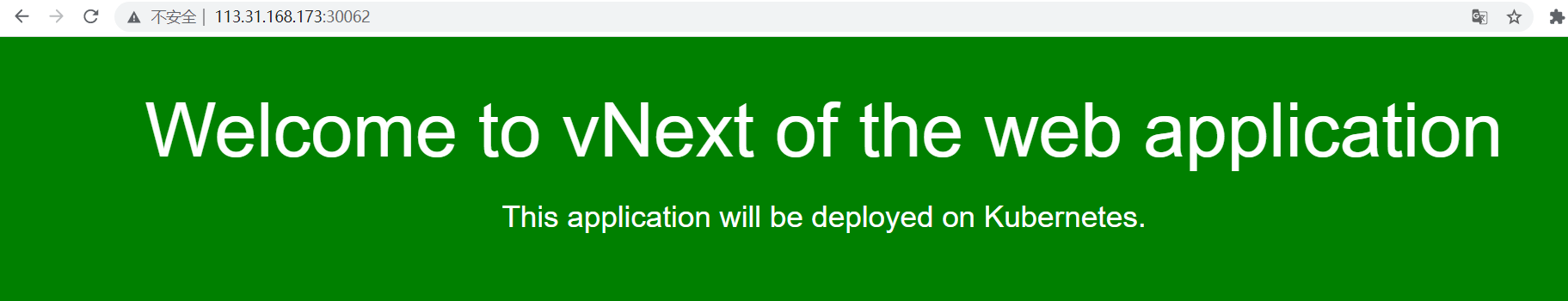

[root@k8s-master1 blue-green]# 在浏览器访问http://node节点ip:30062 显示如下:

2.4 Upgrade the application

Change the Service selector so that it matches the blue environment:

```shell
[root@k8s-master1 blue-green]# cat service_lanlv.yaml
```

```yaml
apiVersion: v1
kind: Service
metadata:
  name: myapp-svc
  namespace: blue-green
spec:
  type: NodePort
  ports:
  - port: 80
    nodePort: 30062
    name: http
  selector:
    app: myapp
    version: v1
```

```shell
[root@k8s-master1 blue-green]# kubectl apply -f service_lanlv.yaml
service/myapp-svc configured
```

Open http://<node-IP>:30062 in the browser again to check that the responses now come from the blue environment.
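Instead of editing service_lanlv.yaml by hand, the cut-over (and the switch back, if blue misbehaves) can be done by patching only the Service selector. This is a minimal sketch: `selector_patch` and `switch_to` are hypothetical helper names, and it assumes the `myapp-svc` Service in the `blue-green` namespace from this example.

```shell
#!/usr/bin/env bash
# Sketch: switch a blue-green Service between versions by patching its selector.
# Assumes the Service myapp-svc in namespace blue-green (from the example above).

# Print the patch that points the Service at one version label.
selector_patch() {
  local version=$1
  printf '{"spec":{"selector":{"app":"myapp","version":"%s"}}}\n' "$version"
}

# Apply the patch (needs cluster access). Rolling back is the same call with the old label.
switch_to() {
  local version=$1
  kubectl patch service myapp-svc -n blue-green -p "$(selector_patch "$version")"
}

# switch_to v1   # send traffic to the blue environment (hebye/myapp:v1)
# switch_to v2   # switch back to the green environment (hebye/myapp:v2)
```

Because only the selector changes, both Deployments keep running throughout, which is what makes the instant switch-back possible.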

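3. Rolling updates with Kubernetes: process and strategy

3.1 Introduction to rolling updates

A rolling update is a highly automated release method with a smooth user experience, and it is the mainstream release method in mature engineering organizations. A rolling release usually consists of several batches whose sizes are configurable (for example through a release template), e.g. 1 instance in the first batch, 10% in the second, 50% in the third, and 100% in the fourth. An observation interval is left between batches, and the next batch is released only after manual checks or monitoring feedback confirm there is no problem, so a rolling release is relatively slow overall.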
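The example batch schedule above (1 instance, then 10%, 50%, 100%) can be turned into concrete numbers for a given fleet size. A minimal sketch, assuming the percentages are cumulative shares of the whole fleet rounded up; the batch sizes are the example values from the text, not something Kubernetes itself uses, and `batch_plan` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Sketch: cumulative number of upgraded instances after each batch of the
# example schedule "1 instance, then 10%, 50%, 100%".
# Assumption: percentages are of the whole fleet and rounded up.

ceil_percent() {                  # ceil(total * pct / 100) with integer arithmetic
  local total=$1 pct=$2
  echo $(( (total * pct + 99) / 100 ))
}

batch_plan() {
  local total=$1
  local target step
  local -a plan=()
  for step in first 10 50 100; do
    if [[ $step == first ]]; then
      target=$(( total < 1 ? total : 1 ))   # first batch: a single instance
    else
      target=$(ceil_percent "$total" "$step")
    fi
    plan+=("$target")
  done
  echo "${plan[*]}"
}

# batch_plan 20   # prints: 1 2 10 20
```

For a 5-instance fleet the plan is `1 1 3 5`: the 10% batch rounds up to the same single instance, so on a fleet that small it adds nothing.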

3.2 Rolling updates in Kubernetes

- First, look at the fields that make up a Deployment:

```shell
[root@master1 ~]# kubectl explain deployment
[root@master1 ~]# kubectl explain deployment.spec
KIND:     Deployment
VERSION:  apps/v1

RESOURCE: spec <Object>

DESCRIPTION:
     Specification of the desired behavior of the Deployment.

     DeploymentSpec is the specification of the desired behavior of the
     Deployment.

FIELDS:
   minReadySeconds      <integer>
     Minimum number of seconds for which a newly created pod should be ready
     without any of its container crashing, for it to be considered available.
     Defaults to 0 (pod will be considered available as soon as it is ready)

   paused       <boolean>
     Indicates that the deployment is paused.
   # paused: while a Deployment is paused, changes to its Pod template are not
   # rolled out; the rollout continues once it is resumed

   progressDeadlineSeconds      <integer>
     The maximum time in seconds for a deployment to make progress before it is
     considered to be failed. The deployment controller will continue to process
     failed deployments and a condition with a ProgressDeadlineExceeded reason
     will be surfaced in the deployment status. Note that progress will not be
     estimated during the time a deployment is paused. Defaults to 600s.

   replicas     <integer>
     Number of desired pods. This is a pointer to distinguish between explicit
     zero and not specified. Defaults to 1.

   revisionHistoryLimit <integer>
   # number of old revisions kept for rollback, 10 by default
     The number of old ReplicaSets to retain to allow rollback. This is a
     pointer to distinguish between explicit zero and not specified. Defaults to
     10.

   selector     <Object> -required-
     Label selector for pods. Existing ReplicaSets whose pods are selected by
     this will be the ones affected by this deployment. It must match the pod
     template's labels.

   strategy     <Object>
   # the update strategy, i.e. how old Pods are replaced by new ones
     The deployment strategy to use to replace existing pods with new ones.

   template     <Object> -required-
     Template describes the pods that will be created.

# Inspect the update strategy
kubectl explain deploy.spec.strategy
KIND:     Deployment
VERSION:  apps/v1

RESOURCE: strategy <Object>

DESCRIPTION:
     The deployment strategy to use to replace existing pods with new ones.

     DeploymentStrategy describes how to replace existing pods with new ones.

FIELDS:
   rollingUpdate        <Object>
     Rolling update config params. Present only if DeploymentStrategyType =
     RollingUpdate.

   type <string>
     Type of deployment. Can be "Recreate" or "RollingUpdate". Default is
     RollingUpdate.

# Two types are supported: Recreate and RollingUpdate
# Recreate: all existing Pods are deleted before the new ones are created,
#   so the service is briefly unavailable
# RollingUpdate: Pods are replaced gradually; rollingUpdate sets how many Pods above
#   or below the desired count are allowed, i.e. how aggressive the update is

kubectl explain deploy.spec.strategy.rollingUpdate
KIND:     Deployment
VERSION:  apps/v1

RESOURCE: rollingUpdate <Object>

DESCRIPTION:
     Rolling update config params. Present only if DeploymentStrategyType =
     RollingUpdate.

     Spec to control the desired behavior of rolling update.

FIELDS:
   maxSurge     <string>
     The maximum number of pods that can be scheduled above the desired number
     of pods. Value can be an absolute number (ex: 5) or a percentage of desired
     pods (ex: 10%). This can not be 0 if MaxUnavailable is 0. Absolute number
     is calculated from percentage by rounding up. Defaults to 25%. Example:
     when this is set to 30%, the new ReplicaSet can be scaled up immediately
     when the rolling update starts, such that the total number of old and new
     pods do not exceed 130% of desired pods. Once old pods have been killed,
     new ReplicaSet can be scaled up further, ensuring that total number of pods
     running at any time during the update is at most 130% of desired pods.
   # maxSurge: how many Pods may exist above the desired replica count during the update.
   # It is either an absolute number or a percentage: with 5 replicas, 20% allows
   # 1 extra Pod and 40% allows 2 extra Pods

   maxUnavailable       <string>
     The maximum number of pods that can be unavailable during the update. Value
     can be an absolute number (ex: 5) or a percentage of desired pods (ex:
     10%). Absolute number is calculated from percentage by rounding down. This
     can not be 0 if MaxSurge is 0. Defaults to 25%. Example: when this is set
     to 30%, the old ReplicaSet can be scaled down to 70% of desired pods
     immediately when the rolling update starts. Once new pods are ready, old
     ReplicaSet can be scaled down further, followed by scaling up the new
     ReplicaSet, ensuring that the total number of pods available at all times
     during the update is at least 70% of desired pods.
   # maxUnavailable: how many Pods may be unavailable during the update, for example
   #   maxUnavailable: 1
```

With 5 replicas and maxUnavailable: 1, at least 4 Pods stay available during the update.

A Deployment is a three-level structure: the Deployment manages ReplicaSets, and each ReplicaSet manages Pods.

Example: create Pods with a Deployment

```shell
[root@k8s-master1 blue-green]# cat deploy-demo.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-v1
  namespace: blue-green
spec:
  replicas: 2
  selector:
    matchLabels:
      app: myapp
      version: v1
  template:
    metadata:
      labels:
        app: myapp
        version: v1
    spec:
      containers:
      - name: myapp
        image: hebye/myapp:v1
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
```

```shell
# Apply the manifest
[root@k8s-master1 blue-green]# kubectl apply -f deploy-demo.yaml
deployment.apps/myapp-v1 created

# Check the Deployment; the controller is named myapp-v1
[root@k8s-master1 blue-green]# kubectl get deploy -n blue-green
NAME       READY   UP-TO-DATE   AVAILABLE   AGE
myapp-v1   2/2     2            2           56s

# Creating a Deployment also creates a ReplicaSet (rs). The suffix 9d8c596bd is the
# pod-template-hash, a hash of the Pod template the Deployment uses
[root@k8s-master1 blue-green]# kubectl get rs -n blue-green
NAME                 DESIRED   CURRENT   READY   AGE
myapp-v1-9d8c596bd   2         2         2       98s

# List the Pods it manages
[root@k8s-master1 blue-green]# kubectl get pods -n blue-green
NAME                       READY   STATUS    RESTARTS   AGE
myapp-v1-9d8c596bd-rpd9w   1/1     Running   0          4m21s
myapp-v1-9d8c596bd-tg42d   1/1     Running   0          4m21s
```

When an application is managed by a Deployment, you can update it by editing its manifest. For example, to change the replica count from 2 to 3, edit deploy-demo.yaml and set replicas to 3:

```yaml
spec:
  replicas: 3
```

Save and quit, then apply it:

```shell
[root@k8s-master1 blue-green]# kubectl apply -f deploy-demo.yaml
# Unlike create, apply can be run any number of times; running create a second time
# fails because the object already exists
[root@k8s-master1 blue-green]# kubectl get pods -n blue-green
NAME                       READY   STATUS    RESTARTS   AGE
myapp-v1-9d8c596bd-dhxmr   1/1     Running   0          6s
myapp-v1-9d8c596bd-rpd9w   1/1     Running   0          7m40s
myapp-v1-9d8c596bd-tg42d   1/1     Running   0          7m40s
[root@k8s-master1 blue-green]# kubectl get rs -n blue-green
NAME                 DESIRED   CURRENT   READY   AGE
myapp-v1-9d8c596bd   3         3         3       7m49s
```

The Deployment now runs 3 Pods, but the ReplicaSet is the same one as before: only its desired count changed, because scaling does not modify the Pod template.

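```shell
# Show the details of the myapp-v1 Deployment
[root@k8s-master1 blue-green]# kubectl describe deploy myapp-v1 -n blue-green
Name:                   myapp-v1
Namespace:              blue-green
CreationTimestamp:      Tue, 09 Nov 2021 15:47:19 +0800
Labels:                 <none>
Annotations:            deployment.kubernetes.io/revision: 1
Selector:               app=myapp,version=v1
Replicas:               3 desired | 3 updated | 3 total | 3 available | 0 unavailable
StrategyType:           RollingUpdate
MinReadySeconds:        0
RollingUpdateStrategy:  25% max unavailable, 25% max surge
```

RollingUpdateStrategy shows the defaults: at most 25% of the desired Pods may be unavailable, and at most 25% extra Pods may be created. With 3 replicas, 25% is 0.75 of a Pod: maxSurge rounds up to 1 extra Pod, while maxUnavailable rounds down to 0.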
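The rounding behind "25% of 3 Pods" can be checked with a little integer arithmetic. This sketch follows the rule stated in the `kubectl explain` output above (maxSurge percentages round up, maxUnavailable percentages round down); `resolve_count` is a hypothetical helper, not part of kubectl, and it only handles whole-number percentages such as `25%`:

```shell
#!/usr/bin/env bash
# Sketch: resolve a maxSurge / maxUnavailable value to a Pod count.
# An absolute value is used as-is; a percentage is taken of the desired
# replica count and rounded up for maxSurge, down for maxUnavailable.

resolve_count() {
  local replicas=$1 value=$2 field=$3      # field: maxSurge | maxUnavailable
  if [[ $value == *% ]]; then
    local pct=${value%\%}                  # strip the trailing "%"
    if [[ $field == maxSurge ]]; then
      echo $(( (replicas * pct + 99) / 100 ))   # round up
    else
      echo $(( replicas * pct / 100 ))          # round down
    fi
  else
    echo "$value"
  fi
}

# resolve_count 3 25% maxSurge         # 1
# resolve_count 3 25% maxUnavailable   # 0
```

With 3 replicas the defaults therefore behave like maxSurge=1, maxUnavailable=0, which is the one-up, one-down pattern visible in the next example.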

Example: test a rolling update

In one terminal, watch the Pods:

```shell
[root@k8s-master1 blue-green]# kubectl get pods -l app=myapp -n blue-green -w
NAME                       READY   STATUS    RESTARTS   AGE
myapp-v1-9d8c596bd-dhxmr   1/1     Running   0          5m18s
myapp-v1-9d8c596bd-rpd9w   1/1     Running   0          12m
myapp-v1-9d8c596bd-tg42d   1/1     Running   0          12m
```

Open a second terminal and change the image version:

```shell
[root@k8s-master1 blue-green]# vim deploy-demo.yaml
# change  image: hebye/myapp:v1  to  image: hebye/myapp:v2, then save and quit
[root@k8s-master1 blue-green]# kubectl apply -f deploy-demo.yaml
```

Back in the first (watching) terminal, the output looks like this:

```text
NAME                       READY   STATUS              RESTARTS   AGE
myapp-v1-9d8c596bd-dhxmr   1/1     Running             0          5m18s
myapp-v1-9d8c596bd-rpd9w   1/1     Running             0          12m
myapp-v1-9d8c596bd-tg42d   1/1     Running             0          12m
myapp-v1-fc4767449-bgr4z   0/1     Pending             0          0s
myapp-v1-fc4767449-bgr4z   0/1     Pending             0          0s
myapp-v1-fc4767449-bgr4z   0/1     ContainerCreating   0          0s
myapp-v1-fc4767449-bgr4z   1/1     Running             0          2s
myapp-v1-9d8c596bd-rpd9w   1/1     Terminating         0          15m
myapp-v1-fc4767449-ts5m6   0/1     Pending             0          0s
myapp-v1-fc4767449-ts5m6   0/1     Pending             0          0s
myapp-v1-fc4767449-ts5m6   0/1     ContainerCreating   0          0s
myapp-v1-9d8c596bd-rpd9w   0/1     Terminating         0          15m
myapp-v1-fc4767449-ts5m6   1/1     Running             0          1s
myapp-v1-9d8c596bd-dhxmr   1/1     Terminating         0          7m43s
myapp-v1-fc4767449-nbrb8   0/1     Pending             0          0s
myapp-v1-fc4767449-nbrb8   0/1     Pending             0          0s
myapp-v1-fc4767449-nbrb8   0/1     ContainerCreating   0          0s
myapp-v1-fc4767449-nbrb8   1/1     Running             0          1s
myapp-v1-9d8c596bd-rpd9w   0/1     Terminating         0          15m
myapp-v1-9d8c596bd-rpd9w   0/1     Terminating         0          15m
myapp-v1-9d8c596bd-tg42d   1/1     Terminating         0          15m
myapp-v1-9d8c596bd-dhxmr   0/1     Terminating         0          7m44s
myapp-v1-9d8c596bd-tg42d   0/1     Terminating         0          15m
myapp-v1-9d8c596bd-tg42d   0/1     Terminating         0          15m
myapp-v1-9d8c596bd-tg42d   0/1     Terminating         0          15m
myapp-v1-9d8c596bd-dhxmr   0/1     Terminating         0          7m55s
myapp-v1-9d8c596bd-dhxmr   0/1     Terminating         0          7m55s
```

Pending means the Pod is being scheduled, ContainerCreating means its container is being created, and Running means it is up. Each time a new Pod reaches Running, an old Pod is Terminating (being stopped), and so on until all Pods have been upgraded.
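The one-up, one-down order in this watch output follows from maxSurge=1 and maxUnavailable=0. The function below is a simplified model for reasoning about that order, not the real Deployment controller: it assumes every new Pod becomes Ready instantly and ignores probes and minReadySeconds, and `simulate_rollout` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Simplified model of a rolling update (not the real Deployment controller):
# every new Pod is assumed to become Ready instantly, so "available" = old + new.
# Prints one line per Pod created or terminated.
simulate_rollout() {
  local replicas=$1 surge=$2 unavailable=$3
  local old=$replicas new=0
  if (( surge == 0 && unavailable == 0 )); then
    echo "maxSurge and maxUnavailable cannot both be 0" >&2
    return 1
  fi
  while (( old > 0 || new < replicas )); do
    # Scale up the new ReplicaSet, never exceeding replicas + maxSurge Pods in total.
    while (( new < replicas && old + new < replicas + surge )); do
      new=$(( new + 1 ))
      echo "create    old=$old new=$new"
    done
    # Scale down the old ReplicaSet, keeping at least replicas - maxUnavailable Pods available.
    while (( old > 0 && old + new - 1 >= replicas - unavailable )); do
      old=$(( old - 1 ))
      echo "terminate old=$old new=$new"
    done
  done
}

# simulate_rollout 3 1 0   # create/terminate alternate, as in the watch output above
# simulate_rollout 3 1 1   # two old Pods can go at once, so the rollout finishes in fewer rounds
```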

In another terminal, run:

```shell
[root@k8s-master1 blue-green]# kubectl get rs -n blue-green
NAME                 DESIRED   CURRENT   READY   AGE
myapp-v1-9d8c596bd   0         0         0       20m
myapp-v1-fc4767449   3         3         3       4m52s
```

There are now two ReplicaSets: the second one (fc4767449) belongs to the upgraded version and the first one (9d8c596bd) to the previous version. The old ReplicaSet is kept, scaled to 0, so you can roll back at any time.

View the rollout history of the myapp-v1 Deployment:

```shell
[root@k8s-master1 blue-green]# kubectl rollout history deployment myapp-v1 -n blue-green
deployment.apps/myapp-v1
REVISION  CHANGE-CAUSE
1         <none>
2         <none>
```

Roll back as follows:

```shell
[root@k8s-master1 blue-green]# kubectl rollout undo deployment/myapp-v1 --to-revision=1 -n blue-green
deployment.apps/myapp-v1 rolled back
```

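3.3 Customizing the rolling update strategy

maxSurge and maxUnavailable control how a rolling update proceeds.

Allowed values:

- Absolute numbers:

1. maxUnavailable: [0, replicas]

2. maxSurge: [0, replicas]

Note: the two cannot both be 0.

- Percentages:

1. maxUnavailable: [0%, 100%], rounded down. For example, with 10 replicas, 5% is 0.5 Pods, which counts as 0.

2. maxSurge: [0%, 100%], rounded up. For example, with 10 replicas, 5% is 0.5 Pods, which counts as 1.

- Recommended configuration:

1. maxUnavailable == 0

2. maxSurge == 1

This is the default configuration we provide to users in production, following the smoothest principle: "one up, one down; up first, then down". An old Pod is destroyed only after one new Pod becomes Ready (as determined by its readiness probe). This keeps the service stable and the update smooth; the drawback is that it is slow.

- Summary:

maxUnavailable: the maximum number (or proportion of the desired replica count) of Pods that may be unavailable. The smaller it is, the more stable the service and the smoother the update.

maxSurge: the maximum number (or proportion of the desired replica count) of Pods that may exist above the desired count. The larger it is, the faster the update.

- Custom strategy

Change the strategy to maxUnavailable=1, maxSurge=1:

```shell
[root@k8s-master1 blue-green]# kubectl patch deployment myapp-v1 -p '{"spec":{"strategy":{"rollingUpdate": {"maxSurge":1,"maxUnavailable":1}}}}' -n blue-green
[root@k8s-master1 blue-green]# kubectl describe deployment myapp-v1 -n blue-green
Name:                   myapp-v1
Namespace:              blue-green
CreationTimestamp:      Tue, 09 Nov 2021 15:47:19 +0800
Labels:                 <none>
Annotations:            deployment.kubernetes.io/revision: 3
Selector:               app=myapp,version=v1
Replicas:               3 desired | 3 updated | 3 total | 3 available | 0 unavailable
StrategyType:           RollingUpdate
MinReadySeconds:        0
RollingUpdateStrategy:  1 max unavailable, 1 max surge
```

RollingUpdateStrategy now shows 1 max unavailable, 1 max surge, the values just set. With 3 desired replicas, this means at least 2 Pods stay available and at most 4 Pods exist at any time during an update.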
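The two limits quoted above are simple arithmetic on the resolved values. A minimal sketch, assuming maxSurge and maxUnavailable have already been resolved to absolute Pod counts; `rollout_bounds` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Sketch: Pod-count limits during a rolling update, given absolute
# maxSurge / maxUnavailable values (resolve percentages first).
rollout_bounds() {
  local replicas=$1 surge=$2 unavailable=$3
  local min_available=$(( replicas - unavailable ))   # never fewer available Pods than this
  local max_total=$(( replicas + surge ))             # never more Pods in total than this
  echo "min_available=$min_available max_total=$max_total"
}

# rollout_bounds 3 1 1   # the patched Deployment above
# rollout_bounds 5 0 1   # the 5-replica, maxUnavailable: 1 case from section 3.2
```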

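This is how the rolling update strategy is customized: by setting the strategy.rollingUpdate fields, which describe reports as RollingUpdateStrategy.

4. Canary release of an online service with Kubernetes

4.1 Introduction to canary releases

Where the name comes from: in the 17th century, British miners found that canaries are extremely sensitive to firedamp (methane). Even a tiny trace of the gas makes a canary stop singing, and once the concentration passes a certain level, the canary dies long before humans notice anything. With the primitive mining equipment of the time, miners took a canary down the shaft with them as a gas detector so they could evacuate in time.

Canary release (also called gray release or gray update): a canary release first deploys to one server, or to a small fraction such as 2% of servers, mainly to validate the new version with real traffic. This is called canary testing (in China usually "gray testing").

Simple canary testing is usually done manually; more sophisticated canary testing needs a mature monitoring infrastructure whose metrics show the health of the canary and serve as the basis for continuing or rolling back the release. If the canary test passes, all remaining V1 instances are upgraded to V2; if it fails, the canary is rolled back and the release is abandoned.

Advantages: flexible, with customizable policies; traffic can be shifted by proportion or by specific content (for example different accounts or parameters), and problems do not affect all users.

Disadvantages: because the canary does not cover all users, problems that show up can be hard to troubleshoot.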

4.2 Canary releases in Kubernetes

Open terminal tab 1 to watch the update:

```shell
[root@k8s-master1 blue-green]# kubectl get pods -l app=myapp -n blue-green -w
```

Open tab 2 and run:

```shell
[root@k8s-master1 blue-green]# kubectl set image deployment myapp-v1 myapp=hebye/myapp:v2 -n blue-green && kubectl rollout pause deployment myapp-v1 -n blue-green
deployment.apps/myapp-v1 image updated
error: deployments.apps "myapp-v1" is already paused
```

Back in tab 1, the output is:

```text
myapp-v1-9d8c596bd-cbjcw   0/1     Pending             0          0s
myapp-v1-9d8c596bd-cbjcw   0/1     Pending             0          0s
myapp-v1-fc4767449-c8q52   1/1     Terminating         0          2m1s
myapp-v1-9d8c596bd-cbjcw   0/1     ContainerCreating   0          0s
myapp-v1-9d8c596bd-j29cw   0/1     Pending             0          0s
myapp-v1-9d8c596bd-j29cw   0/1     Pending             0          0s
myapp-v1-9d8c596bd-j29cw   0/1     ContainerCreating   0          0s
myapp-v1-fc4767449-c8q52   0/1     Terminating         0          2m2s
myapp-v1-9d8c596bd-j29cw   1/1     Running             0          2s
myapp-v1-9d8c596bd-cbjcw   1/1     Running             0          2s
myapp-v1-fc4767449-c8q52   0/1     Terminating         0          2m13s
myapp-v1-fc4767449-c8q52   0/1     Terminating         0          2m13s
```

Note: the command above updates the image of the myapp container to hebye/myapp:v2 and pauses the rollout as soon as the first new Pods have been created. This is what we call a canary release. If, say, a few hours pass without problems, resume the rollout; the remaining steps then run and all Pods are upgraded.
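The manual canary steps in this section can be collected into a small script. This is a sketch under stated assumptions: `check_canary` is a hypothetical placeholder for whatever verification you actually use (manual testing, monitoring queries), and the Deployment, container, and image names are the ones from this example. How many new Pods start before the pause takes effect depends on timing and on maxSurge/maxUnavailable.

```shell
#!/usr/bin/env bash
# Sketch of a canary release: update the image, pause the rollout,
# verify, then either resume (promote) or roll back.
DEPLOY=myapp-v1
NS=blue-green
CONTAINER=myapp
NEW_IMAGE=hebye/myapp:v2

check_canary() {   # hypothetical placeholder: return 0 if the canary Pods look healthy
  return 0
}

canary_release() {
  kubectl set image "deployment/$DEPLOY" "$CONTAINER=$NEW_IMAGE" -n "$NS"
  kubectl rollout pause "deployment/$DEPLOY" -n "$NS"      # stop with only a few new Pods running
  if check_canary; then
    kubectl rollout resume "deployment/$DEPLOY" -n "$NS"   # promote: upgrade the remaining Pods
    kubectl rollout status "deployment/$DEPLOY" -n "$NS"   # wait until the rollout finishes
  else
    kubectl rollout resume "deployment/$DEPLOY" -n "$NS"   # a paused Deployment must be resumed before undo
    kubectl rollout undo "deployment/$DEPLOY" -n "$NS"     # back to the previous revision
  fi
}
```

The failure branch resumes before undoing because `kubectl rollout undo` refuses to roll back a paused Deployment.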

Resume the rollout:

```shell
[root@k8s-master1 blue-green]# kubectl rollout resume deployment myapp-v1 -n blue-green
```

Back in tab 1, the remaining Pods are now upgraded to the new version:

```text
myapp-v1-fc4767449-78qpj   1/1     Terminating         0          19m
myapp-v1-fc4767449-d6v9d   1/1     Terminating         0          19m
myapp-v1-9d8c596bd-p4mrj   0/1     Pending             0          0s
myapp-v1-9d8c596bd-p4mrj   0/1     Pending             0          0s
myapp-v1-9d8c596bd-p4mrj   0/1     ContainerCreating   0          0s
myapp-v1-fc4767449-d6v9d   0/1     Terminating         0          19m
myapp-v1-9d8c596bd-p4mrj   1/1     Running             0          2s
myapp-v1-fc4767449-78qpj   0/1     Terminating         0          19m
myapp-v1-fc4767449-d6v9d   0/1     Terminating         0          19m
myapp-v1-fc4767449-d6v9d   0/1     Terminating         0          19m
myapp-v1-fc4767449-78qpj   0/1     Terminating         0          19m
myapp-v1-fc4767449-78qpj   0/1     Terminating         0          19m
```

There are now two ReplicaSets:

```shell
[root@k8s-master1 blue-green]# kubectl get rs -n blue-green
NAME                 DESIRED   CURRENT   READY   AGE
myapp-v1-9d8c596bd   3         3         3       179m
myapp-v1-fc4767449   0         0         0       164m
```

Rollback: if the version you just released turns out to be faulty, you can roll it back.

List the existing revisions:

```shell
[root@master1 ~]# kubectl rollout history deployment myapp-v1 -n blue-green
deployment.apps/myapp-v1
REVISION  CHANGE-CAUSE
1         <none>
2         <none>
```

There are two revisions. By default, undo rolls back to the previous revision; --to-revision selects a specific one:

```shell
[root@master1 ~]# kubectl rollout undo deployment myapp-v1 -n blue-green --to-revision=1
```

5. Layer-7 proxy: the Ingress controller

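5.1 Introduction to Ingress

The official definition: Ingress forwards requests entering the cluster to services inside it, thereby exposing those services outside the cluster. Ingress can make Services inside the cluster reachable from outside through URLs, load-balance traffic, and provide name-based virtual hosting.

Put simply: you used to edit the Nginx configuration yourself to map each domain name to a Service. Ingress abstracts that step into an Ingress object that you create from YAML, so instead of touching Nginx every time, you edit the YAML and create or update the object. That raises the question: what takes care of Nginx?

That is the job of the Ingress Controller. It talks to the Kubernetes API, dynamically detects changes to the Ingress rules in the cluster, reads them, renders a piece of Nginx configuration from its own template, writes it into the Nginx running inside the Ingress Controller, and finally reloads Nginx. The workflow is shown in the figure below:

Ingress is in fact one of the standard resource types of the Kubernetes API. It is a set of rules that forward requests, based on DNS name (host) or URL path, to specified Service resources, publishing in-cluster services to traffic from outside the cluster. Note that an Ingress resource cannot pass traffic through by itself; it is only a collection of rules. Those rules need another component that listens on a socket and routes traffic according to the matching rules, and the component that listens on sockets on behalf of Ingress resources and forwards the traffic is the Ingress Controller.

**Note: unlike the Deployment controller, an Ingress controller does not run as part of kube-controller-manager. It is an add-on to the Kubernetes cluster, similar to CoreDNS, and has to be deployed on the cluster separately.**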
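To make the controller's job concrete, here is a toy version of the "read an Ingress rule, fill in an nginx template" step. It is only an illustration: `render_server_block` is hypothetical, the real ingress-nginx controller uses its own, much larger template and by default sends traffic to the Service's endpoints (Pod IPs) rather than to the Service's DNS name, and the `cluster.local` cluster domain is an assumption (the common default).

```shell
#!/usr/bin/env bash
# Toy illustration: render one Ingress rule (host, path, Service, port)
# into an nginx server block. A real controller writes its generated config
# into nginx and reloads it whenever the Ingress objects change.
render_server_block() {
  local host=$1 path=$2 service=$3 port=$4 namespace=${5:-default}
  cat <<EOF
server {
    listen 80;
    server_name $host;
    location $path {
        proxy_pass http://$service.$namespace.svc.cluster.local:$port;
    }
}
EOF
}

# render_server_block canary.hebye.com / nginx-v1 80
```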

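5.2 Introduction to the Ingress Controller

An Ingress Controller is a layer-7 load balancer: client requests first reach it, and it then reverse-proxies them to the backend Pods. Common layer-7 load balancers include nginx and Traefik. Taking the familiar nginx as an example, a request that reaches nginx is reverse-proxied through an upstream to the backend Pods. Because Pod IP addresses keep changing, a Service is placed in front of the Pods; the Service only groups them, so the configuration only needs to reference the Service.

5.3 Ingress and Ingress Controller: summary

The Ingress Controller is a controller that continuously talks to the Kubernetes API to track changes to backend Services and Pods (additions, deletions, and so on) in real time. It combines them with the rules defined in Ingress objects to generate configuration, dynamically updates the Nginx or Traefik load balancer described above, and reloads it so the configuration takes effect, which achieves automatic service discovery.

An Ingress defines the rules: which Service in the cluster requests for a given domain name are forwarded to. It is defined in YAML, and one or more Ingress rules can be defined for one or more Services.

5.4 How an Ingress Controller proxies applications inside Kubernetes

(1) Deploy the Ingress controller; here it is nginx.

(2) Create the Pod application, for example through a controller such as a Deployment.

(3) Create a Service to group the Pods.

(4) Create an HTTP Ingress and test access to the application over HTTP.

(5) Create an HTTPS Ingress and test access to the application over HTTPS.

How clients reach backend Pods through the layer-7 load balancer

When the layer-7 load balancer (Ingress controller) is used, a client request to an application inside the Kubernetes cluster flows as shown in the figure below: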

5.5 Installing the Nginx Ingress Controller

Upload the defaultbackend.tar.gz and nginx-ingress-controller.tar.gz images to k8s-node1; both components are deployed on that node.

Load the images:

```shell
[root@k8s-node1 ~]# docker load -i defaultbackend.tar.gz
[root@k8s-node1 ~]# docker load -i nginx-ingress-controller.tar.gz
```

Prepare the YAML files:

```shell
[root@k8s-master1 ingress-v1]# cat default-backend.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: default-http-backend
  labels:
    k8s-app: default-http-backend
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: default-http-backend
  template:
    metadata:
      labels:
        k8s-app: default-http-backend
    spec:
      terminationGracePeriodSeconds: 60
      containers:
      - name: default-http-backend
        # Any image is permissible as long as:
        # 1. It serves a 404 page at /
        # 2. It serves 200 on a /healthz endpoint
        image: registry.cn-hangzhou.aliyuncs.com/hachikou/defaultbackend:1.0
        livenessProbe:
          httpGet:
            path: /healthz      # health endpoint served by the default backend itself (returns 200)
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 30   # wait 30s after the container starts before the first probe
          timeoutSeconds: 5
        ports:
        - containerPort: 8080
        # resources:
        #   limits:
        #     cpu: 10m
        #     memory: 20Mi
        #   requests:
        #     cpu: 10m
        #     memory: 20Mi
      nodeName: k8s-node1
---
apiVersion: v1
kind: Service   # Service for the default backend
metadata:
  name: default-http-backend
  namespace: kube-system
  labels:
    k8s-app: default-http-backend
spec:
  ports:
  - port: 80
    targetPort: 8080
  selector:
    k8s-app: default-http-backend
```

```shell
[root@k8s-master1 ingress-v1]# cat nginx-ingress-controller-rbac.yml
```

```yaml
#apiVersion: v1
#kind: Namespace
#metadata:          # would create the namespace; kube-system already exists, so it is commented out
#  name: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount   # ServiceAccount used by the controller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1   # v1beta1 in older copies of this file; v1beta1 was removed in Kubernetes 1.22
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole   # cluster role bound to the ServiceAccount
rules:
- apiGroups:
  - ""
  resources:        # API resources this cluster role may access
  - configmaps
  - endpoints
  - nodes
  - pods
  - secrets
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - services
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - "extensions"
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - "extensions"
  resources:
  - ingresses/status
  verbs:
  - update
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: nginx-ingress-role   # a namespaced Role, not a ClusterRole
  namespace: kube-system
rules:              # permissions of the Role
- apiGroups:
  - ""
  resources:
  - configmaps
  - pods
  - secrets
  - namespaces
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - configmaps
  resourceNames:
  # Defaults to "<election-id>-<ingress-class>"
  # Here: "<ingress-controller-leader>-<nginx>"
  # This has to be adapted if you change either parameter
  # when launching the nginx-ingress-controller.
  - "ingress-controller-leader-nginx"
  verbs:
  - get
  - update
- apiGroups:
  - ""
  resources:
  - configmaps
  verbs:
  - create
- apiGroups:
  - ""
  resources:
  - endpoints
  verbs:
  - get
  - create
  - update
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding   # binds the Role
metadata:
  name: nginx-ingress-role-nisa-binding
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
- kind: ServiceAccount
  name: nginx-ingress-serviceaccount   # bound to this ServiceAccount
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding   # binds the ClusterRole
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
- kind: ServiceAccount
  name: nginx-ingress-serviceaccount   # the ClusterRole is granted to this ServiceAccount
  namespace: kube-system   # the binding is cluster-wide; this field only names the ServiceAccount's namespace
```


```shell
[root@k8s-master1 ingress-v1]# cat nginx-ingress-controller.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-ingress-controller
  labels:
    k8s-app: nginx-ingress-controller
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: nginx-ingress-controller
  template:
    metadata:
      labels:
        k8s-app: nginx-ingress-controller
    spec:
      # hostNetwork makes it possible to use ipv6 and to preserve the source IP correctly regardless of docker configuration
      # however, it is not a hard dependency of the nginx-ingress-controller itself and it may cause issues if port 10254 already is taken on the host
      # that said, since hostPort is broken on CNI (https://github.com/kubernetes/kubernetes/issues/31307) we have to use hostNetwork where CNI is used
      # like with kubeadm
      # hostNetwork: true   # commented out in the upstream template; it is set explicitly below
      terminationGracePeriodSeconds: 60
      hostNetwork: true   # the container shares the host's network, so nginx listens on the node's port 80
      serviceAccountName: nginx-ingress-serviceaccount   # the ServiceAccount created in the RBAC file
      containers:
      - image: registry.cn-hangzhou.aliyuncs.com/peter1009/nginx-ingress-controller:0.20.0   # controller image
        name: nginx-ingress-controller   # container name
        readinessProbe:   # the controller serves /healthz on port 10254 on the node it runs on
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
        livenessProbe:
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10   # wait 10s after start before the first liveness probe
          timeoutSeconds: 1
        ports:
        - containerPort: 80
          hostPort: 80   # map container port 80 to host port 80
        # - containerPort: 443
        #   hostPort: 443
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        args:
        - /nginx-ingress-controller
        - --default-backend-service=$(POD_NAMESPACE)/default-http-backend
        # - --default-ssl-certificate=$(POD_NAMESPACE)/ingress-secret   # used when HTTPS is enabled
      # nodeSelector:   # pins the node; it must be the same node as the default backend
      #   kubernetes.io/hostname: 10.3.1.17   # hostPort 80 is used above, so ports 80/443 on this node must be free
      nodeName: k8s-node1
```

Apply the YAML files:

```shell
[root@k8s-master1 ingress-v1]# kubectl apply -f default-backend.yaml
[root@k8s-master1 ingress-v1]# kubectl apply -f nginx-ingress-controller-rbac.yml
[root@k8s-master1 ingress-v1]# kubectl apply -f nginx-ingress-controller.yaml
```

The YAML files needed to install the Ingress controller are on GitHub:

https://github.com/kubernetes/ingress-nginx/

```shell
[root@k8s-master1 ingress-v1]# kubectl get pods -n kube-system | grep ingress
nginx-ingress-controller-86d8667cb7-5p9xl   1/1     Running   0          6m3s
```

The deployment succeeded.

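Notes:

default-backend.yaml and nginx-ingress-controller.yaml both set nodeName: k8s-node1, so the default backend and the nginx-ingress-controller are deployed on the k8s-node1 node. If your node's hostname is not k8s-node1, change it to your own hostname so that the Pods can be scheduled. Make sure the default-http-backend and nginx-ingress-controller Pods end up on the same node.

default-backend.yaml: this is the default backend the controller requires (it is passed with --default-backend-service). It serves a 404 page for requests that match no Ingress rule, and it exposes /healthz, which returns 200 and is checked by its own livenessProbe.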

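6. Canary releases with ingress-nginx

ingress-nginx is an Ingress controller for Kubernetes that supports Ingress annotations for gray releases and testing in different scenarios. Its nginx annotations support the following canary rules:

Assume two versions of a service are deployed: the old version and the canary version.

- nginx.ingress.kubernetes.io/canary-by-header: traffic splitting based on a request header, suitable for gray releases and A/B testing. When the request header is set to always, the request is always sent to the canary version; when it is set to never, the request is never sent to the canary.

- nginx.ingress.kubernetes.io/canary-by-header-value: the header value to match, which tells the Ingress to route the request to the service specified in the canary Ingress. When the request header is set to this value, the request is routed to the canary.

- nginx.ingress.kubernetes.io/canary-weight: traffic splitting based on service weight, suitable for blue-green deployments. The weight ranges from 0 to 100, and requests are routed to the service specified in the canary Ingress by that percentage. A weight of 0 means this canary rule sends no requests to the canary service, a weight of 60 sends 60% of traffic to the canary, and a weight of 100 sends all requests to the canary.

- nginx.ingress.kubernetes.io/canary-by-cookie: traffic splitting based on a cookie, suitable for gray releases and A/B testing. It names the cookie that tells the Ingress to route the request to the service specified in the canary Ingress. When the cookie value is always, the request is routed to the canary; when it is never, the request is never sent to the canary.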
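When several of these annotations are set at once, ingress-nginx evaluates them in a fixed order of precedence: canary-by-header, then canary-by-cookie, then canary-weight. The function below is a simplified model of that decision for a single request, written only to make the rules above concrete; it is not the controller's code, `route_request` is hypothetical, and it assumes weights out of 100 as described above.

```shell
#!/usr/bin/env bash
# Simplified model of the canary decision for one request.
# Args: header value sent by the client ("" if absent),
#       canary-by-header-value annotation ("" if unset),
#       cookie value sent by the client ("" if absent),
#       canary-weight (0-100), random number in [0, 100).
# Prints "canary" or "stable".
route_request() {
  local header=$1 header_value=$2 cookie=$3 weight=$4 rand=$5
  # 1. canary-by-header
  if [[ -n $header ]]; then
    if [[ -n $header_value ]]; then
      if [[ $header == "$header_value" ]]; then echo canary; return; fi
      # any other value is ignored and the next rule is checked
    elif [[ $header == always ]]; then
      echo canary; return
    elif [[ $header == never ]]; then
      echo stable; return
    fi
  fi
  # 2. canary-by-cookie
  if [[ $cookie == always ]]; then echo canary; return; fi
  if [[ $cookie == never ]]; then echo stable; return; fi
  # 3. canary-weight
  if (( rand < weight )); then echo canary; else echo stable; fi
}
```

For the header-based canary Ingress in this section (`canary-by-header: "Region"` with no header value and no weight), a request carrying `Region: always` corresponds to `route_request always "" "" 0 <any>` and therefore reaches nginx-v2.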

Deploy two versions of the service, using a simple nginx (OpenResty) as an example:

- Deploy v1:

```shell
[root@k8s-master1 v1]# cat v1.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-v1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
      version: v1
  template:
    metadata:
      labels:
        app: nginx
        version: v1
    spec:
      containers:
      - name: nginx
        image: "openresty/openresty:centos"
        ports:
        - name: http
          protocol: TCP
          containerPort: 80
        volumeMounts:
        - mountPath: /usr/local/openresty/nginx/conf/nginx.conf
          name: config
          subPath: nginx.conf
      volumes:
      - name: config
        configMap:
          name: nginx-v1
---
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    app: nginx
    version: v1
  name: nginx-v1
data:
  nginx.conf: |-
    worker_processes  1;
    events {
        accept_mutex on;
        multi_accept on;
        use epoll;
        worker_connections  1024;
    }
    http {
        ignore_invalid_headers off;
        server {
            listen 80;
            location / {
                access_by_lua '
                    local header_str = ngx.say("nginx-v1")
                ';
            }
        }
    }
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-v1
spec:
  type: ClusterIP
  ports:
  - port: 80
    protocol: TCP
    name: http
  selector:
    app: nginx
    version: v1
```

```shell
[root@k8s-master1 v1]# kubectl apply -f v1.yaml
[root@k8s-master1 v1]# kubectl get pods
NAME                        READY   STATUS    RESTARTS   AGE
nginx-v1-79bc94ff97-7vbpj   1/1     Running   0          6m51s
[root@k8s-master1 v1]# kubectl get svc
NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.0.0.1     <none>        443/TCP   33d
nginx-v1     ClusterIP   10.0.0.120   <none>        80/TCP    6m55s
```

- Then deploy v2:

```
[root@k8s-master1 v1]# cat v2.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-v2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
      version: v2
  template:
    metadata:
      labels:
        app: nginx
        version: v2
    spec:
      containers:
      - name: nginx
        image: "openresty/openresty:centos"
        ports:
        - name: http
          protocol: TCP
          containerPort: 80
        volumeMounts:
        - mountPath: /usr/local/openresty/nginx/conf/nginx.conf
          name: config
          subPath: nginx.conf
      volumes:
      - name: config
        configMap:
          name: nginx-v2
---
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    app: nginx
    version: v2
  name: nginx-v2
data:
  nginx.conf: |-
    worker_processes 1;
    events {
        accept_mutex on;
        multi_accept on;
        use epoll;
        worker_connections 1024;
    }
    http {
        ignore_invalid_headers off;
        server {
            listen 80;
            location / {
                access_by_lua '
                    local header_str = ngx.say("nginx-v2")
                ';
            }
        }
    }
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-v2
spec:
  type: ClusterIP
  ports:
  - port: 80
    protocol: TCP
    name: http
  selector:
    app: nginx
    version: v2
[root@k8s-master1 v1]# kubectl apply -f v2.yaml
deployment.apps/nginx-v2 created
configmap/nginx-v2 created
service/nginx-v2 created
```
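In the manifests shown here, v2.yaml differs from v1.yaml only in the object names (`nginx-v1` to `nginx-v2`), the `version` label and the text returned by Lua. As a sketch, a hypothetical helper `derive_v2` can generate it with `sed`. It deliberately matches `nginx-v1` and `version: v1` rather than a bare `v1`, so `apiVersion: apps/v1` and `apiVersion: v1` stay untouched.

```shell
# Hypothetical helper (not part of the original setup): turn the v1 manifest
# into the v2 manifest by renaming only the nginx-v1 objects and the
# "version: v1" label. apiVersion values are left alone because "apiVersion"
# has an upper-case V and never matches "version: v1".
derive_v2() {
  sed -e 's/nginx-v1/nginx-v2/g' \
      -e 's/version: v1$/version: v2/'
}
```

Usage: `derive_v2 < v1.yaml > v2.yaml`, then review the result with `diff v1.yaml v2.yaml` before applying it.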

- Deploy an Ingress that exposes the service outside the cluster and routes to the v1 service:

```
[root@k8s-master1 v1]# cat ingress-v1.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: nginx
  annotations:
    kubernetes.io/ingress.class: nginx
spec:
  rules:
  - host: canary.hebye.com
    http:
      paths:
      - backend:
          serviceName: nginx-v1
          servicePort: 80
        path: /
[root@k8s-master1 v1]# kubectl apply -f ingress-v1.yaml
[root@k8s-master1 v1]# kubectl get ingress
NAME    CLASS    HOSTS              ADDRESS   PORTS   AGE
nginx   <none>   canary.hebye.com             80      4s
[root@k8s-master1 v1]#
```
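The manifest above uses `extensions/v1beta1`, which Kubernetes 1.22 removed for Ingress, so newer clusters reject it. A sketch of the same stable Ingress for `networking.k8s.io/v1`, written to a file with a heredoc. It assumes an IngressClass named `nginx` exists (ingress-nginx installs typically create one; check with `kubectl get ingressclass`), and the file name `ingress-v1-networking.yaml` is hypothetical.

```shell
# Sketch: stable Ingress for the networking.k8s.io/v1 API.
# Assumptions: an IngressClass named "nginx" exists; the file name is hypothetical.
cat > ingress-v1-networking.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx
spec:
  ingressClassName: nginx
  rules:
  - host: canary.hebye.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx-v1
            port:
              number: 80
EOF
```

Apply it with `kubectl apply -f ingress-v1-networking.yaml`. The canary Ingresses later in this section convert the same way: keep their `nginx.ingress.kubernetes.io/canary*` annotations and change only the `spec` layout (`ingressClassName`, `pathType`, `service.name`, `service.port.number`).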

Verify access:

```
[root@k8s-node2 ~]# while sleep 0.2; do curl http://canary.hebye.com && echo ""; done
nginx-v1
nginx-v1
nginx-v1
```

- Header-based traffic splitting

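Create a canary Ingress that points to the v2 backend service and carries a few extra annotations. Requests with the header `Region: always` are routed to v2, and requests with `Region: never` are routed to v1. Because this canary defines no other rule, requests without the header, or with any other value, also go to v1.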

```
# Header-based: route traffic to the canary via a request header
[root@k8s-master1 v1]# cat ingress-header.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-by-header: "Region"
  name: nginx-canary
spec:
  rules:
  - host: canary.hebye.com
    http:
      paths:
      - backend:
          serviceName: nginx-v2
          servicePort: 80
        path: /
[root@k8s-master1 v1]# kubectl apply -f ingress-header.yaml
# List the current Ingresses
[root@k8s-master1 v1]# kubectl get ingress
NAME            CLASS    HOSTS              ADDRESS   PORTS   AGE
nginx-canary    <none>   canary.hebye.com             80      23s
web-canary-v1   <none>   canary.hebye.com             80      65m
[root@k8s-master1 v1]#
```

```
# Test access with the header
[root@k8s-node2 ~]# while sleep 0.2; do curl -H 'Region:always' http://canary.hebye.com && echo ""; done
nginx-v2
nginx-v2
nginx-v2
nginx-v2
# Test access without the header
[root@k8s-node2 ~]# while sleep 0.2; do curl http://canary.hebye.com && echo ""; done
nginx-v1
nginx-v1
nginx-v1
nginx-v1
nginx-v1
nginx-v1
```

- Cookie-based traffic splitting

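This works like the header approach, except that the cookie value cannot be customized: only `always` and `never` have a meaning. This example simulates a canary for users in the Chengdu region: only requests whose `user_from_cd` cookie is set to `always` are forwarded to the canary Ingress. First delete the header-based canary Ingress from the previous step, then create the new canary Ingress below.

To keep regular users away from v2 at first, testers can verify it by sending requests that carry the cookie.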

```
[root@k8s-master1 v1]# kubectl delete -f ingress-header.yaml
[root@k8s-master1 v1]# cat ingress-cookie.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-by-cookie: "user_from_cd"
  name: nginx-canary
spec:
  rules:
  - host: canary.hebye.com
    http:
      paths:
      - backend:
          serviceName: nginx-v2
          servicePort: 80
        path: /
[root@k8s-master1 v1]#
[root@k8s-master1 v1]# kubectl apply -f ingress-cookie.yaml
# Test access
[root@k8s-node2 ~]# while sleep 0.2; do curl --cookie "user_from_cd=always" http://canary.hebye.com && echo ""; done
nginx-v2
nginx-v2
nginx-v2
nginx-v2
nginx-v2
nginx-v2
```

As shown, only requests whose `user_from_cd` cookie is `always` are served by the v2 service.

- Weight-based traffic splitting

A weight-based canary Ingress is simpler: you directly specify the share of traffic to send to the canary. This example sends 10% of traffic to v2 (delete any previous canary Ingress first):

```
[root@k8s-master1 v1]# kubectl delete -f ingress-cookie.yaml
[root@k8s-master1 v1]# cat ingress-weight.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-weight: "10"
  name: nginx-canary
spec:
  rules:
  - host: canary.hebye.com
    http:
      paths:
      - backend:
          serviceName: nginx-v2
          servicePort: 80
        path: /
[root@k8s-master1 v1]# kubectl apply -f ingress-weight.yaml
# Test access
[root@k8s-node2 ~]# while sleep 0.2; do curl http://canary.hebye.com && echo ""; done
nginx-v1
nginx-v1
nginx-v1
nginx-v1
nginx-v1
nginx-v1
nginx-v1
nginx-v1
nginx-v1
nginx-v2
nginx-v1
nginx-v1
nginx-v2
nginx-v1
nginx-v1
nginx-v1
nginx-v2
```

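As shown, only a minority of responses (3 of the 17 here) come from v2. Over a sample this small the ratio is only approximate; across many requests it should settle near the configured 10% weight.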
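Eyeballing a short run gives only a rough picture of the split. A sketch of a hypothetical `tally_versions` helper that counts responses per version, so the observed share can be compared with the configured weight over many requests:

```shell
# Hypothetical helper: count responses per version.
# Reads one response body per line on stdin and skips blank lines
# (the test loops add an extra blank line via `echo`).
tally_versions() {
  awk 'NF { count[$0]++ } END { for (v in count) print v, count[v] }' | sort
}
```

Usage, for example: `for i in $(seq 200); do curl -s http://canary.hebye.com; echo; done | tally_versions`. With a 10% weight, expect the `nginx-v2` count to land near 20, with some random variation.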

```
# If v2 works correctly, raise the weight to 90: 90% of traffic goes to v2 and 10% still goes to v1
[root@k8s-master1 v1]# cat ingress-weight.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-weight: "90"
  name: nginx-canary
spec:
  rules:
  - host: canary.hebye.com
    http:
      paths:
      - backend:
          serviceName: nginx-v2
          servicePort: 80
        path: /
[root@k8s-master1 v1]#
```
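Instead of editing ingress-weight.yaml and re-applying it at each step, the weight annotation can also be changed in place with `kubectl annotate --overwrite`. A sketch wrapped in a hypothetical `set_canary_weight` helper that rejects values outside 0 to 100, assuming the default total weight of 100. The `KUBECTL` override exists only so the command can be printed (e.g. `KUBECTL=echo`) instead of run.

```shell
# Hypothetical helper: set the canary weight on an existing Ingress.
# Args: Ingress name, weight (integer 0-100, assuming the default total of 100).
# KUBECTL defaults to kubectl; set it to "echo" to print the command instead.
set_canary_weight() {
  ingress=$1 weight=$2
  case "$weight" in
    ''|*[!0-9]*) echo "weight must be an integer 0-100" >&2; return 1 ;;
  esac
  if [ "$weight" -gt 100 ]; then
    echo "weight must be an integer 0-100" >&2; return 1
  fi
  ${KUBECTL:-kubectl} annotate ingress "$ingress" \
    "nginx.ingress.kubernetes.io/canary-weight=$weight" --overwrite
}
```

For example, `set_canary_weight nginx-canary 90`. Keep ingress-weight.yaml in sync with the live value, otherwise the next `kubectl apply -f ingress-weight.yaml` sets the weight back to whatever the file says.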

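If there are still no problems, set the weight to 100 to send all traffic to v2.

- Combined rules (precedence from highest to lowest: header, cookie, weight)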

```
[root@k8s-master1 v1]# cat ingress-compose.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-by-header: "Region"
    nginx.ingress.kubernetes.io/canary-by-cookie: "user_from_cd"
    nginx.ingress.kubernetes.io/canary-weight: "90"
  name: nginx-canary
spec:
  rules:
  - host: canary.hebye.com
    http:
      paths:
      - backend:
          serviceName: nginx-v2
          servicePort: 80
        path: /
[root@k8s-master1 v1]#
```
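ingress-nginx documents the evaluation order of the canary rules as `canary-by-header`, then `canary-by-cookie`, then `canary-weight`: `always` in the header or cookie forces the canary, `never` forces the stable backend, and any other value falls through to the next rule. A hypothetical shell model of that order, to reason about which backend a request in the combined setup above would reach. It only mimics the documented behaviour (it is not the controller's implementation) and ignores `canary-by-header-value`; `roll` stands in for the controller's random draw.

```shell
# Hypothetical model of the documented canary precedence (not controller code).
# Args: header value, cookie value, roll (random draw, 1-100), weight (0-100).
# Prints "canary" (v2 here) or "stable" (v1 here).
canary_decision() {
  header=$1 cookie=$2 roll=$3 weight=$4
  case "$header" in            # 1. canary-by-header
    always) echo canary; return 0 ;;
    never)  echo stable; return 0 ;;
  esac
  case "$cookie" in            # 2. canary-by-cookie
    always) echo canary; return 0 ;;
    never)  echo stable; return 0 ;;
  esac
  if [ "$roll" -le "$weight" ]; then   # 3. canary-weight
    echo canary
  else
    echo stable
  fi
}
```

For example, `canary_decision never always 1 90` prints `stable`: a request with `Region: never` stays on v1 even if its `user_from_cd` cookie is `always`, and only requests carrying neither `always` nor `never` are split by the 90% weight.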