- 课程介绍

- 前期回顾

- 关于cfssl工具

- 关于kubeconfig文件

- 第一章:kubectl命令行工具使用详解

- 1.陈述式资源管理方法

- 1.管理名称空间

- 2.管理deployment资源

- 3.管理service资源

- 4.kubectl用法总结

- 2.声明式资源管理方法

- 1.陈述式资源管理方法的局限性

- 2.查看资源配置清单

- 3.解释资源配置清单

- 4.创建资源配置清单

- 5.应用资源配置清单

- 6.修改资源配置清单并应用

- 7.删除资源配置清单

- 8.声明式资源管理方法小结:

- 第二章:kubenetes的CNI网络插件-flannel

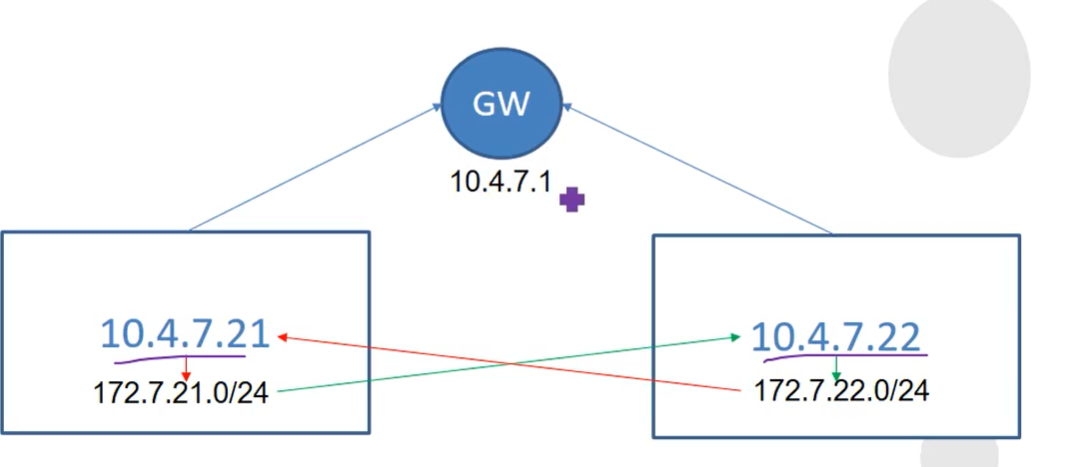

- 0.flannel的网络模型

- 1.集群规划

- 2.下载软件,解压,做软连接

- 3.拷贝证书

- 4.操作etcd,增加host-gw(只在一台运算节点操作即可)

- 5.配置子网信息

- 6.创建启动脚本

- 7.检查配置,权限,创建日志目录

- 8.创建supervisor配置

- 9.启动服务并检查

- 10.其他运算节点安装部署

- 11.验证pod网络互通

- 12.在各个节点上优化iptables规则

- 1.优化原因说明

- 1.制作nginx的镜像

- 2.创建nginx-ds.yaml

- 3.应用资源配置清单

- 4.可以发现,pod之间访问nginx显示的是代理的IP地址

- 5.优化pod之间不走原地址nat

- 6.把默认禁止规则删掉

- 7.在运算节点保存iptables规则

- 8.优化SNAT规则,各运算节点之间的各pod之间的网络通信不再出网

- 第三章:kubernetes的服务发现插件–coredns

- 1.部署K8S的内网资源配置清单http服务

- 2.部署coredns

- 1.准备coredns-v1.6.1镜像

- 2.准备资源配置清单

- 3.创建资源

- 4.验证

- 第四章:kubernetes的服务暴露插件–traefik

- 1.了解知识

- 1.使用NodePort型Service暴露服务

- 2.修改nginx-ds的service资源配置清单

- 3.查看service

- 4.浏览器访问

- 2.部署traefik(ingress控制器)

- 1.准备traefik进行

- 2.准备资源配置清单

- 3.依次创建资源

- 4.解析域名

- 5.配置反向代理

- 6.检查

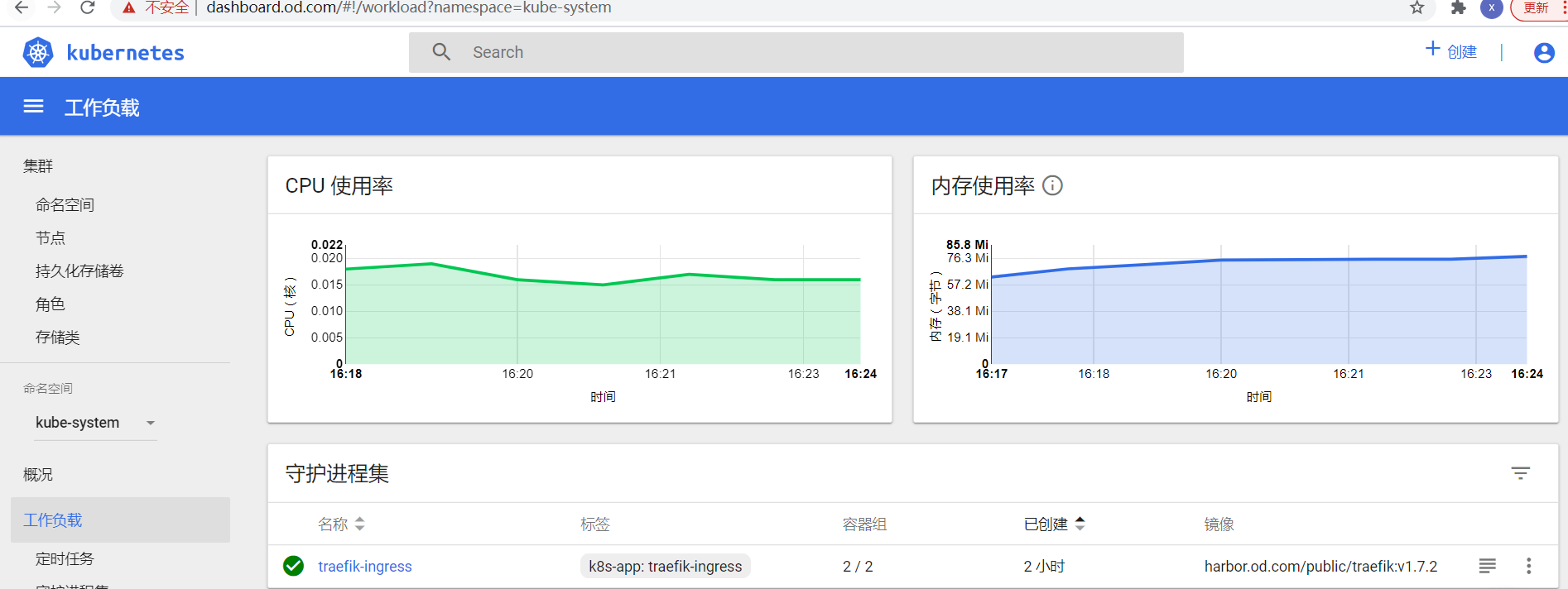

- 7.浏览器访问

- 第五章:前情回顾

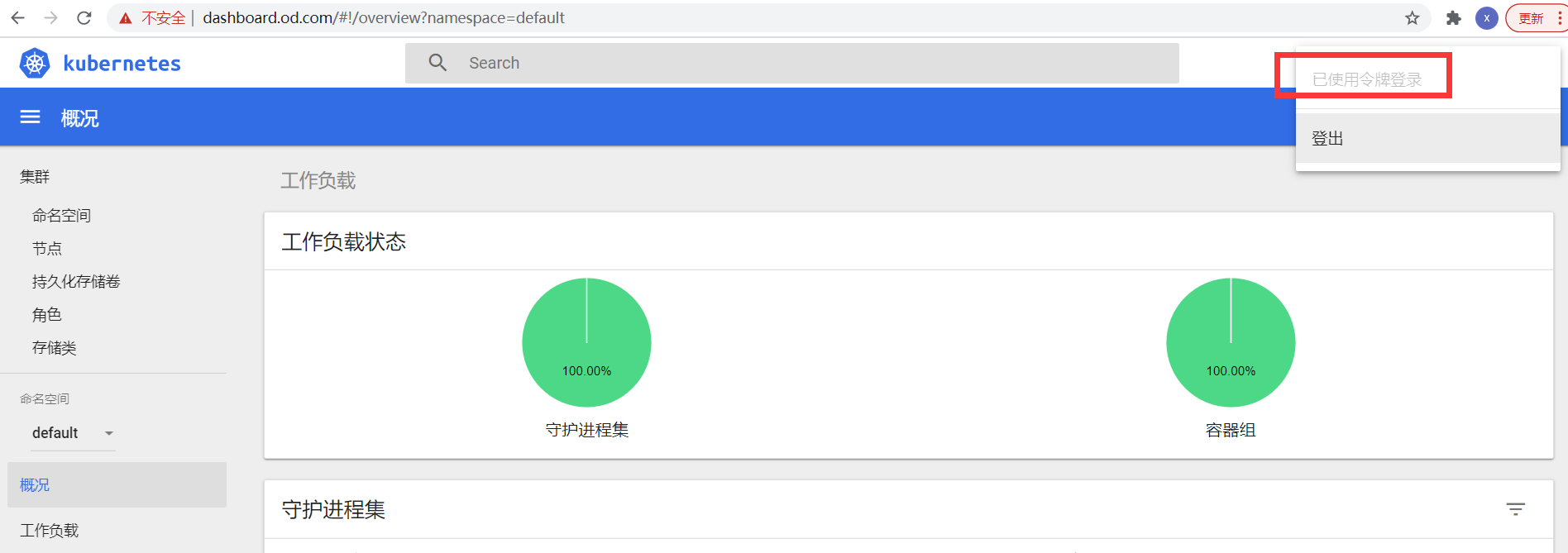

- 第六章:K8S的GUI资源管理插件-仪表盘

- 1.部署kubenetes-dashborad

- 1.准备dashboard镜像

- 2.准备资源配置清单

- 3.依次创建资源配置清单

- 4.解析域名

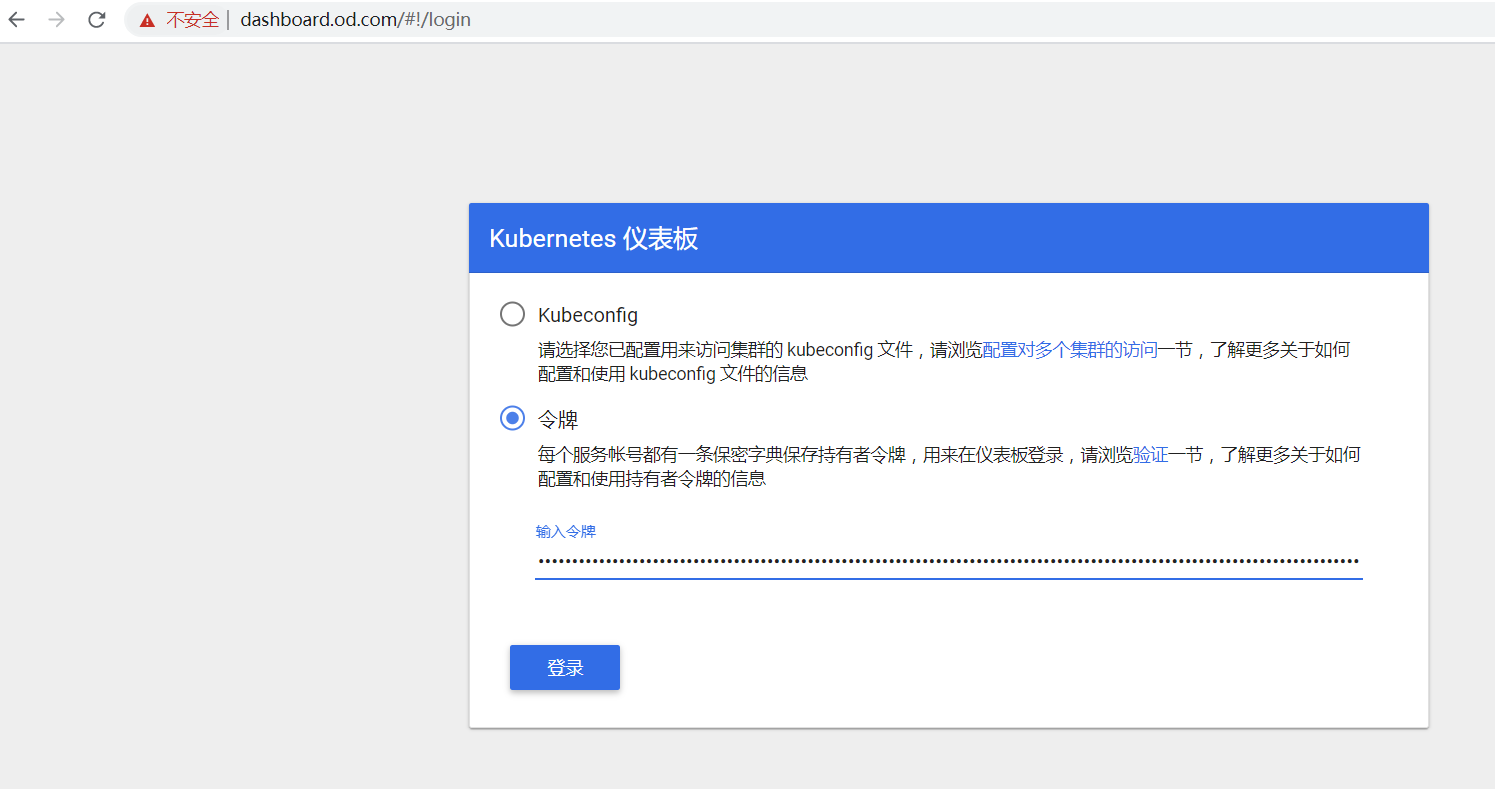

- 5.浏览器访问

- 6.配置SSL证书

- 1.签发dashboard.od.com证书

- 2.检查证书

- 3.配置nginx

- 4.获取kubernetes-dashboard-admin-token

- 5.验证token登录

- 6.升级dashboard为v1.10.1

- 第七章:部署heapster

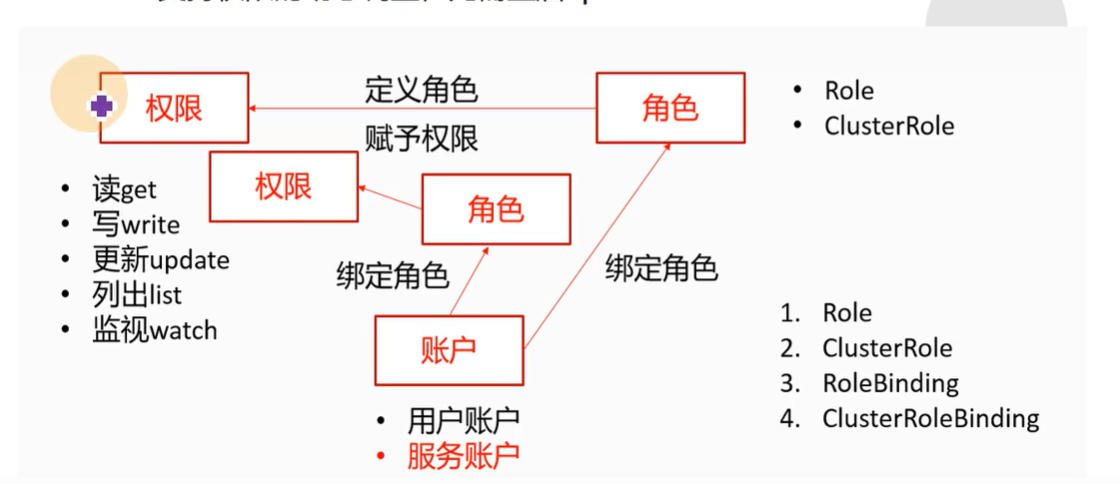

- 第八章:K8S的RBAC鉴权

- 第九章:K8S集群平滑升级

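Course introduction

This course builds a complete K8S ecosystem and, as a hands-on exercise, delivers a set of dubbo (Java) microservices onto it. We will implement the following capabilities step by step:

- Continuous integration

- Configuration center

- Monitoring and logging

- Log collection and analysis system

- Automated operations platform (ultimately an open-source PaaS platform built on K8S)

Recap

Key points for installing and deploying K8S from binaries:

- Prepare the base infrastructure

- CentOS 7.6 (kernel 3.8.x or later)

- Disable SELinux and firewalld

- Time synchronization

- Adjust the base and epel repositories

- Kernel tuning

- Install and deploy the bind9 internal DNS system

- Install and deploy Harbor, the private docker registry

- Prepare the cfssl certificate-signing environment

- Install and deploy the master node services (4)

- Etcd

- Apiserver

- Controller-manager

- scheduler

- Install and deploy the worker node services (2)

About the cfssl tool

- cfssl: the main tool for signing certificates

- cfssl-json: turns the certificates that cfssl emits (in JSON format) into certificate files

- cfssl-certinfo: inspects and verifies certificate information

List the information in the apiserver.pem certificate: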

[root@hdss7-200 ~]# cd /opt/certs/

[root@hdss7-200 certs]# ls apiserver.pem

apiserver.pem

[root@hdss7-200 certs]# cfssl-certinfo -cert apiserver.pem

{

"subject": {

"common_name": "apiserver",

"country": "CN",

"organization": "od",

"organizational_unit": "ops",

"locality": "beijing",

"province": "beijing",

"names": [

"CN",

"beijing",

"beijing",

"od",

"ops",

"apiserver"

]

},

"issuer": {

"common_name": "OldboyEdu",

"country": "CN",

"organization": "od",

"organizational_unit": "ops",

"locality": "beijing",

"province": "beijing",

"names": [

"CN",

"beijing",

"beijing",

"od",

"ops",

"OldboyEdu"

]

},

"serial_number": "27716320331827529174259696028778658707078846977",

"sans": [

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local",

"127.0.0.1",

"192.168.0.1",

"10.4.7.10",

"10.4.7.21",

"10.4.7.22",

"10.4.7.23",

"10.4.7.24"

],

"not_before": "2021-03-22T10:40:00Z",

"not_after": "2041-03-17T10:40:00Z",

"sigalg": "SHA256WithRSA",

"authority_key_id": "BE:4C:7A:A:2A:88:2E:C7:7B:F8:B:7D:B4:6F:AC:4C:66:AD:B9:26",

"subject_key_id": "D:E5:BB:7C:A2:6C:12:B7:57:72:32:A:44:36:70:BC:19:5E:E7:E1",

"pem": "-----BEGIN CERTIFICATE-----\nMIIEcTCCA1mgAwIBAgIUBNrXwPBpim9tebgWAWdZb1mjGgEwDQYJKoZIhvcNAQEL\nBQAwYDELMAkGA1UEBhMCQ04xEDAOBgNVBAgTB2JlaWppbmcxEDAOBgNVBAcTB2Jl\naWppbmcxCzAJBgNVBAoTAm9kMQwwCgYDVQQLEwNvcHMxEjAQBgNVBAMTCU9sZGJv\neUVkdTAeFw0yMTAzMjIxMDQwMDBaFw00MTAzMTcxMDQwMDBaMGAxCzAJBgNVBAYT\nAkNOMRAwDgYDVQQIEwdiZWlqaW5nMRAwDgYDVQQHEwdiZWlqaW5nMQswCQYDVQQK\nEwJvZDEMMAoGA1UECxMDb3BzMRIwEAYDVQQDEwlhcGlzZXJ2ZXIwggEiMA0GCSqG\nSIb3DQEBAQUAA4IBDwAwggEKAoIBAQDU5kvbSNfU0qnvKXG4c2hYAEHTzwjL68PI\nJjvm6rlii2X8AOjk2ea4x5zgk4J4xQ4pqpFBIzLbf9uWjcIcTLJD4MIiSVNYhsR9\nC5pYC/huImNjc6EWEfFra7p+e9O8Gj/jx8dX32Ct8JKphhlVsEKKYBDUPwqaVzQj\nHD+8Q/Mao+6r4hcDQjxtHLYVSsCJrq5WiGOUx3diZa585EEBD45k/ig2O08y66pU\nswZWBGjuynnPTXhs1kre0yHBMXhdF7KwSIT637TCU7fIqJa8hp8Wl4iWIlli7wRq\nwN52M773T4908MnCi6MmzoXMXmvJQ3o/VyYDhjAf+wOl6Ia4UMabAgMBAAGjggEh\nMIIBHTAOBgNVHQ8BAf8EBAMCBaAwEwYDVR0lBAwwCgYIKwYBBQUHAwEwDAYDVR0T\nAQH/BAIwADAdBgNVHQ4EFgQUDeW7fKJsErdXcjIKRDZwvBle5+EwHwYDVR0jBBgw\nFoAUvkx6CiqILsd7+At9tG+sTGatuSYwgacGA1UdEQSBnzCBnIISa3ViZXJuZXRl\ncy5kZWZhdWx0ghZrdWJlcm5ldGVzLmRlZmF1bHQuc3Zjgh5rdWJlcm5ldGVzLmRl\nZmF1bHQuc3ZjLmNsdXN0ZXKCJGt1YmVybmV0ZXMuZGVmYXVsdC5zdmMuY2x1c3Rl\nci5sb2NhbIcEfwAAAYcEwKgAAYcECgQHCocECgQHFYcECgQHFocECgQHF4cECgQH\nGDANBgkqhkiG9w0BAQsFAAOCAQEAGRnQNU6jhycNoSQxvYrIoceWkhs85THBkGG0\nksYhDjMI5q4z2j6wwc/0E0Vw23GNfeXBLFE8YpR/f3N81u9hDGrrk0EoVaCd3kmM\nfwOZN7ImOumQN/Y0Wq2KkrpdnuCN4+xfb5geE1sCxfKHW6v3/XW4L+6kOoxoatPI\n0IhDi4pr7ZD0uNSQHlWhqRIyBtaMy7v8XAA4G5UVNA8Z5cfwHGi+d+4Kcq1ts5r3\njmzje/aJSGLRrwZrtOsfkyk8LqEm+MWzjSC/D1TGt78o9dy8VpRjPjv81YOfJd/2\nafwRibZcG+blL0s6UEEPDO9eHsQy4sugXuhSx/HGr5GO3oOo7w==\n-----END CERTIFICATE-----\n"

}

[root@hdss7-200 certs]#

Inspect the certificate served for the hebye.com domain to see how it was issued, including its expiry time and issuing authority:

[root@hdss7-200 ~]# cfssl-certinfo -domain hebye.com

{

"subject": {

"common_name": "hebye.com",

"names": [

"hebye.com"

]

},

"issuer": {

"common_name": "R3",

"country": "US",

"organization": "Let's Encrypt",

"names": [

"US",

"Let's Encrypt",

"R3"

]

},

"serial_number": "394207557402313198909215062594528728388186",

"sans": [

"blog.hebye.com",

"docs.hebye.com",

"hebye.com",

"www.hebye.com"

],

"not_before": "2021-03-15T13:42:14Z",

"not_after": "2021-06-13T13:42:14Z",

"sigalg": "SHA256WithRSA",

"authority_key_id": "14:2E:B3:17:B7:58:56:CB:AE:50:9:40:E6:1F:AF:9D:8B:14:C2:C6",

"subject_key_id": "8D:E7:95:3A:68:65:85:54:95:F8:B8:3A:C1:6:28:13:71:5E:14:97",

"pem": "-----BEGIN CERTIFICATE-----\nMIIFRjCCBC6gAwIBAgISBIZ4zekVpOr58XoCkaoq2RJaMA0GCSqGSIb3DQEBCwUA\nMDIxCzAJBgNVBAYTAlVTMRYwFAYDVQQKEw1MZXQncyBFbmNyeXB0MQswCQYDVQQD\nEwJSMzAeFw0yMTAzMTUxMzQyMTRaFw0yMTA2MTMxMzQyMTRaMBQxEjAQBgNVBAMT\nCWhlYnllLmNvbTCCASIwDQYJKoZIhvcNAQEBBQADggEPADCCAQoCggEBANlzoJqs\nzrnvgSGi96gSvtBW707lnR1n4KhRZuQuht9KtGW5rR1+S7qfNqwDDCqPpNFDcsdT\ng786OYZCOpyWKvaJGu/Ct2yYsHnsYOJCnOHF4LinJTrXznf63zjB93iIFMfzVkYI\nRfFcDtE4RgqiSjzpE6g/+ojZSuD+BsUMKuh3dVOEluBsOZlB8IAc0uzAk1H0Bjhr\nlnGSiJT3aFYrfinkegNjM2/MjBbtCytWXcRt70KN7wr5noByiGqOh/vu9F/CNR7F\n8+sZehXuwH2jFEf8CpFrGQ9Ptt3dRLB0tQiLoZRsEGfsP/nRCWFMd3sDAhQz1exD\nwHQQWC1xGrGcCyUCAwEAAaOCAnIwggJuMA4GA1UdDwEB/wQEAwIFoDAdBgNVHSUE\nFjAUBggrBgEFBQcDAQYIKwYBBQUHAwIwDAYDVR0TAQH/BAIwADAdBgNVHQ4EFgQU\njeeVOmhlhVSV+Lg6wQYoE3FeFJcwHwYDVR0jBBgwFoAUFC6zF7dYVsuuUAlA5h+v\nnYsUwsYwVQYIKwYBBQUHAQEESTBHMCEGCCsGAQUFBzABhhVodHRwOi8vcjMuby5s\nZW5jci5vcmcwIgYIKwYBBQUHMAKGFmh0dHA6Ly9yMy5pLmxlbmNyLm9yZy8wQwYD\nVR0RBDwwOoIOYmxvZy5oZWJ5ZS5jb22CDmRvY3MuaGVieWUuY29tggloZWJ5ZS5j\nb22CDXd3dy5oZWJ5ZS5jb20wTAYDVR0gBEUwQzAIBgZngQwBAgEwNwYLKwYBBAGC\n3xMBAQEwKDAmBggrBgEFBQcCARYaaHR0cDovL2Nwcy5sZXRzZW5jcnlwdC5vcmcw\nggEDBgorBgEEAdZ5AgQCBIH0BIHxAO8AdgBElGUusO7Or8RAB9io/ijA2uaCvtjL\nMbU/0zOWtbaBqAAAAXg2VmI3AAAEAwBHMEUCIQCgE1Yc9PY7JGyOzDpNcUlue/Ml\nOhz8c9q+Li3qTInpAgIgd5h16P0qnZSW+P7T32eIQhUelMBx9rj5Obl5RW9bg70A\ndQB9PvL4j/+IVWgkwsDKnlKJeSvFDngJfy5ql2iZfiLw1wAAAXg2VmJbAAAEAwBG\nMEQCIE1yshgOztW15OwcfOe7TngaNcPKaBDZsJBqeB4pjrzwAiBuBAyd3E3Sez2w\n3/7W3O9LNdpGrTyurz4D0cFGRk652zANBgkqhkiG9w0BAQsFAAOCAQEAWGc+Tct9\nALSx2NZkgTKWxaANnCXCXJZk/AOFSDDFpq7ZucnrFejJoJnRQY8fvAsAKbf/9uY1\nLAQg3p7QgturmKcdA2qncou/omrqkAOXDf/xLHot83wEnPSYZ3J+QmfQMRYN6pxj\nOWwWMLOb6+PI4A+GYBMtxzHF6h1IxH61M38nOeOphv200jA3ondacOmwQoMm/pgE\nb8xi1s2OXefB/gWGLSQ1NT34RUp6l3E3TedKdkBWRX8bsriYjrVJudVoV00KpOH7\nuNl05W/iuKKQAfNNBbwT/olFRKlV5IVcbAp8k4A3Y/+haW8CHz3K17nspdJaoz/X\nICUfbwU7C0OOng==\n-----END CERTIFICATE-----\n"

}
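The field most worth checking in this output is usually the expiry time. Below is a minimal sketch for pulling it out, assuming the JSON layout shown above. `cert_not_after` is a hypothetical helper name, and it relies on grep and sed rather than a real JSON parser, so it is only suitable for quick checks.

```shell
# Hypothetical helper: read cfssl-certinfo JSON on stdin and print the
# value of the "not_after" field.
cert_not_after() {
  grep -o '"not_after": *"[^"]*"' | sed 's/^"not_after": *"//; s/"$//'
}

# Sample input copied from the hebye.com output above, so the sketch runs
# without cfssl-certinfo or network access.
sample='{"not_before": "2021-03-15T13:42:14Z", "not_after": "2021-06-13T13:42:14Z"}'
printf '%s\n' "$sample" | cert_not_after
```

On the signing host it could be used as `cfssl-certinfo -cert apiserver.pem | cert_not_after`.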

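[root@hdss7-200 ~]#

About the kubeconfig file

- It is the configuration file for a K8S user

- It embeds certificate data

- When a certificate expires or is replaced, this file must be replaced along with it

Use md5sum to check whether kubelet.kubeconfig is identical on both nodes: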

[root@hdss7-21 ~]# cd /opt/kubernetes/server/bin/conf/

[root@hdss7-21 conf]# md5sum kubelet.kubeconfig

2c3c286b5552745fdb735432d8ceae2b kubelet.kubeconfig

[root@hdss7-21 conf]#

[root@hdss7-22 ~]# cd /opt/kubernetes/server/bin/conf/

[root@hdss7-22 conf]# md5sum kubelet.kubeconfig

2c3c286b5552745fdb735432d8ceae2b kubelet.kubeconfig

[root@hdss7-22 conf]#

Locate the certificate data in kubelet.kubeconfig:

[root@hdss7-22 conf]# cat kubelet.kubeconfig

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUR0RENDQXB5Z0F3SUJBZ0lVVndMOFVsMTdCOStCZ3VXS0hMclc4RStiV1Vjd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1lERUxNQWtHQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjJKbGFXcHBibWN4RURBT0JnTlZCQWNUQjJKbAphV3BwYm1jeEN6QUpCZ05WQkFvVEFtOWtNUXd3Q2dZRFZRUUxFd052Y0hNeEVqQVFCZ05WQkFNVENVOXNaR0p2CmVVVmtkVEFlRncweU1UQXpNVGt4TXpFMU1EQmFGdzAwTVRBek1UUXhNekUxTURCYU1HQXhDekFKQmdOVkJBWVQKQWtOT01SQXdEZ1lEVlFRSUV3ZGlaV2xxYVc1bk1SQXdEZ1lEVlFRSEV3ZGlaV2xxYVc1bk1Rc3dDUVlEVlFRSwpFd0p2WkRFTU1Bb0dBMVVFQ3hNRGIzQnpNUkl3RUFZRFZRUURFd2xQYkdSaWIzbEZaSFV3Z2dFaU1BMEdDU3FHClNJYjNEUUVCQVFVQUE0SUJEd0F3Z2dFS0FvSUJBUUM3V2RNb1RLV2lIUUZWMVE3WWpPU1FReW9ra0FXMGtiWWMKeG5jQ3hLRDFNYUhHSXZVVEsyK2VtcjRqQW0wSFhqSlpmRVdtYk45dnprMk9hSy90eXNhaXk5S1hxRUdaem9IeQpuUzdlWmJDMHk1c1ZlWmRzUHQ2TnVvY3NOdUZXVGVxbFJ0ZXZUNGZQTjE2OUdNUVpJdnFJcnJJUjMyUzEvRzB5Cm12QmFKWjV1eWxIRUdXSmhTTXhmeWRNRWcxMTNEWm81Zk1MRXRTNTNZbGFmNW5KTFM1clFiTzV5MnM4SXNidzkKMnJQbFNJZG9UYU4zazFHa0ZoYWdjQmlIZE14bDY2d1VJYzdDVExBV09QZTVBQk4zNE1jWW9PazBRMHppMjA1Zwp3WUtOeEdMSVBwdmZlSzhyZU1vVDU2NlZJQ055K2RhMVBnMzNVMUF4aExjOFNUVmR0ZmY5QWdNQkFBR2paakJrCk1BNEdBMVVkRHdFQi93UUVBd0lCQmpBU0JnTlZIUk1CQWY4RUNEQUdBUUgvQWdFQ01CMEdBMVVkRGdRV0JCUysKVEhvS0tvZ3V4M3Y0QzMyMGI2eE1acTI1SmpBZkJnTlZIU01FR0RBV2dCUytUSG9LS29ndXgzdjRDMzIwYjZ4TQpacTI1SmpBTkJna3Foa2lHOXcwQkFRc0ZBQU9DQVFFQXM3MzVVSWtvNG5vZ1c3QllmTFZsNWJuQS9PZi8zZGlxCnp6L2c3MXJCRUNDV0xwWi8wVE1MZnBHdlVJMGhSaU5vZ1BxRXg0U0ZsZkpWb0ZncktXd1lETjBOSmFUcm9mY1oKUGFqeGJGQlV0U3pXZGkvOHR2TDc5R1JzNVFibkh2MjdhV1dPMGtTQmQ1eGpxUFpCZ1JnR1J5dXRtRFpOczBUbQpYcmdhTWdadk5jUFJpK3pleUIzbnRhNzhSclRsNlhrQnQ2YzBzZmtrS3ZCNVQ2QXM3azBZSkpVN2x5Ly9heFkzCnAzSDNtMXVkMVlyK29BWW9ESVNNNUd3UTh2c1ZDdFpRWlBkYnZNMmhzQks2R29iZHFlZ2dwcjcza25vZFRCSWcKaTdzbDBSZDd4OEdsdlNYS3oxTzJWeDIvMG1hZEYzckd2L1hNZXhTUjJXWVM4VnRBTXU2ZFl3PT0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

server: https://10.4.7.10:7443

name: myk8s

contexts:

- context:

cluster: myk8s

user: k8s-node

name: myk8s-context

current-context: myk8s-context

kind: Config

preferences: {}

users:

- name: k8s-node

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUR3akNDQXFxZ0F3SUJBZ0lVYkZlR3F2bWNsZmhxNkowV01yVVVvYTJ0bSs4d0RRWUpLb1pJaHZjTkFRRUwKQlFBd1lERUxNQWtHQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjJKbGFXcHBibWN4RURBT0JnTlZCQWNUQjJKbAphV3BwYm1jeEN6QUpCZ05WQkFvVEFtOWtNUXd3Q2dZRFZRUUxFd052Y0hNeEVqQVFCZ05WQkFNVENVOXNaR0p2CmVVVmtkVEFlRncweU1UQXpNakl4TURNeE1EQmFGdzAwTVRBek1UY3hNRE14TURCYU1GOHhDekFKQmdOVkJBWVQKQWtOT01SQXdEZ1lEVlFRSUV3ZGlaV2xxYVc1bk1SQXdEZ1lEVlFRSEV3ZGlaV2xxYVc1bk1Rc3dDUVlEVlFRSwpFd0p2WkRFTU1Bb0dBMVVFQ3hNRGIzQnpNUkV3RHdZRFZRUURFd2hyT0hNdGJtOWtaVENDQVNJd0RRWUpLb1pJCmh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTCtIR240a2N2R284YXBBL09STllLVEc1dGFick1raGVnUjUKd1RQOUorNUlqZjBCWmVmVHI5OEFaUzZ5VTU5MDExYWdpNkplWHlzYVFSaDBaaWNDODNuaVUxUmpOUzgzNlY5cApGMEh4RkdCa2kzaUlVcXV4ZGNyU3J5cXhDNk82MDR4U2QxWTdjYldIdmtTZ1lFUFRaWDczWDUxUXZYSkVSYnZPCmVJR2dqR3RaVTJvYmxLTUR5VWNDelBncFU3d1FpM2Ewa1g0V1N6VnpnRXN5dkdacGlhbXBRUHlwTldkOVAweHcKSVBlV2ZLVXNXd0xuSG45ZWFyQ2VCRFRBTW5KdVFhYit6T2ZzVEJkSWVSVUpEMkVCMkZibjdBRG9VN1JMQ09TTwppY0wwcFFDbEg2MDR1MDVaamxUTHJyeUdRcDh1VnpPekFhckFXS1JXcVdyU0paSWwrbU1DQXdFQUFhTjFNSE13CkRnWURWUjBQQVFIL0JBUURBZ1dnTUJNR0ExVWRKUVFNTUFvR0NDc0dBUVVGQndNQ01Bd0dBMVVkRXdFQi93UUMKTUFBd0hRWURWUjBPQkJZRUZFcE5CWTdYdVRVYkNIRDZ5M0c4cktnNnJheTFNQjhHQTFVZEl3UVlNQmFBRkw1TQplZ29xaUM3SGUvZ0xmYlJ2ckV4bXJia21NQTBHQ1NxR1NJYjNEUUVCQ3dVQUE0SUJBUUJRbFdTaGZyZmcwZTVQCjc2NHlvcU5hbWlVMllaRERHTnRqQUlhUWZBc05NdWdBRFJBeFZBZUdWMzdSUUY5K3kzNGVVSmVETnVJVnl6WTkKRGNKbmYrQlpqdTNEdUp4QitUR1dsazA1NERLQWhDd3FsWExldkJldlo4WTBTQTZTU2hFRjFqT252WFlsMXJxdwpBT1RnbFRlU3NRVGF5a1h6RXQ3R0xhUVIxdk1XVzlUaWFYWE81aXVjMEErcVBwM25lVkNBVmYrOVdLcmxBOHluCjNhMFFXN05pZmlzUTBjb3VDUm9mY01kTmc5OG5QTis5ZlgxdDRwdTVkRVNXbDM5OHFUbW1qTkN2d1lHSHNYOU0KaDVJeUhXc3lETXorSU9XemxrSHNtR1RhNlZ5SzdPTkhiMHd1V01UNnlnMTFVRzIzb2xWSlgwRHQrV284VmdvSQp3MmFndGZ6eAotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFcEFJQkFBS0NBUUVBdjRjYWZpUnk4YWp4cWtEODVFMWdwTWJtMXB1c3lTRjZCSG5CTS8wbjdraU4vUUZsCjU5T3Yzd0JsTHJKVG4zVFhWcUNMb2w1Zkt4cEJHSFJtSndMemVlSlRWR00xTHpmcFgya1hRZkVVWUdTTGVJaFMKcTdGMXl0S3ZLckVMbzdyVGpGSjNWanR4dFllK1JLQmdROU5sZnZkZm5WQzlja1JGdTg1NGdhQ01hMWxUYWh1VQpvd1BKUndMTStDbFR2QkNMZHJTUmZoWkxOWE9BU3pLOFptbUpxYWxBL0trMVozMC9USEFnOTVaOHBTeGJBdWNlCmYxNXFzSjRFTk1BeWNtNUJwdjdNNSt4TUYwaDVGUWtQWVFIWVZ1ZnNBT2hUdEVzSTVJNkp3dlNsQUtVZnJUaTcKVGxtT1ZNdXV2SVpDbnk1WE03TUJxc0JZcEZhcGF0SWxraVg2WXdJREFRQUJBb0lCQURSbTk2V3B2VlZIUHcreApCa0JFdDN0OS9DeWRuVkhPZWY3OWZSSUhZc1I4VDNUNHkvUDQ1RGFrZWdxanVlTjM2VzhITUEwMXV0NGxLY2hTCkdKejEzcjNyWVpkR0tZZ0l5UzVVcDR0Z05ZNEdGRzdmQlpkNlQxczE5NzA3Z1k3RGtCdmxlRWM4cm1qWGdnUnUKeU1mbDcvQWtLS0gzTkU0dVJkSVR0TDdxdVdDcEw0NmpvVy91SkZXZkl1L0Y5V3lsaUdhOW1ZOVVYdm1UVU5IUgpPdk9QL3RDbGQwckFuODR5U25xZ0NBQ1Y1RHRBa2Vwb3hybFNxSFlVV0J2ejQwZHNNaEY2bXE2Q1Y5cTJnQ25KCkYwRVIwT3hJYXNQUGhNQVVua216bkw4RE55V2V5elkyWDd6eXNXMStxMFdZRFk4UVkwaGs3d2JFdEZkR1BReisKRWpGbzA5a0NnWUVBN0d2NEJsR2oxQnVSbGNpMUdNdWR2TUhBdFdqL21BS294TDZXaGV5aUlwenVPQklmMnFvMgoxaXh3ZHVOelBkK2pjamRXS0FVV09mUWtzVGVVYUFzWUpiZTJIcUdhSzA2TnhIOUFieTdiWmtaOUlucUIyVmxUClBabElsTWxzNjZwVmFsSmtnc2FtZlFVS3VLc0FkLzBxY2ZvdG5Sc0hwWEFQVHpSRzRkZUFYUDBDZ1lFQXoyTm4KRWc2OGJQdzR2Z1NuT1lSbXI0V25MSVVNckZpaXp3SEFlc1ByZlVGb2xoa0pxdTkvL29Vc0VpMW96aHd5MzhJWApQRkd2Nmw1dk5xRjB1bkFHTlZMaWRKdkVuQmZqMHlYZ21LQjNtSW9DVW5PMUxjUGw5UHBvK001cUVZYzVuQzg0CkJIVWEwQmQ4cGpwZFJYaFhjRzJXQlB0Q1lxZHAvM2NvNU10M0F0OENnWUVBMjFDeExWTndrYVBkWXNCa1ZwTVUKU1hUSENzSlRVRFV2VUF1bzRLV0tKbWZEaUpvdm1JNEwvcFFNNUF6TTY5blk2bXd3N1VFQ3hGSVo4NWVtZ1BuQgp0Y045RzE4My8vS0lDbjh0UzdhQTZwaDdIby9jZ3I2ZFBHaEViMW1IUS9xbjc5QnMwdS9xbzlFWWlBU0JrODF6CklYWTkrQjZKOGt0SXVHVzdWMmVzK1JVQ2dZRUF1OW5iUzlVRFFtajRjTTVBTnU1Q0lTMGNMMHhaSFdld0dYZ0oKeEduZ3pmVklhZVZHQjRxblVvR0lXUEsrNHl0UnZiTE9Yem5TOGFVV2NkS3ZyQXk2NHVRdjhkWUNzaTFGbFVYUworZzBvSjRpaTc5S1ZRMTRWMXVCWDR6NzlmdUVSQXZNV2Q1c25iV1JJNlQwbXJUMkRYbmcxRWxBUll0RW9SMW5GCk5mRkMzTnNDZ1lBZWRBNXZuclk5bVFva0F
UUWJLeWlhUTdVc1l1WUZFa1NDMDR6Z3ZEdUg5NzluUjhaRjZ0bHMKSVRUS0hTcXpwanNmc3U1d0NXeXpZL1dTazhPV0Q1K1VxaVZMdk1INkRQQnhUSVdtMjc0akU3dHBnTWhRcGN6ago2QmJTRVF3R2JSSVZRMzN4SDhFZm5taUZzVGFqU0VlUG5DZ3FuWVdHVm5PaWtYNVJHdFZSS3c9PQotLS0tLUVORCBSU0EgUFJJVkFURSBLRVktLS0tLQo=

[root@hdss7-22 conf]#

# Decode the client-certificate-data field of the k8s-node user into a PEM file

[root@hdss7-22 conf]# echo "LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUR3akNDQXFxZ0F3SUJBZ0lVYkZlR3F2bWNsZmhxNkowV01yVVVvYTJ0bSs4d0RRWUpLb1pJaHZjTkFRRUwKQlFBd1lERUxNQWtHQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjJKbGFXcHBibWN4RURBT0JnTlZCQWNUQjJKbAphV3BwYm1jeEN6QUpCZ05WQkFvVEFtOWtNUXd3Q2dZRFZRUUxFd052Y0hNeEVqQVFCZ05WQkFNVENVOXNaR0p2CmVVVmtkVEFlRncweU1UQXpNakl4TURNeE1EQmFGdzAwTVRBek1UY3hNRE14TURCYU1GOHhDekFKQmdOVkJBWVQKQWtOT01SQXdEZ1lEVlFRSUV3ZGlaV2xxYVc1bk1SQXdEZ1lEVlFRSEV3ZGlaV2xxYVc1bk1Rc3dDUVlEVlFRSwpFd0p2WkRFTU1Bb0dBMVVFQ3hNRGIzQnpNUkV3RHdZRFZRUURFd2hyT0hNdGJtOWtaVENDQVNJd0RRWUpLb1pJCmh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTCtIR240a2N2R284YXBBL09STllLVEc1dGFick1raGVnUjUKd1RQOUorNUlqZjBCWmVmVHI5OEFaUzZ5VTU5MDExYWdpNkplWHlzYVFSaDBaaWNDODNuaVUxUmpOUzgzNlY5cApGMEh4RkdCa2kzaUlVcXV4ZGNyU3J5cXhDNk82MDR4U2QxWTdjYldIdmtTZ1lFUFRaWDczWDUxUXZYSkVSYnZPCmVJR2dqR3RaVTJvYmxLTUR5VWNDelBncFU3d1FpM2Ewa1g0V1N6VnpnRXN5dkdacGlhbXBRUHlwTldkOVAweHcKSVBlV2ZLVXNXd0xuSG45ZWFyQ2VCRFRBTW5KdVFhYit6T2ZzVEJkSWVSVUpEMkVCMkZibjdBRG9VN1JMQ09TTwppY0wwcFFDbEg2MDR1MDVaamxUTHJyeUdRcDh1VnpPekFhckFXS1JXcVdyU0paSWwrbU1DQXdFQUFhTjFNSE13CkRnWURWUjBQQVFIL0JBUURBZ1dnTUJNR0ExVWRKUVFNTUFvR0NDc0dBUVVGQndNQ01Bd0dBMVVkRXdFQi93UUMKTUFBd0hRWURWUjBPQkJZRUZFcE5CWTdYdVRVYkNIRDZ5M0c4cktnNnJheTFNQjhHQTFVZEl3UVlNQmFBRkw1TQplZ29xaUM3SGUvZ0xmYlJ2ckV4bXJia21NQTBHQ1NxR1NJYjNEUUVCQ3dVQUE0SUJBUUJRbFdTaGZyZmcwZTVQCjc2NHlvcU5hbWlVMllaRERHTnRqQUlhUWZBc05NdWdBRFJBeFZBZUdWMzdSUUY5K3kzNGVVSmVETnVJVnl6WTkKRGNKbmYrQlpqdTNEdUp4QitUR1dsazA1NERLQWhDd3FsWExldkJldlo4WTBTQTZTU2hFRjFqT252WFlsMXJxdwpBT1RnbFRlU3NRVGF5a1h6RXQ3R0xhUVIxdk1XVzlUaWFYWE81aXVjMEErcVBwM25lVkNBVmYrOVdLcmxBOHluCjNhMFFXN05pZmlzUTBjb3VDUm9mY01kTmc5OG5QTis5ZlgxdDRwdTVkRVNXbDM5OHFUbW1qTkN2d1lHSHNYOU0KaDVJeUhXc3lETXorSU9XemxrSHNtR1RhNlZ5SzdPTkhiMHd1V01UNnlnMTFVRzIzb2xWSlgwRHQrV284VmdvSQp3MmFndGZ6eAotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg=="|base64 -d >/tmp/1.pem

# Verify

[root@hdss7-200 ~]# cfssl-certinfo -cert 1.pem

{

"subject": {

"common_name": "k8s-node",

"country": "CN",

"organization": "od",

"organizational_unit": "ops",

"locality": "beijing",

"province": "beijing",

"names": [

"CN",

"beijing",

"beijing",

"od",

"ops",

"k8s-node"

]

},

"issuer": {

"common_name": "OldboyEdu",

"country": "CN",

"organization": "od",

"organizational_unit": "ops",

"locality": "beijing",

"province": "beijing",

"names": [

"CN",

"beijing",

"beijing",

"od",

"ops",

"OldboyEdu"

]

},

"serial_number": "618522899307354876284159939419899716688778468335",

"not_before": "2021-03-22T10:31:00Z",

"not_after": "2041-03-17T10:31:00Z",

"sigalg": "SHA256WithRSA",

"authority_key_id": "BE:4C:7A:A:2A:88:2E:C7:7B:F8:B:7D:B4:6F:AC:4C:66:AD:B9:26",

"subject_key_id": "4A:4D:5:8E:D7:B9:35:1B:8:70:FA:CB:71:BC:AC:A8:3A:AD:AC:B5",

"pem": "-----BEGIN CERTIFICATE-----\nMIIDwjCCAqqgAwIBAgIUbFeGqvmclfhq6J0WMrUUoa2tm+8wDQYJKoZIhvcNAQEL\nBQAwYDELMAkGA1UEBhMCQ04xEDAOBgNVBAgTB2JlaWppbmcxEDAOBgNVBAcTB2Jl\naWppbmcxCzAJBgNVBAoTAm9kMQwwCgYDVQQLEwNvcHMxEjAQBgNVBAMTCU9sZGJv\neUVkdTAeFw0yMTAzMjIxMDMxMDBaFw00MTAzMTcxMDMxMDBaMF8xCzAJBgNVBAYT\nAkNOMRAwDgYDVQQIEwdiZWlqaW5nMRAwDgYDVQQHEwdiZWlqaW5nMQswCQYDVQQK\nEwJvZDEMMAoGA1UECxMDb3BzMREwDwYDVQQDEwhrOHMtbm9kZTCCASIwDQYJKoZI\nhvcNAQEBBQADggEPADCCAQoCggEBAL+HGn4kcvGo8apA/ORNYKTG5tabrMkhegR5\nwTP9J+5Ijf0BZefTr98AZS6yU59011agi6JeXysaQRh0ZicC83niU1RjNS836V9p\nF0HxFGBki3iIUquxdcrSryqxC6O604xSd1Y7cbWHvkSgYEPTZX73X51QvXJERbvO\neIGgjGtZU2oblKMDyUcCzPgpU7wQi3a0kX4WSzVzgEsyvGZpiampQPypNWd9P0xw\nIPeWfKUsWwLnHn9earCeBDTAMnJuQab+zOfsTBdIeRUJD2EB2Fbn7ADoU7RLCOSO\nicL0pQClH604u05ZjlTLrryGQp8uVzOzAarAWKRWqWrSJZIl+mMCAwEAAaN1MHMw\nDgYDVR0PAQH/BAQDAgWgMBMGA1UdJQQMMAoGCCsGAQUFBwMCMAwGA1UdEwEB/wQC\nMAAwHQYDVR0OBBYEFEpNBY7XuTUbCHD6y3G8rKg6ray1MB8GA1UdIwQYMBaAFL5M\negoqiC7He/gLfbRvrExmrbkmMA0GCSqGSIb3DQEBCwUAA4IBAQBQlWShfrfg0e5P\n764yoqNamiU2YZDDGNtjAIaQfAsNMugADRAxVAeGV37RQF9+y34eUJeDNuIVyzY9\nDcJnf+BZju3DuJxB+TGWlk054DKAhCwqlXLevBevZ8Y0SA6SShEF1jOnvXYl1rqw\nAOTglTeSsQTaykXzEt7GLaQR1vMWW9TiaXXO5iuc0A+qPp3neVCAVf+9WKrlA8yn\n3a0QW7NifisQ0couCRofcMdNg98nPN+9fX1t4pu5dESWl398qTmmjNCvwYGHsX9M\nh5IyHWsyDMz+IOWzlkHsmGTa6VyK7ONHb0wuWMT6yg11UG23olVJX0Dt+Wo8VgoI\nw2agtfzx\n-----END CERTIFICATE-----\n"

}

[root@hdss7-200 ~]#
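Copying the base64 string by hand is error-prone, so the decoding step can be scripted. This is a minimal sketch, assuming the kubeconfig keeps `client-certificate-data:` and its value on one line, as in the file shown above. `kubeconfig_client_cert` is a hypothetical helper name, and the demo file uses a placeholder payload rather than a real certificate.

```shell
# Hypothetical helper: print the decoded value of the first
# client-certificate-data field found in the kubeconfig file "$1".
kubeconfig_client_cert() {
  awk '$1 == "client-certificate-data:" { print $2; exit }' "$1" | base64 -d
}

# Offline demo with a stand-in kubeconfig; the payload is a placeholder
# string, not a real certificate.
demo=$(mktemp)
printf 'users:\n- name: k8s-node\n  user:\n    client-certificate-data: %s\n' \
  "$(printf 'placeholder-pem' | base64)" > "$demo"
kubeconfig_client_cert "$demo"
rm -f "$demo"
```

Against the real file this would be `kubeconfig_client_cert kubelet.kubeconfig > /tmp/1.pem`, after which the PEM file can be inspected with cfssl-certinfo as above.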

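Chapter 1: Using the kubectl command-line tool in detail

There are three basic ways to manage core K8S resources:

- Imperative management: relies mainly on the command-line (CLI) tool

- Declarative management: relies mainly on unified resource manifests

- GUI management: relies mainly on a graphical interface (web page)

1. Imperative resource management

1. Managing namespaces

- List namespaces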

[root@hdss7-21 ~]# kubectl get namespace

NAME STATUS AGE

default Active 43h

kube-node-lease Active 43h

kube-public Active 43h

kube-system Active 43h

[root@hdss7-21 ~]# kubectl get ns

NAME STATUS AGE

default Active 43h

kube-node-lease Active 43h

kube-public Active 43h

kube-system Active 43h

[root@hdss7-21 ~]#

- List all resources in a namespace

[root@hdss7-21 ~]# kubectl get all -n default

NAME READY STATUS RESTARTS AGE

pod/nginx-ds-fw5mt 1/1 Running 0 16h

pod/nginx-ds-sjtwx 1/1 Running 0 16h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 192.168.0.1 <none> 443/TCP 43h

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/nginx-ds 2 2 2 2 2 <none> 16h

- List the pods in a namespace

[root@hdss7-21 ~]# kubectl get pods -n default

NAME READY STATUS RESTARTS AGE

nginx-ds-fw5mt 1/1 Running 0 16h

nginx-ds-sjtwx 1/1 Running 0 16h

[root@hdss7-21 ~]#

- Create a namespace

[root@hdss7-21 ~]# kubectl create namespace app

namespace/app created

[root@hdss7-21 ~]# kubectl get namespace

NAME STATUS AGE

app Active 7s

default Active 43h

kube-node-lease Active 43h

kube-public Active 43h

kube-system Active 43h

[root@hdss7-21 ~]#

- Delete a namespace

[root@hdss7-21 ~]# kubectl delete ns app

namespace "app" deleted

2. Managing deployment resources

- Create a deployment

[root@hdss7-21 ~]# kubectl create deployment nginx-dp --image=harbor.od.com/public/nginx:v1.7.9 -n kube-public

deployment.apps/nginx-dp created

- View the deployment

[root@hdss7-21 ~]# kubectl get deployment -n kube-public

NAME READY UP-TO-DATE AVAILABLE AGE

nginx-dp 1/1 1 1 2m2s

[root@hdss7-21 ~]#

- View the deployment with extended (wide) output

[root@hdss7-21 ~]# kubectl get deployment -n kube-public -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

nginx-dp 1/1 1 1 2m51s nginx harbor.od.com/public/nginx:v1.7.9 app=nginx-dp

[root@hdss7-21 ~]#

- Describe the deployment in detail

[root@hdss7-21 ~]# kubectl describe deployment -n kube-public

Name: nginx-dp

Namespace: kube-public

CreationTimestamp: Wed, 24 Mar 2021 15:37:46 +0800

Labels: app=nginx-dp

Annotations: deployment.kubernetes.io/revision: 1

Selector: app=nginx-dp

Replicas: 1 desired | 1 updated | 1 total | 1 available | 0 unavailable

StrategyType: RollingUpdate

MinReadySeconds: 0

RollingUpdateStrategy: 25% max unavailable, 25% max surge

Pod Template:

Labels: app=nginx-dp

Containers:

nginx:

Image: harbor.od.com/public/nginx:v1.7.9

Port: <none>

Host Port: <none>

Environment: <none>

Mounts: <none>

Volumes: <none>

Conditions:

Type Status Reason

---- ------ ------

Available True MinimumReplicasAvailable

Progressing True NewReplicaSetAvailable

OldReplicaSets: <none>

NewReplicaSet: nginx-dp-5dfc689474 (1/1 replicas created)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 4m46s deployment-controller Scaled up replica set nginx-dp-5dfc689474 to 1

[root@hdss7-21 ~]#

- List the pods

[root@hdss7-21 ~]# kubectl get pods -n kube-public

NAME READY STATUS RESTARTS AGE

nginx-dp-5dfc689474-7nttv 1/1 Running 0 5m38s

[root@hdss7-21 ~]# kubectl get pods -n kube-public -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-dp-5dfc689474-7nttv 1/1 Running 0 5m45s 172.7.21.3 hdss7-21.host.com <none> <none>

[root@hdss7-21 ~]#

- Enter a pod

[root@hdss7-21 ~]# kubectl exec -it nginx-dp-5dfc689474-7nttv /bin/bash -n kube-public

root@nginx-dp-5dfc689474-7nttv:/# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

8: eth0@if9: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP

link/ether 02:42:ac:07:15:03 brd ff:ff:ff:ff:ff:ff

inet 172.7.21.3/24 brd 172.7.21.255 scope global eth0

valid_lft forever preferred_lft forever

root@nginx-dp-5dfc689474-7nttv:/#

- Delete a pod (the deployment recreates it, which effectively restarts the pod)

[root@hdss7-21 ~]# kubectl get pods -n kube-public -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-dp-5dfc689474-7nttv 1/1 Running 0 22m 172.7.21.3 hdss7-21.host.com <none> <none>

[root@hdss7-21 ~]# kubectl delete pods nginx-dp-5dfc689474-7nttv -n kube-public

pod "nginx-dp-5dfc689474-7nttv" deleted

[root@hdss7-21 ~]# kubectl get pods -n kube-public -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-dp-5dfc689474-cz4p5 1/1 Running 0 8s 172.7.21.3 hdss7-21.host.com <none> <none>

[root@hdss7-21 ~]#

- Scale up the number of pods in the deployment

[root@hdss7-21 ~]# kubectl scale deployment nginx-dp --replicas=2 -n kube-public

deployment.extensions/nginx-dp scaled

[root@hdss7-21 ~]# kubectl get pods -n kube-public

NAME READY STATUS RESTARTS AGE

nginx-dp-5dfc689474-cz4p5 1/1 Running 0 2m1s

nginx-dp-5dfc689474-qrc7f 1/1 Running 0 5s

[root@hdss7-21 ~]#

- Scale down the number of pods in the deployment

[root@hdss7-21 ~]# kubectl scale deployment nginx-dp --replicas=1 -n kube-public

deployment.extensions/nginx-dp scaled

[root@hdss7-21 ~]# kubectl get pods -n kube-public

NAME READY STATUS RESTARTS AGE

nginx-dp-5dfc689474-cz4p5 1/1 Running 0 5m34s

nginx-dp-5dfc689474-qrc7f 0/1 Terminating 0 3m38s

[root@hdss7-21 ~]#

- Delete the deployment

[root@hdss7-21 ~]# kubectl get deployment -n kube-public

NAME READY UP-TO-DATE AVAILABLE AGE

nginx-dp 1/1 1 1 42m

[root@hdss7-21 ~]# kubectl delete deployment nginx-dp -n kube-public

deployment.extensions "nginx-dp" deleted

[root@hdss7-21 ~]#

3. Managing service resources

- Create a deployment

[root@hdss7-21 ~]# kubectl create deployment nginx-dp --image=harbor.od.com/public/nginx:v1.7.9 -n kube-public

deployment.apps/nginx-dp created

[root@hdss7-21 ~]# kubectl get all -n kube-public

NAME READY STATUS RESTARTS AGE

pod/nginx-dp-5dfc689474-95tfl 1/1 Running 0 13s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nginx-dp 1/1 1 1 13s

NAME DESIRED CURRENT READY AGE

replicaset.apps/nginx-dp-5dfc689474 1 1 1 13s

- Create a service

[root@hdss7-21 ~]# kubectl expose deployment nginx-dp --port=80 -n kube-public

service/nginx-dp exposed

[root@hdss7-21 ~]#

- View the service

[root@hdss7-21 ~]# kubectl get service -n kube-public

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

nginx-dp ClusterIP 192.168.189.118 <none> 80/TCP 46s

[root@hdss7-21 ~]#

- Scale up the deployment and watch the ipvs rules change

[root@hdss7-21 ~]# kubectl get service -n kube-public

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

nginx-dp ClusterIP 192.168.189.118 <none> 80/TCP 2m53s

[root@hdss7-21 ~]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.0.1:443 nq

-> 10.4.7.21:6443 Masq 1 0 0

-> 10.4.7.22:6443 Masq 1 0 0

TCP 192.168.189.118:80 nq

-> 172.7.21.3:80 Masq 1 0 0

[root@hdss7-21 ~]# kubectl scale deployment nginx-dp --replicas=2 -n kube-public

deployment.extensions/nginx-dp scaled

[root@hdss7-21 ~]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.0.1:443 nq

-> 10.4.7.21:6443 Masq 1 0 0

-> 10.4.7.22:6443 Masq 1 0 0

TCP 192.168.189.118:80 nq

-> 172.7.21.3:80 Masq 1 0 0

-> 172.7.22.3:80 Masq 1 0 0

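[root@hdss7-21 ~]#

The imperative approach has its limits; for example, it cannot create a service for a daemonset.

4. kubectl usage summary

Summary of imperative resource management:

- The only entry point for managing resources in a kubernetes cluster is the apiserver API, called through an appropriate client

- kubectl is the official CLI tool for talking to the apiserver. It organizes the commands typed on the command line and converts them into requests the apiserver understands, which makes it an effective way to manage all kinds of k8s resources.

- Full kubectl command reference

- kubectl --help

- http://docs.kubernetes.org.cn/

- Imperative resource management covers more than 90% of resource-management needs, but its drawbacks are also obvious

- Commands are long, complex, and hard to remember

- Some scenarios cannot be handled at all

- Creating, deleting, and querying resources is easy; modifying them is painful

# A limitation of the imperative approach: it cannot create a service for a daemonset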

[root@hdss7-21 ~]# kubectl get daemonset

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

nginx-ds 2 2 2 2 2 <none> 2d22h

[root@hdss7-21 ~]# kubectl expose daemonset nginx-ds --port=880

error: cannot expose a DaemonSet.extensions

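[root@hdss7-21 ~]#

2. Declarative resource management

- Declarative resource management relies on resource manifests (yaml/json)

- How to view a resource manifest

- ~]# kubectl get svc nginx-dp -o yaml -n kube-public

- Explaining a resource manifest

- ~]# kubectl explain service.metadata

- Creating a resource manifest

- ~]# vi /root/nginx-ds-svc.yaml

- Applying a resource manifest

- ~]# kubectl apply -f nginx-ds-svc.yaml

- Modifying a resource manifest and applying it

- Online modification

- Offline modification

- Deleting a resource manifest

1. Limitations of imperative resource management

- Commands are long, complex, and hard to remember

- Some scenarios cannot be handled at all

- Creating, deleting, and querying resources is easy; modifying them is painful

2. Viewing a resource manifest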

[root@hdss7-21 ~]# kubectl get svc nginx-dp -o yaml -n kube-public

apiVersion: v1
kind: Service
metadata:
  creationTimestamp: "2021-03-24T09:15:04Z"
  labels:
    app: nginx-dp
  name: nginx-dp
  namespace: kube-public
  resourceVersion: "97570"
  selfLink: /api/v1/namespaces/kube-public/services/nginx-dp
  uid: 541a02df-b003-47d1-ad0f-d00afaf91551
spec:
  clusterIP: 192.168.189.118
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx-dp
  sessionAffinity: None
  type: ClusterIP
status:
  loadBalancer: {}

[root@hdss7-21 ~]#

3. Explaining a resource manifest

[root@hdss7-21 ~]# kubectl explain service.metadata

KIND: Service

VERSION: v1

RESOURCE: metadata <Object>

.................

[root@hdss7-21 ~]# kubectl explain service.kind

[root@hdss7-21 ~]# kubectl explain service.spec

4. Creating a resource manifest

[root@hdss7-21 ~]# more /root/nginx-ds-svc.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    app: nginx-ds
  name: nginx-ds
  namespace: default
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx-ds
  sessionAffinity: None
  type: ClusterIP

[root@hdss7-21 ~]#

5. Applying a resource manifest

[root@hdss7-21 ~]# kubectl apply -f nginx-ds-svc.yaml

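service/nginx-ds created

6. Modifying a resource manifest and applying it

- Offline modification

Edit nginx-ds-svc.yaml, then run kubectl apply -f nginx-ds-svc.yaml to make the change take effect.

- Online modification

Run kubectl edit service nginx-ds to edit the live resource manifest directly; the change takes effect when you save.

7. Deleting a resource manifest

- Imperative deletion

kubectl delete service nginx-ds -n default

- Declarative deletion

kubectl delete -f nginx-ds-svc.yaml

8. Summary of declarative resource management:

- Declarative resource management relies on unified resource manifest files

- Resources are defined ahead of time in a resource manifest, which is then applied to the K8S cluster with a kubectl command

- Syntax: kubectl create/apply/delete -f /path/yaml

- How to learn resource manifests:

- Read plenty of manifests written by others (especially the official ones) until you can understand them

- Be able to adapt an existing file to your needs

- When something is unclear, look it up with kubectl explain ...

- As a beginner, avoid writing manifests from scratch out of thin air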
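The "define first, then apply" workflow summarized above can be sketched as a small template function. This is only an illustration: `render_service` is a hypothetical helper, and it assumes the target pods carry the label `app=<name>`, as the nginx-ds pods do in the manifest used in this chapter.

```shell
# Hypothetical helper: print a ClusterIP Service manifest for workloads
# labelled app=<name>. Arguments: name, port, optional namespace.
render_service() {
  local name=$1 port=$2 namespace=${3:-default}
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  labels:
    app: ${name}
  name: ${name}
  namespace: ${namespace}
spec:
  ports:
  - port: ${port}
    protocol: TCP
    targetPort: ${port}
  selector:
    app: ${name}
  type: ClusterIP
EOF
}

# Print the manifest for the nginx-ds daemonset; nothing is sent to the cluster.
render_service nginx-ds 80
```

Because the manifest is written to stdout, it can be reviewed first and then applied with `render_service nginx-ds 80 | kubectl apply -f -`.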

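Chapter 2: flannel, a CNI network plugin for kubernetes

kubernetes defines a network model but leaves its implementation to network plugins. The main job of a CNI network plugin is to let pods communicate across hosts.

Common CNI network plugins:

0. flannel network models

- host-gw model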

{"Network": "172.7.0.0/16", "Backend": {"Type": "host-gw"}}

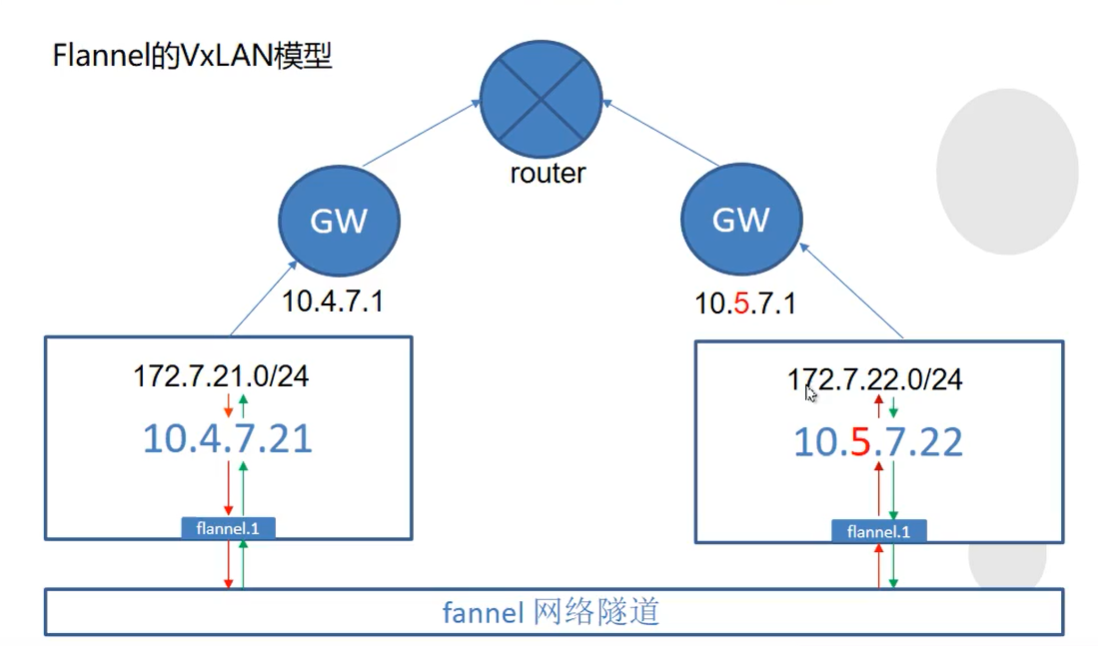

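# Used mainly for pod-to-pod traffic when the hosts are on the same network segment, that is, when no traffic crosses segments. flannel's job here is simple: on each host it adds a route to every other host's pod subnet, using that host as the gateway. There is no encapsulation overhead, so it is very efficient.

- vxlan tunnel model

{"Network": "172.7.0.0/16", "Backend": {"Type": "vxlan"}}

# Used mainly for pod-to-pod traffic when the hosts are on different network segments, that is, when traffic crosses segments.

# Here flannel creates a virtual NIC named flannel.1 on each host and uses it to build VXLAN tunnels to the other hosts. Cross-host traffic has to be encapsulated and decapsulated by the flannel.1 device, so it is less efficient.

- Mixed model

{"Network": "172.7.0.0/16", "Backend": {"Type": "vxlan", "DirectRouting": true}}

# When some hosts share a network segment and others do not, choose the mixed mode: flannel looks at the segments of the two endpoints and automatically chooses between gateway routing and a VXLAN tunnel.

1. Cluster planning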

| Hostname | Role | IP |
|---|---|---|
| HDSS7-21.host.com | flannel | 10.4.7.21 |
| HDSS7-22.host.com | flannel | 10.4.7.22 |

Note: this guide uses HDSS7-21.host.com as the example; the other worker node is installed and deployed in the same way.
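As a rough illustration of what the host-gw model amounts to on this two-node plan: each node needs one route per peer, pointing the peer's pod subnet at the peer's own IP. flannel adds these routes itself, so the sketch below only prints the equivalent command and does not run it. `peer_route` is a hypothetical helper, and it assumes this guide's conventions: node 10.4.7.N owns pod subnet 172.7.N.0/24, and the interface is eth0.

```shell
# Hypothetical helper: given a peer node IP such as 10.4.7.22, print the
# route a host-gw setup needs in order to reach that peer's pod subnet.
# Assumes node 10.4.7.N owns pod subnet 172.7.N.0/24 and traffic uses eth0.
peer_route() {
  local node_ip=$1
  local last_octet=${node_ip##*.}
  echo "ip route add 172.7.${last_octet}.0/24 via ${node_ip} dev eth0"
}

# On hdss7-21 the only peer is hdss7-22.
peer_route 10.4.7.22
```

Once flanneld is running, comparing this with the output of `ip route` on each node is a quick way to confirm that the backend is doing what is expected.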

2. Download the software, unpack it, and create a symlink

[root@hdss7-21 ~]# cd /opt/src/

[root@hdss7-21 src]# wget https://github.com/coreos/flannel/releases/download/v0.11.0/flannel-v0.11.0-linux-amd64.tar.gz

[root@hdss7-21 src]# mkdir /opt/flannel-v0.11.0

[root@hdss7-21 src]# tar xf flannel-v0.11.0-linux-amd64.tar.gz -C /opt/flannel-v0.11.0/

[root@hdss7-21 src]# ln -s /opt/flannel-v0.11.0/ /opt/flannel

3. Copy the certificates

[root@hdss7-21 ~]# mkdir /opt/flannel/cert/

[root@hdss7-21 ~]# cd /opt/flannel/cert/

[root@hdss7-21 cert]# scp 10.4.7.200:/opt/certs/ca.pem .

[root@hdss7-21 cert]# scp 10.4.7.200:/opt/certs/client.pem .

[root@hdss7-21 cert]# scp 10.4.7.200:/opt/certs/client-key.pem .

[root@hdss7-21 cert]# ls

ca.pem client-key.pem client.pem

[root@hdss7-21 cert]#

4. Configure etcd: add the host-gw backend (run on one worker node only)

[root@hdss7-21 flannel]# cd /opt/etcd

[root@hdss7-21 etcd]# ./etcdctl set /coreos.com/network/config '{"Network": "172.7.0.0/16", "Backend": {"Type": "host-gw"}}'

{"Network": "172.7.0.0/16", "Backend": {"Type": "host-gw"}}

5. Configure the subnet information

[root@hdss7-21 flannel]# pwd

/opt/flannel

[root@hdss7-21 flannel]# vim subnet.env

[root@hdss7-21 flannel]# more subnet.env

FLANNEL_NETWORK=172.7.0.0/16

FLANNEL_SUBNET=172.7.21.1/24

FLANNEL_MTU=1500

FLANNEL_IPMASQ=false

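[root@hdss7-21 flannel]#

Note: the configuration differs slightly on each host in the flannel cluster; remember to change it when deploying the other nodes.

(For example, on hdss7-22: FLANNEL_SUBNET=172.7.22.1/24)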
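Since only FLANNEL_SUBNET changes between nodes, the file can be generated from the node IP instead of edited by hand. This is a minimal sketch with a hypothetical helper name, `render_subnet_env`, and it assumes the convention used in this guide that node 10.4.7.N gets the pod subnet 172.7.N.1/24.

```shell
# Hypothetical helper: print subnet.env for the node whose IP is "$1".
# Assumes node 10.4.7.N is assigned FLANNEL_SUBNET=172.7.N.1/24.
render_subnet_env() {
  local node_ip=$1
  local last_octet=${node_ip##*.}
  cat <<EOF
FLANNEL_NETWORK=172.7.0.0/16
FLANNEL_SUBNET=172.7.${last_octet}.1/24
FLANNEL_MTU=1500
FLANNEL_IPMASQ=false
EOF
}

# Print the file content for hdss7-22; nothing is written to disk.
render_subnet_env 10.4.7.22
```

On a node it could be redirected into place with `render_subnet_env 10.4.7.22 > /opt/flannel/subnet.env`.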

6. Create the startup script

/opt/flannel/flanneld.sh

#!/bin/sh

./flanneld \

--public-ip=10.4.7.21 \

--etcd-endpoints=https://10.4.7.12:2379,https://10.4.7.21:2379,https://10.4.7.22:2379 \

--etcd-keyfile=./cert/client-key.pem \

--etcd-certfile=./cert/client.pem \

--etcd-cafile=./cert/ca.pem \

--iface=eth0 \

--subnet-file=./subnet.env \

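--healthz-port=2401

Note: the startup script differs slightly on each host in the flannel cluster (for example, --public-ip); remember to change it when deploying the other nodes.

7. Check the configuration and permissions, and create the log directory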

[root@hdss7-21 flannel]# chmod +x /opt/flannel/flanneld.sh

[root@hdss7-21 flannel]# mkdir -p /data/logs/flanneld

8. Create the supervisor configuration

/etc/supervisord.d/flannel.ini

[program:flanneld-7-21]

command=/opt/flannel/flanneld.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/flannel ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

killasgroup=true ; kill all process in a group

stopasgroup=true ; stop all process in a group

stdout_logfile=/data/logs/flanneld/flanneld.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)
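Step 7 asks for the script to be executable and the log directory to exist before supervisor starts the program. A minimal sketch, assuming the ini text has been read into a string, that extracts the paths worth checking; `paths_to_check` and `SAMPLE_INI` are hypothetical names, and the sample is a shortened copy of the configuration above.

```python
import configparser
import posixpath

# Shortened copy of /etc/supervisord.d/flannel.ini, for illustration only
SAMPLE_INI = """\
[program:flanneld-7-21]
command=/opt/flannel/flanneld.sh ; the program
directory=/opt/flannel ; working directory
stdout_logfile=/data/logs/flanneld/flanneld.stdout.log ; stdout log path
"""


def paths_to_check(ini_text):
    """Map each supervisor program to its startup script and log directory."""
    parser = configparser.ConfigParser(
        inline_comment_prefixes=(";",),  # supervisor-style trailing comments
        interpolation=None,
    )
    parser.read_string(ini_text)
    result = {}
    for section in parser.sections():
        if not section.startswith("program:"):
            continue
        program = parser[section]
        result[section.split(":", 1)[1]] = {
            "script": program["command"].split()[0],
            "log_dir": posixpath.dirname(program["stdout_logfile"]),
        }
    return result


if __name__ == "__main__":
    print(paths_to_check(SAMPLE_INI))
```

The returned script path is the one that needs `chmod +x`, and the returned directory is the one that `mkdir -p` must create.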

9. Start the service and check it

[root@hdss7-21 ~]# supervisorctl update

flanneld-7-21: added process group

[root@hdss7-21 ~]# supervisorctl status

etcd-server-7-21 RUNNING pid 99428, uptime 3 days, 4:00:24

flanneld-7-21 RUNNING pid 109551, uptime 0:24:51

10. Install and deploy on the other compute nodes

[root@hdss7-22 flannel]# supervisorctl update

flanneld-7-22: added process group

[root@hdss7-22 flannel]#

[root@hdss7-22 flannel]# supervisorctl status

etcd-server-7-22 RUNNING pid 21749, uptime 1 day, 0:30:30

flanneld-7-22 RUNNING pid 103289, uptime 0:11:31

kube-apiserver-7-22 RUNNING pid 20968, uptime 1 day, 0:41:39

kube-controller-manager-7-22 RUNNING pid 20965, uptime 1 day, 0:41:39

kube-kubelet-7-22 RUNNING pid 21098, uptime 1 day, 0:32:39

kube-proxy-7-22 RUNNING pid 70141, uptime 20:24:01

kube-scheduler-7-22 RUNNING pid 20966, uptime 1 day, 0:41:39

[root@hdss7-22 flannel]#

11. Verify pod network connectivity

[root@hdss7-21 ~]# ping 172.7.22.2

PING 172.7.22.2 (172.7.22.2) 56(84) bytes of data.

64 bytes from 172.7.22.2: icmp_seq=1671 ttl=63 time=10.4 ms

64 bytes from 172.7.22.2: icmp_seq=1672 ttl=63 time=0.425 ms

64 bytes from 172.7.22.2: icmp_seq=1673 ttl=63 time=0.634 ms

64 bytes from 172.7.22.2: icmp_seq=1674 ttl=63 time=0.582 ms

64 bytes from 172.7.22.2: icmp_seq=1675 ttl=63 time=0.539 ms

64 bytes from 172.7.22.2: icmp_seq=1676 ttl=63 time=0.394 ms

64 bytes from 172.7.22.2: icmp_seq=1677 ttl=63 time=0.789 ms

64 bytes from 172.7.22.2: icmp_seq=1678 ttl=63 time=0.506 ms

[root@hdss7-22 ~]# ping 172.7.21.2

PING 172.7.21.2 (172.7.21.2) 56(84) bytes of data.

64 bytes from 172.7.21.2: icmp_seq=1663 ttl=63 time=1.24 ms

64 bytes from 172.7.21.2: icmp_seq=1664 ttl=63 time=0.497 ms

64 bytes from 172.7.21.2: icmp_seq=1665 ttl=63 time=0.696 ms

Why can the containers 172.7.22.2 and 172.7.21.2 talk to each other?

[root@hdss7-21 ~]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 10.4.7.254 0.0.0.0 UG 0 0 0 eth0

10.4.7.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

169.254.0.0 0.0.0.0 255.255.0.0 U 1002 0 0 eth0

172.7.21.0 0.0.0.0 255.255.255.0 U 0 0 0 docker0

172.7.22.0 10.4.7.22 255.255.255.0 UG 0 0 0 eth0

[root@hdss7-21 ~]#

[root@hdss7-22 flannel]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 10.4.7.254 0.0.0.0 UG 0 0 0 eth0

10.4.7.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

169.254.0.0 0.0.0.0 255.255.0.0 U 1002 0 0 eth0

172.7.21.0 10.4.7.21 255.255.255.0 UG 0 0 0 eth0

172.7.22.0 0.0.0.0 255.255.255.0 U 0 0 0 docker0

[root@hdss7-22 flannel]#

In essence, flannel has done the equivalent of:

[root@hdss7-21 ~]# route add -net 172.7.22.0/24 gw 10.4.7.22

[root@hdss7-22 ~]# route add -net 172.7.21.0/24 gw 10.4.7.21

This is flannel's host-gw model.
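To see why that single route is enough, the kernel's choice of next hop can be modelled as a longest-prefix match over the routing table printed above. The sketch below copies the `route -n` output of hdss7-21 into a list; the names `ROUTES_HDSS7_21` and `lookup` are hypothetical and the code only illustrates the lookup rule, not the kernel's implementation.

```python
import ipaddress

# (destination, gateway or None for directly connected, interface),
# copied from the `route -n` output of hdss7-21
ROUTES_HDSS7_21 = [
    ("0.0.0.0/0", "10.4.7.254", "eth0"),
    ("10.4.7.0/24", None, "eth0"),
    ("169.254.0.0/16", None, "eth0"),
    ("172.7.21.0/24", None, "docker0"),
    ("172.7.22.0/24", "10.4.7.22", "eth0"),
]


def lookup(dest, routes=ROUTES_HDSS7_21):
    """Return (gateway, interface) of the most specific route matching dest."""
    addr = ipaddress.ip_address(dest)
    best = None
    for cidr, gateway, dev in routes:
        net = ipaddress.ip_network(cidr)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, gateway, dev)
    return best[1], best[2]


if __name__ == "__main__":
    print(lookup("172.7.22.2"))  # pod on the other host
    print(lookup("172.7.21.2"))  # local pod
```

A packet for a pod on hdss7-22 is handed to 10.4.7.22 over eth0, while a packet for a local pod stays on docker0; no encapsulation is involved, which is why host-gw requires the hosts to share a layer-2 network.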

Appendix: other flannel network models

- VxLAN model:

'{"Network": "172.6.0.0/16", "Backend": {"Type": "VxLAN"}}'

1. Switching to the VxLAN model

1.1 Tear down the existing host-gw mode

1.1.1 Check flanneld's current working mode

[root@hdss7-21 ~]# cd /opt/etcd

[root@hdss7-21 etcd]# ./etcdctl get /coreos.com/network/config

{"Network": "172.7.0.0/16", "Backend": {"Type": "host-gw"}}

[root@hdss7-21 etcd]#

1.1.2 Stop flanneld

[root@hdss7-21 flannel]# supervisorctl stop flanneld-7-21

flanneld-7-21: stopped

[root@hdss7-22 flannel]# supervisorctl stop flanneld-7-22

flanneld-7-22: stopped

1.1.3 Confirm that it has stopped

[root@hdss7-21 flannel]# ps -aux | grep flanneld

root 12784 0.0 0.0 112660 964 pts/0 S+ 10:30 0:00 grep --color=auto flanneld

[root@hdss7-22 flannel]# ps -aux | grep flanneld

root 12379 0.0 0.0 112660 972 pts/0 S+ 10:31 0:00 grep --color=auto flanneld

1.1.4 Remove the host-gw static routes:

[root@hdss7-21 flannel]# route del -net 172.7.22.0/24 gw 10.4.7.22 dev eth0 # how to delete the route

[root@hdss7-22 flannel]# route del -net 172.7.21.0/24 gw 10.4.7.21 dev eth0 # how to delete the route

1.1.5 Delete the host-gw model

[root@hdss7-22 flannel]# cd /opt/etcd

[root@hdss7-22 etcd]# ./etcdctl rm /coreos.com/network/config

1.1.6 Set the new mode to VxLAN

[root@hdss7-22 etcd]# ./etcdctl set /coreos.com/network/config '{"Network": "172.6.0.0/16", "Backend": {"Type": "VxLAN"}}'

1.1.7 Check the mode

[root@hdss7-22 etcd]# ./etcdctl get /coreos.com/network/config

{"Network": "172.6.0.0/16", "Backend": {"Type": "VxLAN"}}
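The value stored under /coreos.com/network/config is plain JSON, so the backend variants used in this section can be produced by one small helper instead of being typed by hand. A minimal sketch; `flannel_config` is a hypothetical name, and the key spellings simply follow the ones written in these notes.

```python
import json


def flannel_config(network, backend_type, direct_routing=False):
    """Build the JSON string to store under /coreos.com/network/config."""
    backend = {"Type": backend_type}
    if direct_routing:
        # Key spelled as in these notes; only meaningful for the VxLAN backend
        backend["Directrouting"] = True
    return json.dumps({"Network": network, "Backend": backend})


if __name__ == "__main__":
    print(flannel_config("172.7.0.0/16", "host-gw"))
    print(flannel_config("172.6.0.0/16", "VxLAN"))
    print(flannel_config("172.6.0.0/16", "VxLAN", direct_routing=True))
```

Building the string with `json.dumps` avoids quoting mistakes when the value is later passed to `etcdctl set` inside single quotes.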

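1.1.8 Restart the flanneld service

1.1.9 Check the ifconfig output

1.1.10 Check the static routes

- Directrouting model (direct routing), which chooses automatically: hosts on the same layer-2 network are routed directly as in host-gw, and VxLAN encapsulation is used otherwise

'{"Network": "172.6.0.0/16", "Backend": {"Type": "VxLAN","Directrouting": true}}'

12. Optimize the iptables rules on every node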

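1. Why the optimization is needed

We are using the host-gw network model, which does nothing more than add a route on each host to the pod network of every other host.

So we would expect:

1. Traffic from the outside network to a pod on host B arrives with the external IP as its source.

2. Traffic from host A to a pod on host B arrives with host A's IP as its source.

3. Traffic from pod POD-A01 on host A to a pod on host B arrives with POD-A01's container IP as its source.

Picture two switches on different subnets behind one router, with their devices talking to each other through the router (gw).

Unfortunately:

- The first two hold without question.

- The third should hold as far as routing is concerned, but in practice it does not.

The reason:

- Docker relies on iptables for isolation and communication between container networks.

- So even though our K8S cluster schedules with ipvs, iptables rules are still present on the hosts.

- The rule docker generates by default works like this: before a packet leaves the host, check whether its outgoing device is the local docker0 device (the container network); if it is not, apply SNAT before sending it out. The actual rule:

[root@hdss7-21 ~]# iptables-save |grep -i postrouting|grep docker0

-A POSTROUTING -s 172.7.21.0/24 ! -o docker0 -j MASQUERADE

[root@hdss7-21 ~]#

- The fix is to modify this iptables rule and add a destination filter, so that traffic destined for the cluster's pod network (172.7.0.0/16) is not SNATed.

Why optimize? Let's look at the following case: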

1. Build the nginx image

[root@hdss7-200 ~]# docker run --rm -it nginx bash

root@c24c96f988d3:/# apt-get update && apt-get install curl -y

[root@hdss7-200 ~]# docker commit -p bf26a5f92a91 harbor.od.com/public/nginx:curl

[root@hdss7-200 ~]# docker push harbor.od.com/public/nginx:curl

2. Create nginx-ds.yaml

[root@hdss7-21 ~]# cat nginx-ds.yaml

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: nginx-ds
spec:
  template:
    metadata:
      labels:
        app: nginx-ds
    spec:
      containers:
      - name: my-nginx
        image: harbor.od.com/public/nginx:curl
        command: ["nginx","-g","daemon off;"]
        ports:
        - containerPort: 80

[root@hdss7-21 ~]#

3. Apply the resource manifest

[root@hdss7-21 ~]# kubectl apply -f nginx-ds.yaml

4. Notice that pod-to-pod requests to nginx are logged with the host's IP (after NAT) rather than the pod IP

[root@hdss7-21 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-ds-b4mgw 1/1 Running 0 3m19s 172.7.22.2 hdss7-22.host.com <none> <none>

nginx-ds-sk79f 1/1 Running 0 3m19s 172.7.21.2 hdss7-21.host.com <none> <none>

[root@hdss7-21 ~]# kubectl exec -it nginx-ds-sk79f /bin/sh

# curl 172.7.22.2

[root@hdss7-22 ~]# kubectl log -f nginx-ds-b4mgw

log is DEPRECATED and will be removed in a future version. Use logs instead.

10.4.7.21 - - [24/Mar/2021:13:35:24 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.64.0" "-"

10.4.7.21 - - [24/Mar/2021:13:35:44 +0000] "HEAD / HTTP/1.1" 200 0 "-" "curl/7.64.0" "-"

10.4.7.21 - - [24/Mar/2021:13:36:24 +0000] "HEAD / HTTP/1.1" 200 0 "-" "curl/7.64.0" "-"

5. Optimize: stop source-address NAT for pod-to-pod traffic

Install iptables-services on every machine that needs the optimization

~]# yum install iptables-services -y

~]# systemctl start iptables.service

~]# systemctl enable iptables.service

- Optimize hdss7-21

[root@hdss7-21 ~]# iptables-save|grep -i postrouting

:POSTROUTING ACCEPT [2:120]

:KUBE-POSTROUTING - [0:0]

-A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING

-A POSTROUTING -s 172.7.21.0/24 ! -o docker0 -j MASQUERADE

-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -m mark --mark 0x4000/0x4000 -j MASQUERADE

-A KUBE-POSTROUTING -m comment --comment "Kubernetes endpoints dst ip:port, source ip for solving hairpin purpose" -m set --match-set KUBE-LOOP-BACK dst,dst,src -j MASQUERADE

[root@hdss7-21 ~]# iptables -t nat -D POSTROUTING -s 172.7.21.0/24 ! -o docker0 -j MASQUERADE

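[root@hdss7-21 ~]# iptables -t nat -I POSTROUTING -s 172.7.21.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE

On host 10.4.7.21, SNAT is now applied only when the source is a docker IP in 172.7.21.0/24, the destination IP is not in 172.7.0.0/16, and the packet does not leave through the docker0 bridge.

- Optimize hdss7-22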

[root@hdss7-22 ~]# iptables-save|grep -i postrouting

:POSTROUTING ACCEPT [12:720]

:KUBE-POSTROUTING - [0:0]

-A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING

-A POSTROUTING -s 172.7.22.0/24 ! -o docker0 -j MASQUERADE

-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -m mark --mark 0x4000/0x4000 -j MASQUERADE

-A KUBE-POSTROUTING -m comment --comment "Kubernetes endpoints dst ip:port, source ip for solving hairpin purpose" -m set --match-set KUBE-LOOP-BACK dst,dst,src -j MASQUERADE

[root@hdss7-22 ~]# iptables -t nat -D POSTROUTING -s 172.7.22.0/24 ! -o docker0 -j MASQUERADE

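[root@hdss7-22 ~]# iptables -t nat -I POSTROUTING -s 172.7.22.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE

On host 10.4.7.22, SNAT is now applied only when the source is a docker IP in 172.7.22.0/24, the destination IP is not in 172.7.0.0/16, and the packet does not leave through the docker0 bridge.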
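The difference between the default and the optimized POSTROUTING rule can be written out as two predicates. This is a simplified model of the match conditions only (source subnet, destination, outgoing device) for host hdss7-21; the function names are hypothetical and nothing here talks to iptables.

```python
import ipaddress

POD_SUBNET = ipaddress.ip_network("172.7.21.0/24")   # pods on hdss7-21
POD_NETWORK = ipaddress.ip_network("172.7.0.0/16")   # all pods in the cluster


def masquerade_default(src, dst, out_dev):
    """-s 172.7.21.0/24 ! -o docker0 -j MASQUERADE"""
    return ipaddress.ip_address(src) in POD_SUBNET and out_dev != "docker0"


def masquerade_optimized(src, dst, out_dev):
    """-s 172.7.21.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE"""
    return (
        ipaddress.ip_address(src) in POD_SUBNET
        and ipaddress.ip_address(dst) not in POD_NETWORK
        and out_dev != "docker0"
    )


if __name__ == "__main__":
    # Pod on hdss7-21 calling a pod on hdss7-22
    print(masquerade_default("172.7.21.2", "172.7.22.2", "eth0"))
    print(masquerade_optimized("172.7.21.2", "172.7.22.2", "eth0"))
    # Pod on hdss7-21 calling a host outside the pod network
    print(masquerade_optimized("172.7.21.2", "10.4.7.200", "eth0"))
```

With the default rule the pod-to-pod request is rewritten to the host address, which is why nginx logged 10.4.7.21; with the extra destination test only traffic leaving the pod network is still SNATed.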

6. Delete the default REJECT rules

[root@hdss7-21 ~]# iptables-save | grep -i reject

-A INPUT -j REJECT --reject-with icmp-host-prohibited

-A FORWARD -j REJECT --reject-with icmp-host-prohibited

[root@hdss7-21 ~]# iptables -t filter -D INPUT -j REJECT --reject-with icmp-host-prohibited

[root@hdss7-21 ~]# iptables -t filter -D FORWARD -j REJECT --reject-with icmp-host-prohibited

[root@hdss7-21 ~]# iptables-save | grep -i reject

[root@hdss7-21 ~]#

[root@hdss7-22 ~]# iptables-save | grep -i reject

-A INPUT -j REJECT --reject-with icmp-host-prohibited

-A FORWARD -j REJECT --reject-with icmp-host-prohibited

[root@hdss7-22 ~]# iptables -t filter -D INPUT -j REJECT --reject-with icmp-host-prohibited

[root@hdss7-22 ~]# iptables -t filter -D FORWARD -j REJECT --reject-with icmp-host-prohibited

[root@hdss7-22 ~]# iptables-save | grep -i reject

7. Save the iptables rules on the compute nodes

[root@hdss7-21 ~]# iptables-save > /etc/sysconfig/iptables

[root@hdss7-22 ~]# iptables-save > /etc/sysconfig/iptables

# Rules saved as above were lost after a reboot in this setup; the method below is recommended

[root@hdss7-21 ~]# service iptables save

[root@hdss7-22 ~]# service iptables save

# Takes effect permanently

8. With the SNAT rule optimized, pod-to-pod traffic between compute nodes is no longer source-NATed

[root@hdss7-21 ~]# kubectl exec -it nginx-ds-sk79f /bin/sh

# curl 172.7.22.2

# curl 172.7.22.2

# curl 172.7.22.2

[root@hdss7-22 ~]# kubectl log -f nginx-ds-b4mgw

log is DEPRECATED and will be removed in a future version. Use logs instead.

10.4.7.21 - - [24/Mar/2021:13:35:24 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.64.0" "-"

10.4.7.21 - - [24/Mar/2021:13:35:44 +0000] "HEAD / HTTP/1.1" 200 0 "-" "curl/7.64.0" "-"

10.4.7.21 - - [24/Mar/2021:13:36:24 +0000] "HEAD / HTTP/1.1" 200 0 "-" "curl/7.64.0" "-"

172.7.21.2 - - [24/Mar/2021:13:54:13 +0000] "HEAD / HTTP/1.1" 200 0 "-" "curl/7.64.0" "-"

172.7.21.2 - - [24/Mar/2021:13:54:16 +0000] "HEAD / HTTP/1.1" 200 0 "-" "curl/7.64.0" "-"

Chapter 3: Kubernetes service discovery plugin - coredns

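- Put simply, service discovery is the process by which services (applications) locate one another.

- Service discovery is not unique to the cloud era; traditional monolithic architectures use it too. It matters most when:

  - services (applications) are highly dynamic

  - services (applications) are updated and released frequently

  - services (applications) scale automatically

- In a K8S cluster pod IPs change constantly, so how do we keep a stable handle on something that keeps changing?

  - The Service resource is abstracted out; it associates a group of pods through a label selector.

  - The cluster network is abstracted out; a relatively fixed "cluster IP" gives the service a fixed access point.

- How, then, can the "name" of a Service resource be associated automatically with its "cluster network IP", so that the cluster discovers the service on its own?

  - Consider the traditional DNS model: hdss7-21.host.com -> 10.4.7.21

  - Can the same model be built inside K8S: nginx-ds -> 192.168.0.5

- K8S does service discovery through DNS.

- Plugins that implement DNS in K8S:

  - kube-dns: kubernetes-v1.2 through kubernetes-v1.10

  - coredns: kubernetes-v1.11 to the present

- Note:

  - DNS in K8S is not a cure-all. It should only be responsible for automatically maintaining the mapping "service name" -> "cluster network IP".

- Service resources solve pod discovery:

  - To cope with changing pod addresses, deploy a Service resource that proxies the backend pods; exposing the Service's fixed address (cluster IP) hides the IP changes caused by pod churn.

- What about discovering the Service itself?

  - A Service provides a stable cluster IP for clients to use, but:

    1. IP addresses are hard to remember.

    2. Service resources may also be destroyed and recreated.

    3. Could the Service name be mapped to the cluster network IP that the Service exposes?

    4. As with domain names and IPs, remembering the service name would then be enough to find the service IP.

    5. That is exactly automatic discovery of Service resources.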

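1. Deploy an internal HTTP service for K8S resource manifests

On the ops host HDSS7-200.host.com, configure an nginx virtual host that serves as the single access point for k8s resource manifests.

- Configure nginx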

/etc/nginx/conf.d/k8s-yaml.od.com.conf

[root@hdss7-200 ~]# vim /etc/nginx/conf.d/k8s-yaml.od.com.conf

server {

listen 80;

server_name k8s-yaml.od.com;

location / {

autoindex on;

default_type text/plain;

root /data/k8s-yaml;

}

}

[root@hdss7-200 ~]# mkdir -p /data/k8s-yaml/coredns

[root@hdss7-200 ~]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

[root@hdss7-200 ~]# nginx -s reload

- Configure internal DNS resolution

On host hdss7-11.host.com

[root@hdss7-11 ~]# vim /var/named/od.com.zone

2021020203 ; serial

k8s-yaml A 10.4.7.200

# Roll the serial number forward by one

[root@hdss7-11 ~]# named-checkconf

[root@hdss7-11 ~]# systemctl restart named

[root@hdss7-11 ~]# dig -t A k8s-yaml.od.com @10.4.7.11 +short

10.4.7.200

# Open in a browser

http://k8s-yaml.od.com/coredns/

From now on, all resource manifests are kept under /data/k8s-yaml on the ops host.
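Every edit to od.com.zone above has to roll the SOA serial forward, otherwise the change may not be picked up. A minimal sketch of that step, assuming the serial follows the common YYYYMMDDNN layout that 2021020203 appears to use; `next_serial` is a hypothetical helper and the layout is an assumption, not something the zone file enforces.

```python
def next_serial(serial):
    """Increment the two-digit revision of a YYYYMMDDNN zone serial."""
    if len(serial) != 10 or not serial.isdigit():
        raise ValueError(f"expected a 10-digit YYYYMMDDNN serial, got {serial!r}")
    revision = int(serial[8:])
    if revision == 99:
        raise ValueError("revision counter exhausted for this date")
    return f"{serial[:8]}{revision + 1:02d}"


if __name__ == "__main__":
    print(next_serial("2021020203"))
```

The only hard requirement is that the new serial is larger than the old one; keeping the date prefix is a convention that makes the history of edits readable.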

2. Deploy coredns

1. Prepare the coredns-v1.6.1 image

[root@hdss7-200 coredns]# docker pull coredns/coredns:1.6.1

[root@hdss7-200 coredns]# docker images|grep coredns

coredns/coredns 1.6.1 c0f6e815079e 18 months ago 42.2MB

[root@hdss7-200 coredns]# docker tag c0f6e815079e harbor.od.com/public/coredns:v1.6.1

[root@hdss7-200 coredns]# docker push harbor.od.com/public/coredns:v1.6.1

The push refers to repository [harbor.od.com/public/coredns]

da1ec456edc8: Pushed

225df95e717c: Pushed

v1.6.1: digest: sha256:c7bf0ce4123212c87db74050d4cbab77d8f7e0b49c041e894a35ef15827cf938 size: 739

[root@hdss7-200 coredns]#

2. Prepare the resource manifests

- RBAC

[root@hdss7-200 ~]# cd /data/k8s-yaml/coredns/

[root@hdss7-200 coredns]# vi /data/k8s-yaml/coredns/rbac.yaml

[root@hdss7-200 coredns]# more /data/k8s-yaml/coredns/rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: Reconcile
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: EnsureExists
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system

[root@hdss7-200 coredns]#

- ConfigMap

[root@hdss7-200 coredns]# more cm.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        log
        health
        ready
        kubernetes cluster.local 192.168.0.0/16
        forward . 10.4.7.11
        cache 30
        loop
        reload
        loadbalance
    }

[root@hdss7-200 coredns]#

- Deployment

[root@hdss7-200 coredns]# more dp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: coredns
    kubernetes.io/name: "CoreDNS"
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: coredns
  template:
    metadata:
      labels:
        k8s-app: coredns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      containers:
      - name: coredns
        image: harbor.od.com/public/coredns:v1.6.1
        args:
        - -conf
        - /etc/coredns/Corefile
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
      dnsPolicy: Default
      volumes:
      - name: config-volume
        configMap:
          name: coredns
          items:
          - key: Corefile
            path: Corefile

[root@hdss7-200 coredns]#

- Service

[root@hdss7-200 coredns]# more svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: coredns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: coredns
  clusterIP: 192.168.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
  - name: metrics
    port: 9153

[root@hdss7-200 coredns]#

3. Create the resources

Open http://k8s-yaml.od.com/coredns in a browser and check that the manifest files were created correctly

Apply the resource manifests on any compute node

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/coredns/rbac.yaml

serviceaccount/coredns created

clusterrole.rbac.authorization.k8s.io/system:coredns created

clusterrolebinding.rbac.authorization.k8s.io/system:coredns created

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/coredns/cm.yaml

configmap/coredns created

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/coredns/dp.yaml

deployment.apps/coredns created

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/coredns/svc.yaml

service/coredns created

[root@hdss7-21 ~]#

4. Verify

[root@hdss7-21 ~]# kubectl get all -n kube-system

NAME READY STATUS RESTARTS AGE

pod/coredns-6b6c4f9648-t5zds 1/1 Running 0 8m26s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/coredns ClusterIP 192.168.0.2 <none> 53/UDP,53/TCP,9153/TCP 8m23s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/coredns 1/1 1 1 8m26s

NAME DESIRED CURRENT READY AGE

replicaset.apps/coredns-6b6c4f9648 1 1 1 8m26s

- Test resolution with dig

[root@hdss7-21 ~]# dig -t A www.baidu.com @192.168.0.2 +short

www.a.shifen.com.

220.181.38.149

220.181.38.150

[root@hdss7-21 ~]# dig -t A hdss7-22.host.com @192.168.0.2 +short

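10.4.7.22

CoreDNS can already resolve names outside the cluster, because its configuration names 10.4.7.11 as the upstream DNS; any name it cannot answer itself is forwarded upstream and resolved recursively from there.

However, we do not run coredns for external resolution; its job here is to resolve Service names to Service IPs.

- Create a Service resource to verify this

# Create the deployment controller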

[root@hdss7-21 ~]# kubectl create deployment nginx-dp --image=harbor.od.com/public/nginx:v1.7.9 -n kube-public

deployment.apps/nginx-dp created

[root@hdss7-21 ~]# kubectl get deployment -n kube-public

NAME READY UP-TO-DATE AVAILABLE AGE

nginx-dp 1/1 1 1 12s

# Create the service

[root@hdss7-21 ~]# kubectl expose deployment nginx-dp --port=80 -n kube-public

service/nginx-dp exposed

[root@hdss7-21 ~]# kubectl get service -n kube-public

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

nginx-dp ClusterIP 192.168.8.193 <none> 80/TCP 17s

# Verify resolution

[root@hdss7-21 ~]# dig -t A nginx-dp.kube-public.svc.cluster.local @192.168.0.2 +short

192.168.8.193

# Full domain name format: <service name>.<namespace>.svc.cluster.local

# Verify again from inside a pod

[root@hdss7-21 ~]# kubectl exec -it nginx-dp-5dfc689474-vzs69 /bin/bash -n kube-public

root@nginx-dp-5dfc689474-vzs69:/# ping nginx-dp.kube-public.svc.cluster.local

PING nginx-dp.kube-public.svc.cluster.local (192.168.8.193): 48 data bytes

56 bytes from 192.168.8.193: icmp_seq=0 ttl=64 time=0.046 ms

root@nginx-dp-5dfc689474-vzs69:/# ping nginx-dp

PING nginx-dp.kube-public.svc.cluster.local (192.168.8.193): 48 data bytes

56 bytes from 192.168.8.193: icmp_seq=0 ttl=64 time=0.040 ms

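# Both the short name and the full name resolve; the short name works because the search domains are already written into the container's /etc/resolv.conf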

root@nginx-dp-5dfc689474-vzs69:/# more /etc/resolv.conf

nameserver 192.168.0.2

search kube-public.svc.cluster.local svc.cluster.local cluster.local

options ndots:5

root@nginx-dp-5dfc689474-vzs69:/#
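How the short name turns into the full one follows from the `search` and `ndots` lines of that resolv.conf. The sketch below lists the names a stub resolver would try, in order, under the usual glibc rule (a name with fewer dots than `ndots` is tried with each search domain first); `candidate_names` is a hypothetical helper that performs no DNS queries.

```python
SEARCH = ["kube-public.svc.cluster.local", "svc.cluster.local", "cluster.local"]


def candidate_names(name, search=SEARCH, ndots=5):
    """Return the fully qualified names to try, in resolver order."""
    if name.endswith("."):
        return [name]  # already absolute, the search list is not applied
    expanded = [f"{name}.{domain}." for domain in search]
    absolute = f"{name}."
    if name.count(".") >= ndots:
        return [absolute] + expanded
    return expanded + [absolute]


if __name__ == "__main__":
    for candidate in candidate_names("nginx-dp"):
        print(candidate)
```

For `nginx-dp` the first candidate is already `nginx-dp.kube-public.svc.cluster.local.`, which coredns answers with the cluster IP. The same rule explains why a pod in another namespace must use at least `nginx-dp.kube-public`.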

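Chapter 4: Kubernetes service exposure plugin - traefik

- K8S DNS lets services be discovered automatically inside the cluster. How can a service be used and accessed from outside the K8S cluster?

  - Use a Service of type NodePort

    - Note: this course treats the approach as incompatible with kube-proxy's ipvs mode and uses the iptables mode for it

  - Use an Ingress resource

    - Note: Ingress can only expose layer-7 applications, specifically the http and https protocols

- Ingress is one of the standard resource types of the K8S API and a core resource. It is simply a set of rules, based on domain names and URL paths, that forward user requests to a specific Service resource.

- It forwards request traffic from outside the cluster to the inside, which is how "service exposure" is achieved.

- An ingress controller is a component that listens on a socket on behalf of Ingress resources and routes traffic according to the Ingress rule matching.

- Put plainly, there is nothing mysterious about an ingress controller: it is a simplified nginx plus a piece of Go code.

- Commonly used ingress controller implementations

  - ingress-nginx

  - HAProxy

  - Traefik

  - ...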
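The idea that an Ingress is "a set of rules based on domain names and URL paths" can be made concrete with a tiny matcher. This sketch is not how traefik is implemented; it only illustrates host plus longest-path-prefix matching, using the traefik.od.com rule that this chapter defines in ingress.yaml. `Rule`, `RULES` and `route` are hypothetical names.

```python
from typing import NamedTuple, Optional, Tuple


class Rule(NamedTuple):
    host: str
    path: str
    service: str
    port: int


# Mirrors the single rule of the traefik-web-ui Ingress in this chapter
RULES = [Rule("traefik.od.com", "/", "traefik-ingress-service", 8080)]


def route(host: str, path: str, rules=RULES) -> Optional[Tuple[str, int]]:
    """Return (service, port) for a request, or None when no rule matches."""
    matches = [r for r in rules if r.host == host and path.startswith(r.path)]
    if not matches:
        return None
    best = max(matches, key=lambda r: len(r.path))  # most specific path wins
    return best.service, best.port


if __name__ == "__main__":
    print(route("traefik.od.com", "/dashboard/"))
    print(route("unknown.od.com", "/"))
```

The controller's real job is to keep such a rule table in sync with the Ingress objects in the API server and to proxy each matched request to the endpoints behind the chosen Service.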

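1. Background

1. Expose a service with a NodePort Service

Note: exposing a service this way, as done in this course, requires kube-proxy to run in iptables mode

1. First change proxy-mode to iptables; the scheduling algorithm is rr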

[root@hdss7-21 ~]# cat /opt/kubernetes/server/bin/kube-proxy.sh

#!/bin/sh

./kube-proxy \

--cluster-cidr 172.7.0.0/16 \

--hostname-override 10.4.7.21 \

--proxy-mode=iptables \

--ipvs-scheduler=rr \

--kubeconfig ./conf/kube-proxy.kubeconfig

[root@hdss7-22 ~]# cat /opt/kubernetes/server/bin/kube-proxy.sh

#!/bin/sh

./kube-proxy \

--cluster-cidr 172.7.0.0/16 \

--hostname-override 10.4.7.22 \

--proxy-mode=iptables \

--ipvs-scheduler=rr \

--kubeconfig ./conf/kube-proxy.kubeconfig

[root@hdss7-21 ~]#

2. Make the iptables mode take effect by restarting kube-proxy

[root@hdss7-21 ~]# supervisorctl restart kube-proxy-7-21

[root@hdss7-22 ~]# supervisorctl restart kube-proxy-7-22

3. Clean up the existing ipvs rules

[root@hdss7-21 ~]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.0.1:443 nq

-> 10.4.7.21:6443 Masq 1 0 0

-> 10.4.7.22:6443 Masq 1 1 0

TCP 192.168.0.2:53 nq

-> 172.7.21.4:53 Masq 1 0 0

TCP 192.168.0.2:9153 nq

-> 172.7.21.4:9153 Masq 1 0 0

TCP 192.168.34.212:80 nq

-> 172.7.21.3:80 Masq 1 0 0

UDP 192.168.0.2:53 nq

-> 172.7.21.4:53 Masq 1 0 0

[root@hdss7-21 ~]#

[root@hdss7-21 ~]# ipvsadm -D -t 192.168.0.1:443

[root@hdss7-21 ~]# ipvsadm -D -t 192.168.0.2:53

[root@hdss7-21 ~]# ipvsadm -D -t 192.168.0.2:9153

[root@hdss7-21 ~]# ipvsadm -D -t 192.168.34.212:80

[root@hdss7-21 ~]# ipvsadm -D -u 192.168.0.2:53

(Perform the same steps on both compute nodes)

2. Modify the Service manifest for nginx-ds

[root@hdss7-21 ~]# cat /root/nginx-ds-svc.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    app: nginx-ds
  name: nginx-ds
  namespace: default
spec:
  ports:
  - port: 80
    protocol: TCP
    nodePort: 8000
  selector:
    app: nginx-ds
  sessionAffinity: None
  type: NodePort

[root@hdss7-21 ~]# kubectl apply -f nginx-ds-svc.yaml

3. Check the service

[root@hdss7-21 ~]#kubectl get svc nginx-ds

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

nginx-ds NodePort 192.168.37.111 <none> 80:8000/TCP 2m20s

[root@hdss7-21 ~]# netstat -lntup|grep 8000

tcp6 0 0 :::8000 :::* LISTEN 26695/./kube-proxy

- What a NodePort really is

[root@hdss7-21 ~]# iptables-save | grep 8000

-A KUBE-FIREWALL -m comment --comment "kubernetes firewall for dropping marked packets" -m mark --mark 0x8000/0x8000 -j DROP

-A KUBE-MARK-DROP -j MARK --set-xmark 0x8000/0x8000

-A KUBE-NODEPORTS -p tcp -m comment --comment "default/nginx-ds:" -m tcp --dport 8000 -j KUBE-MARK-MASQ

-A KUBE-NODEPORTS -p tcp -m comment --comment "default/nginx-ds:" -m tcp --dport 8000 -j KUBE-SVC-T4RQBNWQFKKBCRET

4. Access from a browser

Visit http://10.4.7.21:8000/ or http://10.4.7.22:8000/

2. Deploy traefik (ingress controller)

1. Prepare the traefik image

On the ops host HDSS7-200.host.com:

[root@hdss7-200 ~]# docker pull traefik:v1.7.2-alpine

[root@hdss7-200 ~]# docker images|grep traefik

traefik v1.7.2-alpine add5fac61ae5 2 years ago 72.4MB

[root@hdss7-200 ~]# docker tag add5fac61ae5 harbor.od.com/public/traefik:v1.7.2

[root@hdss7-200 ~]# docker push harbor.od.com/public/traefik:v1.7.2

2. Prepare the resource manifests

[root@hdss7-200 ~]# cd /data/k8s-yaml/

[root@hdss7-200 k8s-yaml]# mkdir traefik

[root@hdss7-200 k8s-yaml]# cd traefik/

[root@hdss7-200 traefik]# more rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: traefik-ingress-controller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: traefik-ingress-controller
rules:
  - apiGroups:
      - ""
    resources:
      - services
      - endpoints
      - secrets
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: traefik-ingress-controller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: traefik-ingress-controller
subjects:
- kind: ServiceAccount
  name: traefik-ingress-controller
  namespace: kube-system

[root@hdss7-200 traefik]#

[root@hdss7-200 traefik]# more ds.yaml

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: traefik-ingress
  namespace: kube-system
  labels:
    k8s-app: traefik-ingress
spec:
  template:
    metadata:
      labels:
        k8s-app: traefik-ingress
        name: traefik-ingress
    spec:
      serviceAccountName: traefik-ingress-controller
      terminationGracePeriodSeconds: 60
      containers:
      - image: harbor.od.com/public/traefik:v1.7.2
        name: traefik-ingress
        ports:
        - name: controller
          containerPort: 80
          hostPort: 81
        - name: admin-web
          containerPort: 8080
        securityContext:
          capabilities:
            drop:
            - ALL
            add:
            - NET_BIND_SERVICE
        args:
        - --api
        - --kubernetes
        - --logLevel=INFO
        - --insecureskipverify=true
        - --kubernetes.endpoint=https://10.4.7.10:7443
        - --accesslog
        - --accesslog.filepath=/var/log/traefik_access.log
        - --traefiklog
        - --traefiklog.filepath=/var/log/traefik.log
        - --metrics.prometheus

[root@hdss7-200 traefik]#

[root@hdss7-200 traefik]# more svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: traefik-ingress-service
  namespace: kube-system
spec:
  selector:
    k8s-app: traefik-ingress
  ports:
    - protocol: TCP
      port: 80
      name: controller
    - protocol: TCP
      port: 8080
      name: admin-web

[root@hdss7-200 traefik]#

[root@hdss7-200 traefik]# more ingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: traefik-web-ui
  namespace: kube-system
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: traefik.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: traefik-ingress-service
          servicePort: 8080

[root@hdss7-200 traefik]#

3. Create the resources in order

[root@hdss7-21 ~]#

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/traefik/rbac.yaml

[root@hdss7-21 ~]#

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/traefik/ds.yaml

[root@hdss7-21 ~]#

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/traefik/svc.yaml

[root@hdss7-21 ~]#

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/traefik/ingress.yaml

4. Add the DNS record

[root@hdss7-11 ~]# vim /var/named/od.com.zone

$ORIGIN od.com.

$TTL 600 ; 10 minutes

@ IN SOA dns.od.com. dnsadmin.od.com. (

2021020204 ; serial

10800 ; refresh (3 hours)

900 ; retry (15 minutes)

604800 ; expire (1 week)

86400 ; minimum (1 day)

)

NS dns.od.com.

$TTL 60 ; 1 minute

dns A 10.4.7.11

harbor A 10.4.7.200

k8s-yaml A 10.4.7.200

traefik A 10.4.7.10

(Roll the SOA serial number forward)

[root@hdss7-11 ~]# named-checkconf

[root@hdss7-11 ~]# systemctl restart named

5. Configure the reverse proxy

nginx must be configured on both hosts, HDSS7-11.host.com and HDSS7-12.host.com

[root@hdss7-11 ~]# more /etc/nginx/conf.d/od.com.conf

upstream default_backend_traefik {

server 10.4.7.21:81 max_fails=3 fail_timeout=10s;

server 10.4.7.22:81 max_fails=3 fail_timeout=10s;

}

server {

server_name *.od.com;

location / {

proxy_pass http://default_backend_traefik;

proxy_set_header Host $http_host;

proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;

}

}

[root@hdss7-11 ~]#

[root@hdss7-11 ~]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

[root@hdss7-11 ~]# nginx -s reload

[root@hdss7-12 ~]# more /etc/nginx/conf.d/od.com.conf

upstream default_backend_traefik {

server 10.4.7.21:81 max_fails=3 fail_timeout=10s;

server 10.4.7.22:81 max_fails=3 fail_timeout=10s;

}

server {

server_name *.od.com;

location / {

proxy_pass http://default_backend_traefik;

proxy_set_header Host $http_host;

proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;

}

}

[root@hdss7-12 ~]#

[root@hdss7-12 ~]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

[root@hdss7-12 ~]# nginx -s reload
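What this nginx configuration does with a request can be sketched in a few lines: a host name is accepted if it falls under the `*.od.com` wildcard, and accepted requests are spread over the two traefik host ports in turn. This is a simplified model (equal weights, no `max_fails` or `fail_timeout` handling); `matches_wildcard` and `pick_backend` are hypothetical names.

```python
import itertools

UPSTREAM = ["10.4.7.21:81", "10.4.7.22:81"]
_next_backend = itertools.cycle(UPSTREAM)  # plain round robin


def matches_wildcard(host, pattern="*.od.com"):
    """True for any subdomain of od.com, but not for od.com itself."""
    suffix = pattern[1:]  # ".od.com"
    host = host.lower()
    return host.endswith(suffix) and len(host) > len(suffix)


def pick_backend(host):
    """Return the upstream that would receive this request, or None."""
    if not matches_wildcard(host):
        return None
    return next(_next_backend)


if __name__ == "__main__":
    for name in ["traefik.od.com", "dashboard.od.com", "od.com"]:
        print(name, "->", pick_backend(name))
```

Because every `*.od.com` name lands on traefik's host port 81, adding a new application later only needs a DNS record and an Ingress rule; the nginx layer stays unchanged.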

6. Check

[root@hdss7-21 ~]# netstat -lntup|grep 81

tcp 0 0 0.0.0.0:81 0.0.0.0:* LISTEN 106859/docker-proxy

[root@hdss7-22 ~]# netstat -lntup|grep 81

tcp 0 0 0.0.0.0:81 0.0.0.0:* LISTEN 108347/docker-proxy

[root@hdss7-21 ~]# kubectl get all -n kube-system

NAME READY STATUS RESTARTS AGE

pod/coredns-6b6c4f9648-nptxv 1/1 Running 0 8d

pod/traefik-ingress-648zm 1/1 Running 0 47m

pod/traefik-ingress-68k8z 1/1 Running 0 47m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/coredns ClusterIP 192.168.0.2 <none> 53/UDP,53/TCP,9153/TCP 8d

service/traefik-ingress-service ClusterIP 192.168.102.93 <none> 80/TCP,8080/TCP 47m

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/traefik-ingress 2 2 2 2 2 <none> 47m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/coredns 1/1 1 1 8d

NAME DESIRED CURRENT READY AGE

replicaset.apps/coredns-6b6c4f9648 1 1 1 8d

If the pods do not come up, restart docker

[root@hdss7-21 ~]# systemctl restart docker

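[root@hdss7-22 ~]# systemctl restart docker

7. Access from a browser

Chapter 5: Recap

- Managing K8S core resources (CRUD)

  - Imperative management -> based on the many kubectl commands

  - Declarative management -> based on K8S resource manifests

  - GUI management -> based on the K8S dashboard

- K8S CNI network plugins

  - Many to choose from; flannel is used as the example

  - Three common working modes

  - Optimizing the SNAT rule

- K8S service discovery

  - Cluster network -> Cluster IP

  - Service resource -> Service Name

  - Coredns -> automatically associates the Service Name with the Cluster IP

- K8S service exposure

  - Ingress resource -> a core resource dedicated to exposing layer-7 applications (http/https) outside the K8S cluster

  - Ingress controller -> a simplified nginx (traffic scheduling) + Go code (dynamically recognizes the yaml)

  - Traefik -> one software implementation of an ingress controller

Chapter 6: K8S GUI resource management plugin - the dashboard

1. Deploy kubernetes-dashboard

Official dashboard GitHub

dashboard download address

1. Prepare the dashboard image

On the ops host HDSS7-200.host.com: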

[root@hdss7-200 ~]# docker pull k8scn/kubernetes-dashboard-amd64:v1.8.3

v1.8.3: Pulling from k8scn/kubernetes-dashboard-amd64

a4026007c47e: Pull complete

Digest: sha256:ebc993303f8a42c301592639770bd1944d80c88be8036e2d4d0aa116148264ff

Status: Downloaded newer image for k8scn/kubernetes-dashboard-amd64:v1.8.3

docker.io/k8scn/kubernetes-dashboard-amd64:v1.8.3

[root@hdss7-200 ~]# docker images|grep dashboard

k8scn/kubernetes-dashboard-amd64 v1.8.3 fcac9aa03fd6 2 years ago 102MB

[root@hdss7-200 ~]# docker tag fcac9aa03fd6 harbor.od.com/public/dashboard:v1.8.3

[root@hdss7-200 ~]# docker push harbor.od.com/public/dashboard:v1.8.3

2. Prepare the resource manifests

- Create the directory

mkdir -p /data/k8s-yaml/dashboard && cd /data/k8s-yaml/dashboard

- rbac

[root@hdss7-200 dashboard]# more rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard-admin
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard-admin
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard-admin
  namespace: kube-system

[root@hdss7-200 dashboard]#

- deployment

[root@hdss7-200 dashboard]# more dp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      priorityClassName: system-cluster-critical
      containers:
      - name: kubernetes-dashboard
        image: harbor.od.com/public/dashboard:v1.8.3
        resources:
          limits:
            cpu: 100m
            memory: 300Mi
          requests:
            cpu: 50m
            memory: 100Mi
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          # PLATFORM-SPECIFIC ARGS HERE
          - --auto-generate-certificates
        volumeMounts:
        - name: tmp-volume
          mountPath: /tmp
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard-admin
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"

[root@hdss7-200 dashboard]#

- service

[root@hdss7-200 dashboard]# more svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 443
    targetPort: 8443

[root@hdss7-200 dashboard]#

- ingress

[root@hdss7-200 dashboard]# more ingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: dashboard.od.com
    http:
      paths:
      - backend:
          serviceName: kubernetes-dashboard
          servicePort: 443

[root@hdss7-200 dashboard]#

3. Apply the resource manifests in order

Apply the resource manifests on any one of the compute nodes.
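The four `kubectl apply` commands in this step differ only in the file name, so they can be driven from a loop. The following is a minimal sketch, not part of the original course material: the function names `manifest_urls` and `apply_dashboard` and the `KUBECTL` override variable are hypothetical helpers introduced here for illustration, and the base URL is the one used in this step.

```shell
#!/bin/sh
# Base URL of the internal manifest server used in this step.
BASE_URL="http://k8s-yaml.od.com/dashboard"

# Print the manifest URLs in the order this step applies them.
manifest_urls() {
  for name in rbac dp svc ingress; do
    printf '%s/%s.yaml\n' "$BASE_URL" "$name"
  done
}

# Apply every manifest, stopping at the first failure.
# KUBECTL is a hypothetical override hook, e.g. KUBECTL=echo for a dry run.
apply_dashboard() {
  for url in $(manifest_urls); do
    ${KUBECTL:-kubectl} apply -f "$url" || return 1
  done
}
```

Running `KUBECTL=echo apply_dashboard` prints the commands without touching the cluster; running `apply_dashboard` on a compute node should behave like the four manual commands.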

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/dashboard/rbac.yaml

serviceaccount/kubernetes-dashboard-admin created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-admin created

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/dashboard/dp.yaml

deployment.apps/kubernetes-dashboard created

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/dashboard/svc.yaml

service/kubernetes-dashboard created

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/dashboard/ingress.yaml

ingress.extensions/kubernetes-dashboard created

4. Resolve the domain name

On HDSS7-11.host.com, add the following A record to /var/named/od.com.zone:

dashboard A 10.4.7.10

[root@hdss7-11 ~]# named-checkconf

[root@hdss7-11 ~]# systemctl restart named

[root@hdss7-11 ~]# dig -t A dashboard.od.com @10.4.7.11 +short

10.4.7.10
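Editing the zone file by hand makes it easy to add the same record twice. As a hedged sketch, the hypothetical helper `add_a_record` below appends a record only when no simple `<name> A <ip>` line for that name exists yet. It assumes records are written in the short form used in this step and does not handle entries that carry a TTL or class field.

```shell
#!/bin/sh
# Append "<name> A <ip>" to a zone file unless a record for <name> exists.
# Arguments: zone file path, host label, IPv4 address.
add_a_record() {
  zone_file=$1
  name=$2
  ip=$3
  # Only matches the short "<name> A <ip>" form used in this tutorial.
  if grep -Eq "^${name}[[:space:]]+A[[:space:]]+" "$zone_file"; then
    echo "record for ${name} already present, skipping"
    return 0
  fi
  printf '%s\tA\t%s\n' "$name" "$ip" >> "$zone_file"
}
```

For example, `add_a_record /var/named/od.com.zone dashboard 10.4.7.10`. Since `named-checkconf` validates only named.conf and not the zone data, it may be worth running `named-checkzone od.com /var/named/od.com.zone` before restarting named. If the zone is served by secondaries, the SOA serial also needs to be incremented.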

[root@hdss7-11 ~]#

5. Access from a browser

http://dashboard.od.com

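If the web UI appears, the deployment succeeded. Note that this installs dashboard v1.8, which by default lets users skip authentication.

Allowing login to be skipped is unsafe, so the dashboard should later be upgraded to v1.10 or above.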

6. Configure the SSL certificate

1. Issue the dashboard.od.com certificate

[root@hdss7-200 ~]# cd /opt/certs/

[root@hdss7-200 certs]# (umask 077; openssl genrsa -out dashboard.od.com.key 2048)

[root@hdss7-200 certs]# openssl req -new -key dashboard.od.com.key -out dashboard.od.com.csr -subj "/CN=dashboard.od.com/C=CN/ST=BJ/L=Beijing/O=OldboyEdu/OU=ops"

[root@hdss7-200 certs]# openssl x509 -req -in dashboard.od.com.csr -CA ca.pem -CAkey ca-key.pem -CAcreateserial -out dashboard.od.com.crt -days 3650

Signature ok

subject=/CN=dashboard.od.com/C=CN/ST=BJ/L=Beijing/O=OldboyEdu/OU=ops

Getting CA Private Key

[root@hdss7-200 certs]# ll dashboard*

-rw-r--r-- 1 root root 1196 Feb 19 10:19 dashboard.od.com.crt

-rw-r--r-- 1 root root 1005 Feb 19 10:19 dashboard.od.com.csr

-rw------- 1 root root 1679 Feb 19 10:18 dashboard.od.com.key

2. Check the certificate

[root@hdss7-200 certs]# cfssl-certinfo -cert dashboard.od.com.crt

{

"subject": {

"common_name": "dashboard.od.com",

"country": "CN",

"organization": "OldboyEdu",

"organizational_unit": "ops",

"locality": "Beijing",

"province": "BJ",

"names": [

"dashboard.od.com",

"CN",

"BJ",

"Beijing",

"OldboyEdu",

"ops"

]

},

"issuer": {

"common_name": "OldboyEdu",

"country": "CN",

"organization": "od",

"organizational_unit": "ops",

"locality": "beijing",

"province": "beijing",

"names": [

"CN",

"beijing",

"beijing",

"od",

"ops",

"OldboyEdu"

]

},

"serial_number": "10131526603875025429",

"not_before": "2021-03-25T07:15:30Z",

"not_after": "2031-03-23T07:15:30Z",

"sigalg": "SHA256WithRSA",

"authority_key_id": "",

"subject_key_id": "",

"pem": "-----BEGIN CERTIFICATE-----\nMIIDRTCCAi0CCQCMmmnEYGQCFTANBgkqhkiG9w0BAQsFADBgMQswCQYDVQQGEwJD\nTjEQMA4GA1UECBMHYmVpamluZzEQMA4GA1UEBxMHYmVpamluZzELMAkGA1UEChMC\nb2QxDDAKBgNVBAsTA29wczESMBAGA1UEAxMJT2xkYm95RWR1MB4XDTIxMDMyNTA3\nMTUzMFoXDTMxMDMyMzA3MTUzMFowaTEZMBcGA1UEAwwQZGFzaGJvYXJkLm9kLmNv\nbTELMAkGA1UEBhMCQ04xCzAJBgNVBAgMAkJKMRAwDgYDVQQHDAdCZWlqaW5nMRIw\nEAYDVQQKDAlPbGRib3lFZHUxDDAKBgNVBAsMA29wczCCASIwDQYJKoZIhvcNAQEB\nBQADggEPADCCAQoCggEBAKboP/QKKSjfIqIPMthexbZxia7k0iDD5fSUvokHWetj\nnqGBVA5HC+hUlLkQhJJ2oP19TxKmkODZlQvRh0Xin2VRZw3UEss2I41fP+Vr2bTe\nLIMB3yW8CMiUJMueiTL0Nquwz/7Y5IptJPYxDSu13OwZcyfC7rGkSkyrxiorp1Vq\n69ucm0oHBMjznOoJEIvKlhFSJnDG0o7cr54rOIcZ8UvXt988swg/k8nGXsQP8BYv\nzFnMOsVdojynCOGaj3gS4OFEJJykPf3JG9Y9kL8S/Z3fT+EMViK3AxyyPn6/AMMA\nHm4AJlMPzwrFjpv7TeLjekyTNjrsru5ZJG5ZisS2lx0CAwEAATANBgkqhkiG9w0B\nAQsFAAOCAQEAhViPznRJT70FPTeVYn8MdTN++CsmOnkm+RRac2XJ+zuC4/3bWo2s\n+bjjaOlCJoj+vC90rCt8w8nDllBsEILfqboY2+zrj4D2vbrPnu6Tqd1jtqXT0hs1\nChN3CcEUg9HQaotZayRa3ifpxshtL3YAtBq6FRIeRC0v0U02ql0FKjWvN1O39Tyk\nd8zYvRJyTwfDNffDNY153vVdhhl+jUsoyy6Hp9vxpU1WU5rGhbVnesSxjmN4pXOl\nVpxqcQ7uSIw+1P+XybWTrsMvtRJzYJuimSKCz/FXhkRWuLyLn9CfIsxRN23SuG17\noTIOCIkE6xBod8ffeEdlmSqhWclt0D4tTQ==\n-----END CERTIFICATE-----\n"

}
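Besides inspecting the fields with cfssl-certinfo, it can be useful to confirm that the certificate really chains to the CA that signed it. The sketch below uses only standard `openssl` subcommands; the function names `verify_cert` and `show_cert` are hypothetical wrappers introduced for illustration.

```shell
#!/bin/sh
# Return 0 if <cert> chains to <ca>; openssl prints "<cert>: OK" on success.
# Arguments: CA certificate path, certificate path.
verify_cert() {
  ca=$1
  cert=$2
  openssl verify -CAfile "$ca" "$cert"
}

# Print the subject and expiry date (notAfter) of a certificate.
show_cert() {
  openssl x509 -noout -subject -enddate -in "$1"
}
```

In /opt/certs this would be called as `verify_cert ca.pem dashboard.od.com.crt` followed by `show_cert dashboard.od.com.crt`.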

[root@hdss7-200 certs]#

3. Configure nginx

- Copy the certificates

This must be done on both hdss7-11 and hdss7-12.

[root@hdss7-11 ~]# cd /etc/nginx/

[root@hdss7-11 nginx]# mkdir certs

[root@hdss7-11 nginx]# cd certs/

[root@hdss7-11 certs]# scp hdss7-200:/opt/certs/dashboard.od.com.key .

[root@hdss7-11 certs]# scp hdss7-200:/opt/certs/dashboard.od.com.crt .

- Configure the virtual host dashboard.od.com.conf to serve over HTTPS

vim /etc/nginx/conf.d/dashboard.od.com.conf

server {
    listen 80;
    server_name dashboard.od.com;
    rewrite ^(.*)$ https://${server_name}$1 permanent;
}

server {
    listen 443 ssl;
    server_name dashboard.od.com;
    ssl_certificate "certs/dashboard.od.com.crt";
    ssl_certificate_key "certs/dashboard.od.com.key";
    ssl_session_cache shared:SSL:1m;
    ssl_session_timeout 10m;
    ssl_ciphers HIGH:!aNULL:!MD5;
    ssl_prefer_server_ciphers on;

    location / {
        proxy_pass http://default_backend_traefik;
        proxy_set_header Host $http_host;
        proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;
    }
}

[root@hdss7-11 ~]#

[root@hdss7-11 ~]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

[root@hdss7-11 ~]# nginx -s reload

- Refresh the page to check
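The port-80 server block uses `rewrite ... permanent`, so a plain HTTP request should come back as a 301 pointing at an https:// URL. This can be checked from the command line as well as in the browser. The sketch below assumes response headers are piped in on stdin; `is_https_redirect` is a hypothetical helper name, not an existing tool.

```shell
#!/bin/sh
# Read HTTP response headers on stdin; succeed only if the status is 301
# and the Location header points at an https:// URL.
is_https_redirect() {
  awk '
    NR == 1 && $2 == "301" { status = 1 }
    tolower($1) == "location:" && $2 ~ /^https:\/\// { loc = 1 }
    END { exit !(status && loc) }
  '
}
```

A possible use is `curl -sI http://dashboard.od.com | is_https_redirect && echo "redirect OK"`, run from any host that resolves dashboard.od.com through the internal DNS.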

4. Get the kubernetes-dashboard-admin token

[root@hdss7-21 ~]# kubectl get secret -n kube-system

NAME TYPE DATA AGE

coredns-token-8dscj kubernetes.io/service-account-token 3 4h18m

default-token-6xwzp kubernetes.io/service-account-token 3 2d4h

kubernetes-dashboard-admin-token-9ghpn kubernetes.io/service-account-token 3 56m

kubernetes-dashboard-key-holder Opaque 2 55m

traefik-ingress-controller-token-76jsn kubernetes.io/service-account-token 3 103m
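The random suffix of the token secret (`-9ghpn` here) differs on every cluster, so copying the name by hand does not carry over between environments. As a minimal sketch, the hypothetical helper `find_token_secret` picks the name out of the listing above. It assumes the cluster auto-creates `<serviceaccount>-token-<suffix>` secrets, as the one in this course does.

```shell
#!/bin/sh
# Read `kubectl get secret` output on stdin and print the first secret whose
# name starts with "<service-account>-token-".
# Argument: service account name.
find_token_secret() {
  awk -v prefix="$1-token-" 'index($1, prefix) == 1 { print $1; exit }'
}
```

For example, `name=$(kubectl get secret -n kube-system | find_token_secret kubernetes-dashboard-admin)` stores the secret name. The token itself could then be printed with `kubectl get secret "$name" -n kube-system -o jsonpath='{.data.token}' | base64 -d` (GNU coreutils `base64` assumed).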

[root@hdss7-21 ~]# kubectl describe secret kubernetes-dashboard-admin-token-9ghpn -n kube-system

Name: kubernetes-dashboard-admin-token-9ghpn

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: kubernetes-dashboard-admin

kubernetes.io/service-account.uid: 915402a3-d336-4160-9d2f-6a4efaf07a1e

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1346 bytes

namespace: 11 bytes