- 第一章: 容器云概述

- kubernetes不是什么?

- 第二章:Spinnaker概述

- 1.主要功能

- 第三章:自动化运维平台架构详解

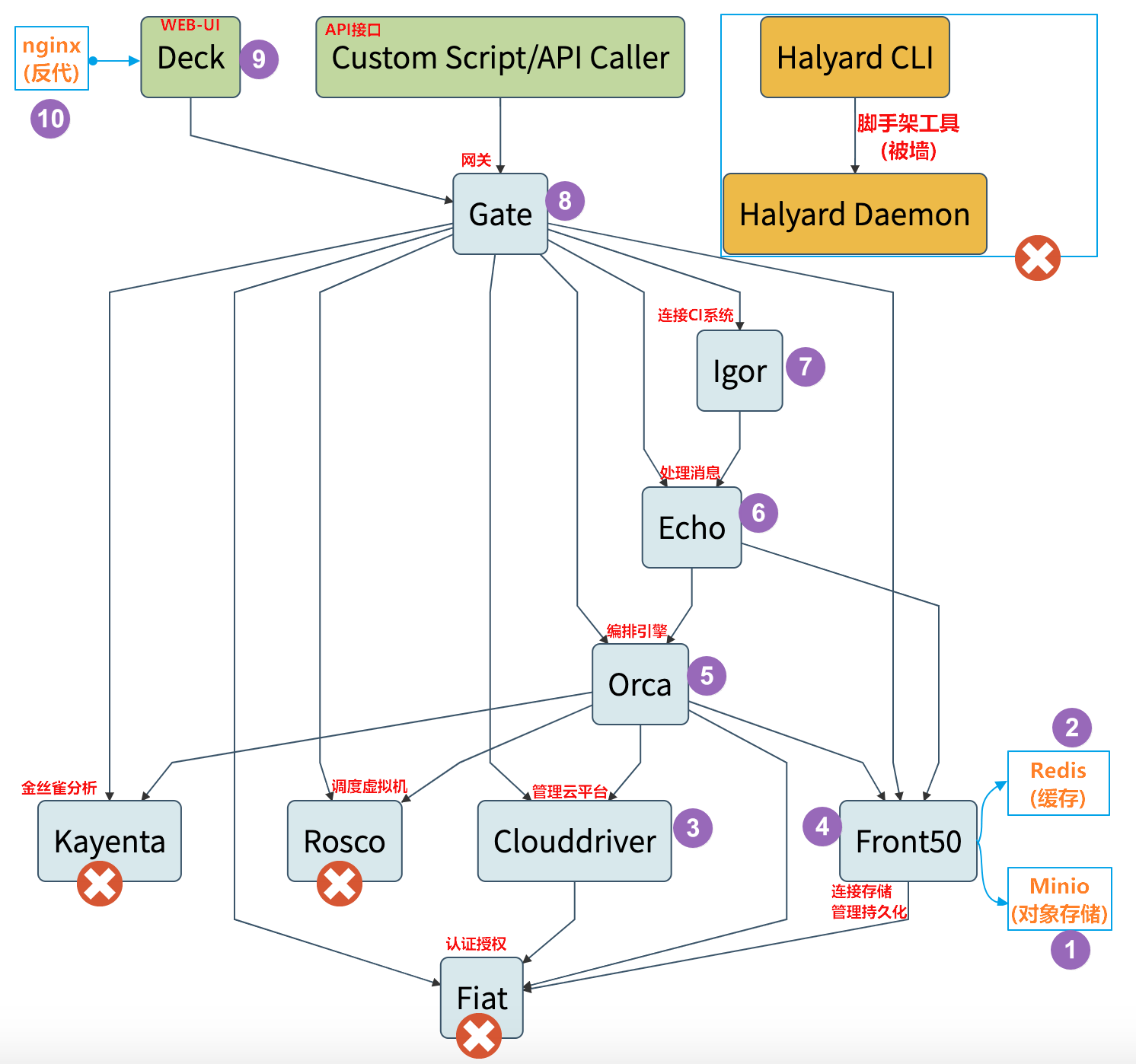

- 1.逻辑架构图

- 2.理解结构图

- 3.部署选型

- 第四章:安装部署Spinnaker微服务集群

- 1.部署对象存储组件-minio

- 1.1.下载镜像

- 1.2.准备资源配置清单

- 1.3.解析域名

- 1.4.应用资源配置清单

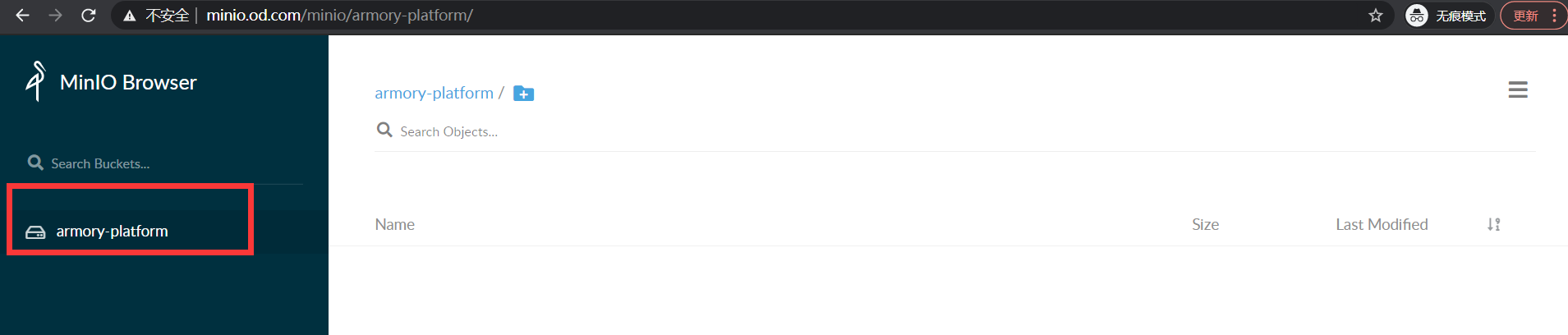

- 1.5.浏览器访问

- 2.部署缓存组件—Redis

- 2.1.准备docker镜像

- 2.2.准备资源配置清单

- 2.3.应用资源配置清单

- 2.4.检查redis

- 3.部署k8s云驱动组件—CloudDriver

- 3.1.准备docker镜像

- 3.2.准备minio的secret

- 3.3准备K8S的用户配置

- 1.签发证书和私钥

- 2.做kubeconfig配置

- 3.验证cluster-admin用户

- 4.创建configmap配置

- 5.配置运维主机管理k8s集群

- 6.控制节点config和远程config 对比:(分析)

- 3.4.准备资源配置清单

- 3.5.应用资源配置清单

- 3.6 验证clouddriver

- 4.部署数据持久化组件-Front50

- 4.1.准备docker镜像

- 4.2.准备资源配置清单

- 4.3.应用资源配置清单

- 4.4.检查

- 4.5.浏览器访问

- 5.部署任务编排组件–Orca

- 5.1.准备docker镜像

- 5.2.准备资源配置清单

- 5.3.应用资源配置清单

- 5.4.检查

- 6.部署消息总线组件–Echo

- 6.1.准备docker镜像

- 6.2.准备资源配置清单

- 6.3.应用资源配置清单

- 6.4.检查

- 7.部署流水线交互组件–lgor

- 7.1.准备docker镜像

- 7.2.准备资源配置清单

- 7.3.应用资源配置清单

- 7.4.检查

- 8.部署api提供组件–Gate

- 8.1.准备docker镜像

- 8.2.准备资源配置清单

- 8.3.应用资源配置清单

- 8.4.检查

- 9.部署前端网站项目–Deck

- 9.1.准备docker镜像

- 9.2.准备资源配置清单

- 9.3.应用资源配置清单

- 9.4.检查

- 10.部署前端代理–Nginx

- 10.1.准备docker镜像

- 10.2.准备资源配置清单

- 10.3.应用资源配置清单

- 10.4.域名解析

- 10.5.浏览器访问

- 第五章:使用spinnaker结合jenkins构建镜像(CI的过程)

- 5.1.使用spinnaker前期准备

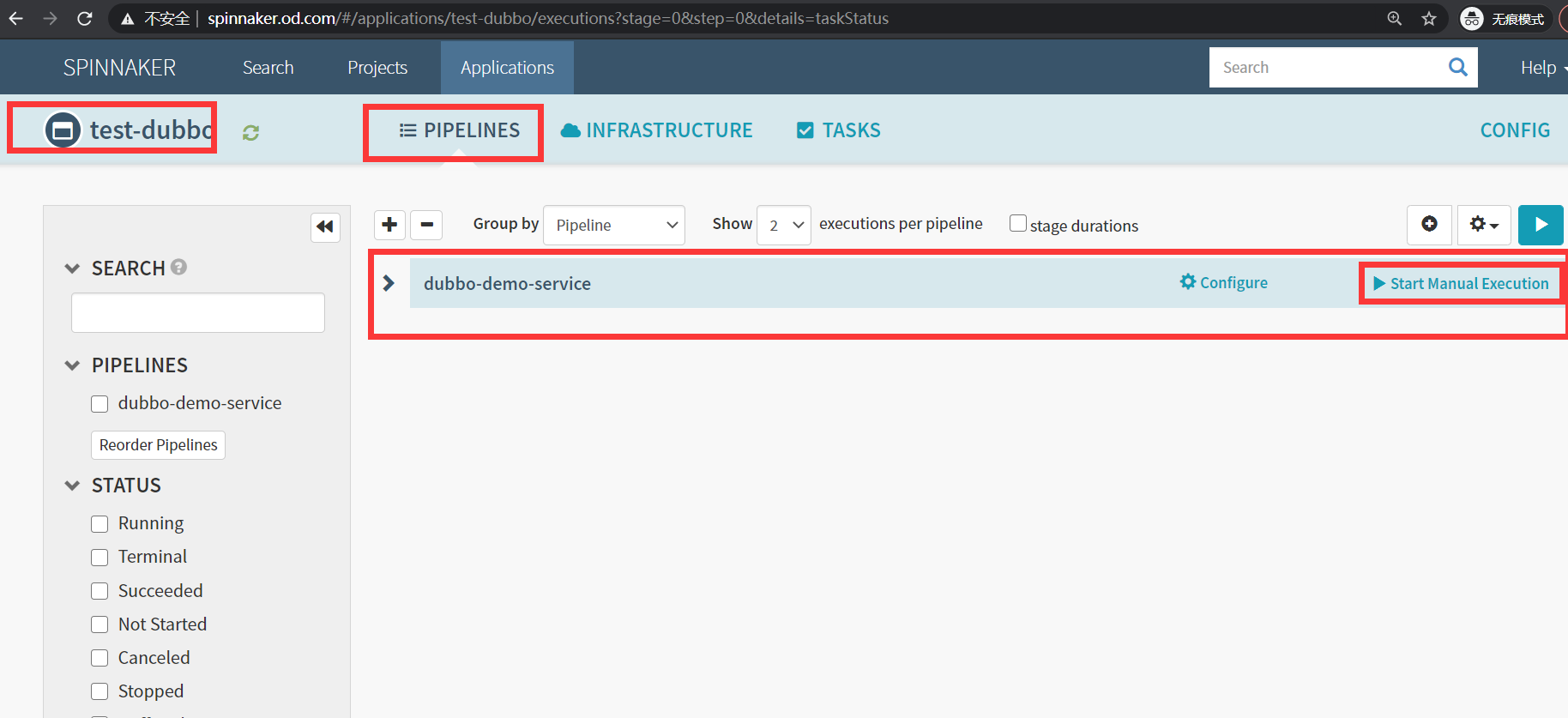

- 5.2.使用spinnaker创建dubbo

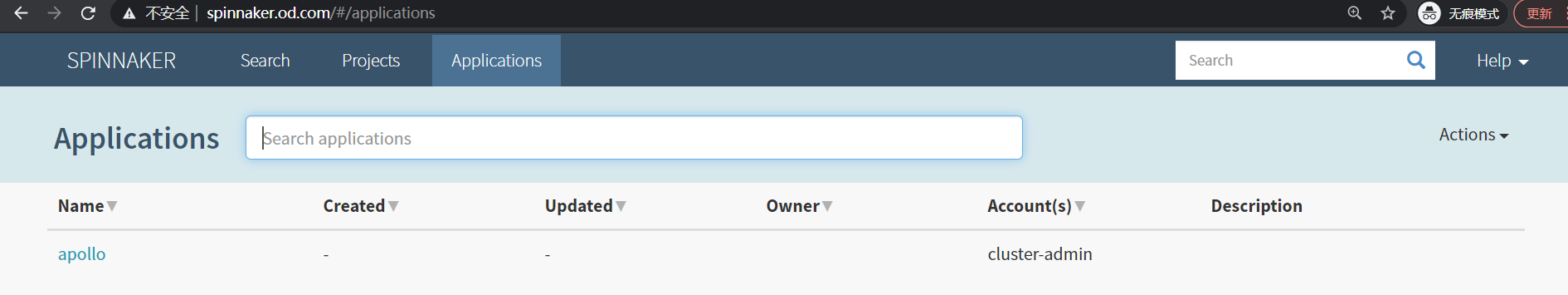

- 1.创建应用集

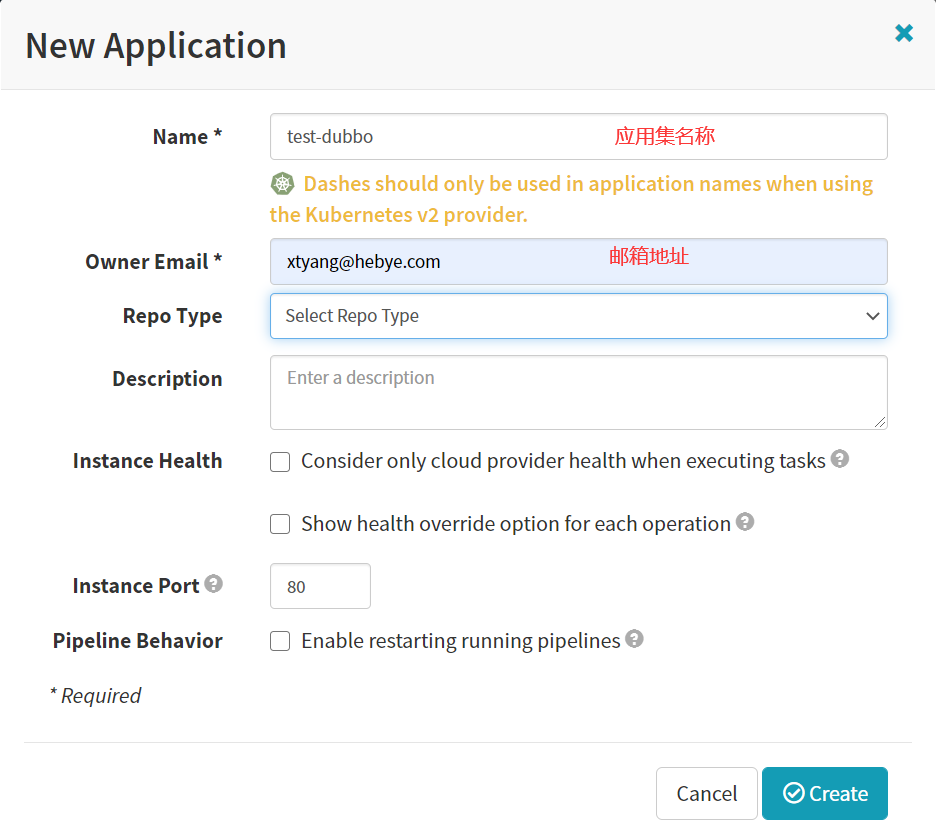

- 2.创建pipelines

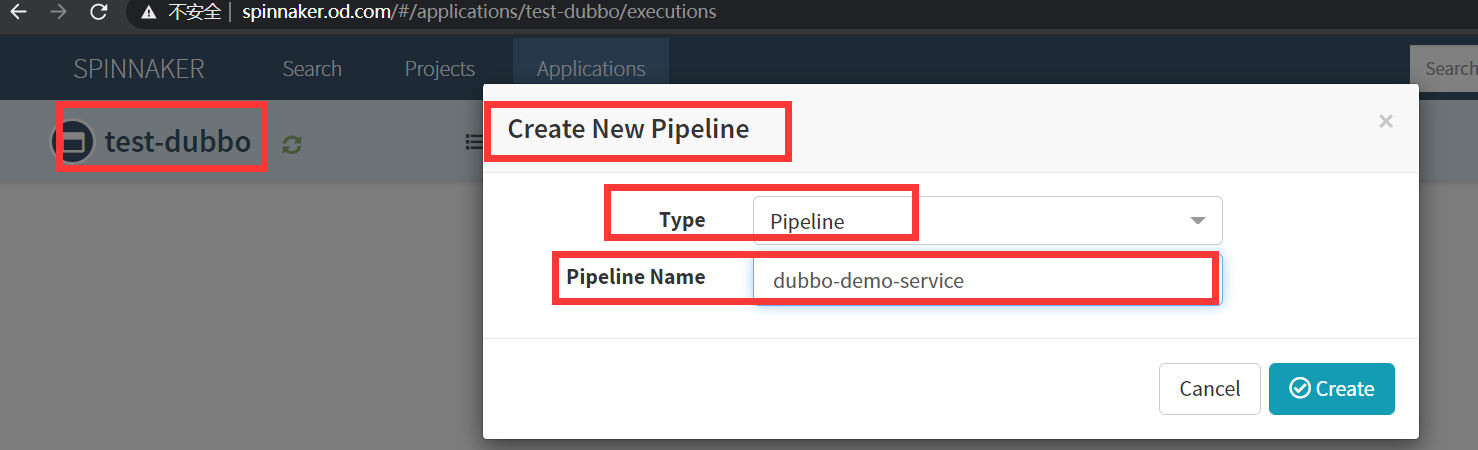

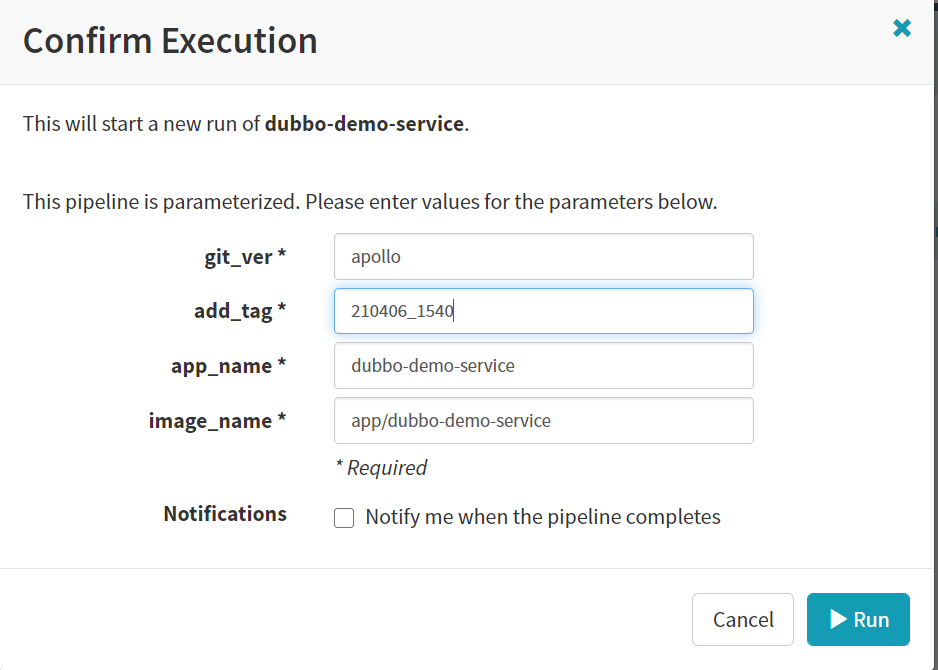

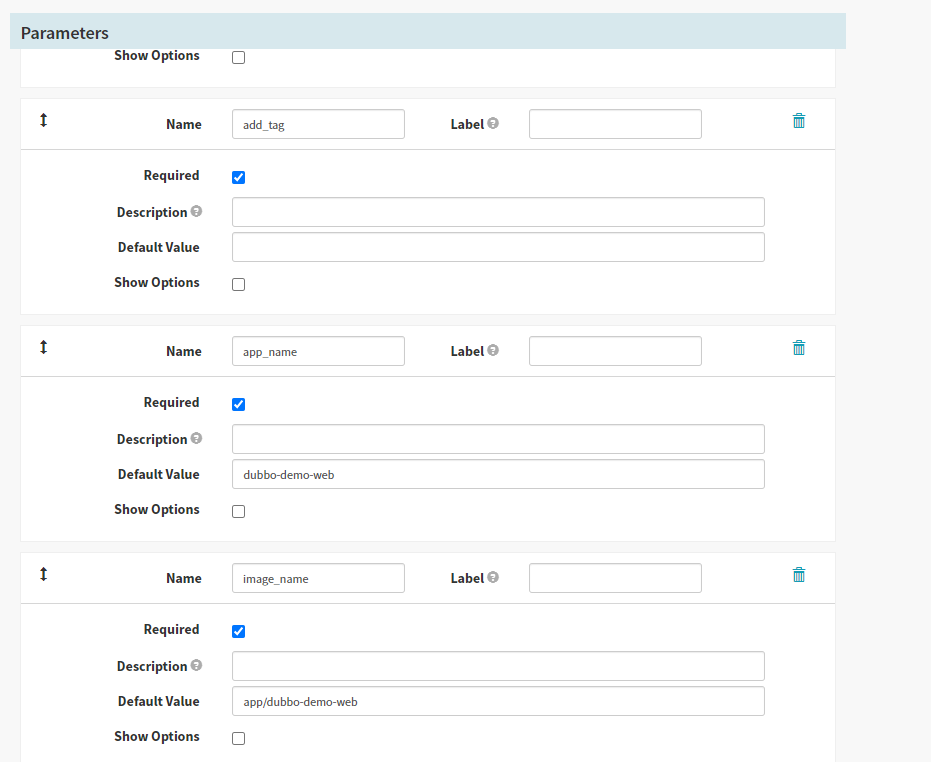

- 3.配置加4个参数(Parameters)

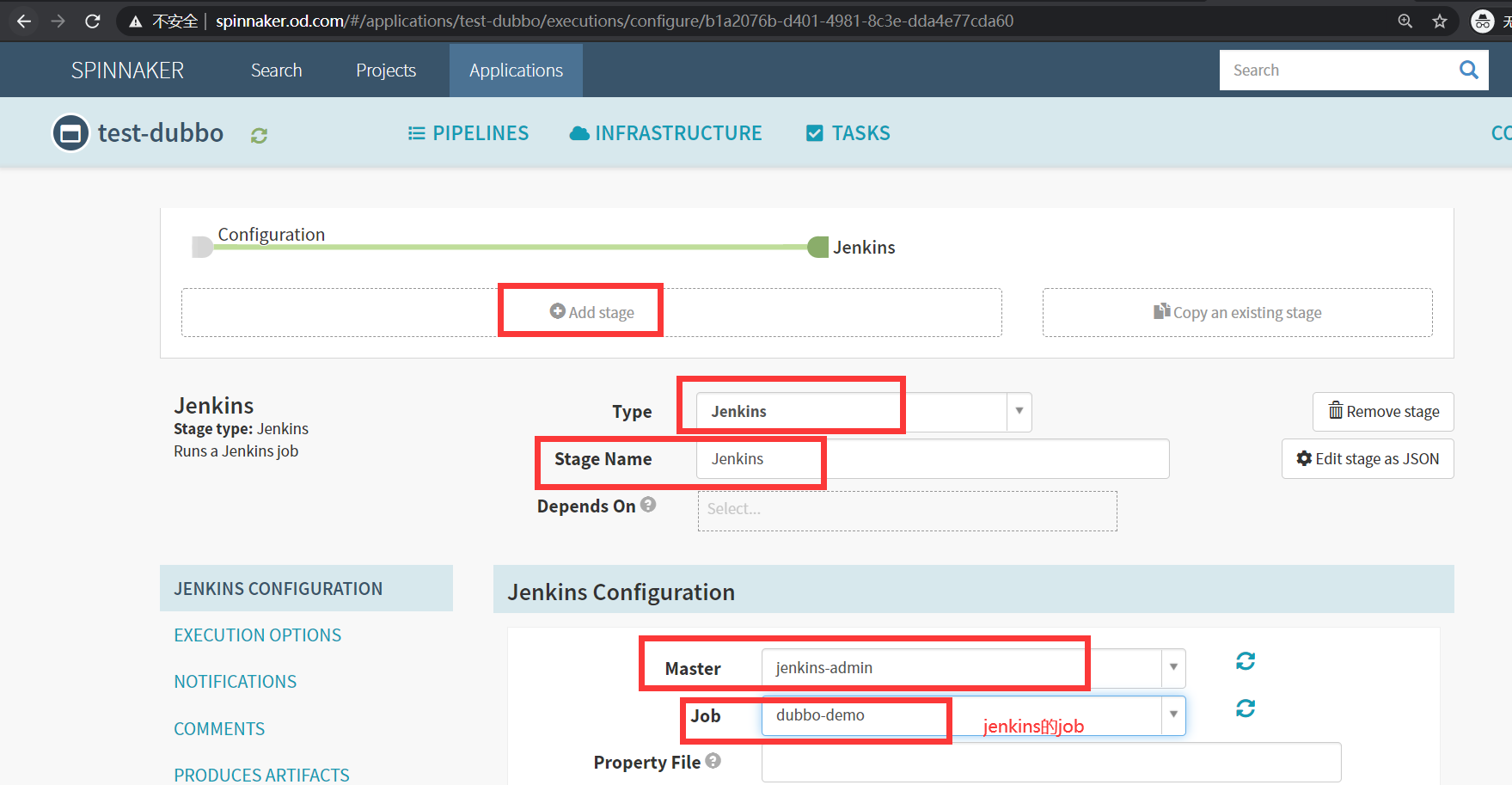

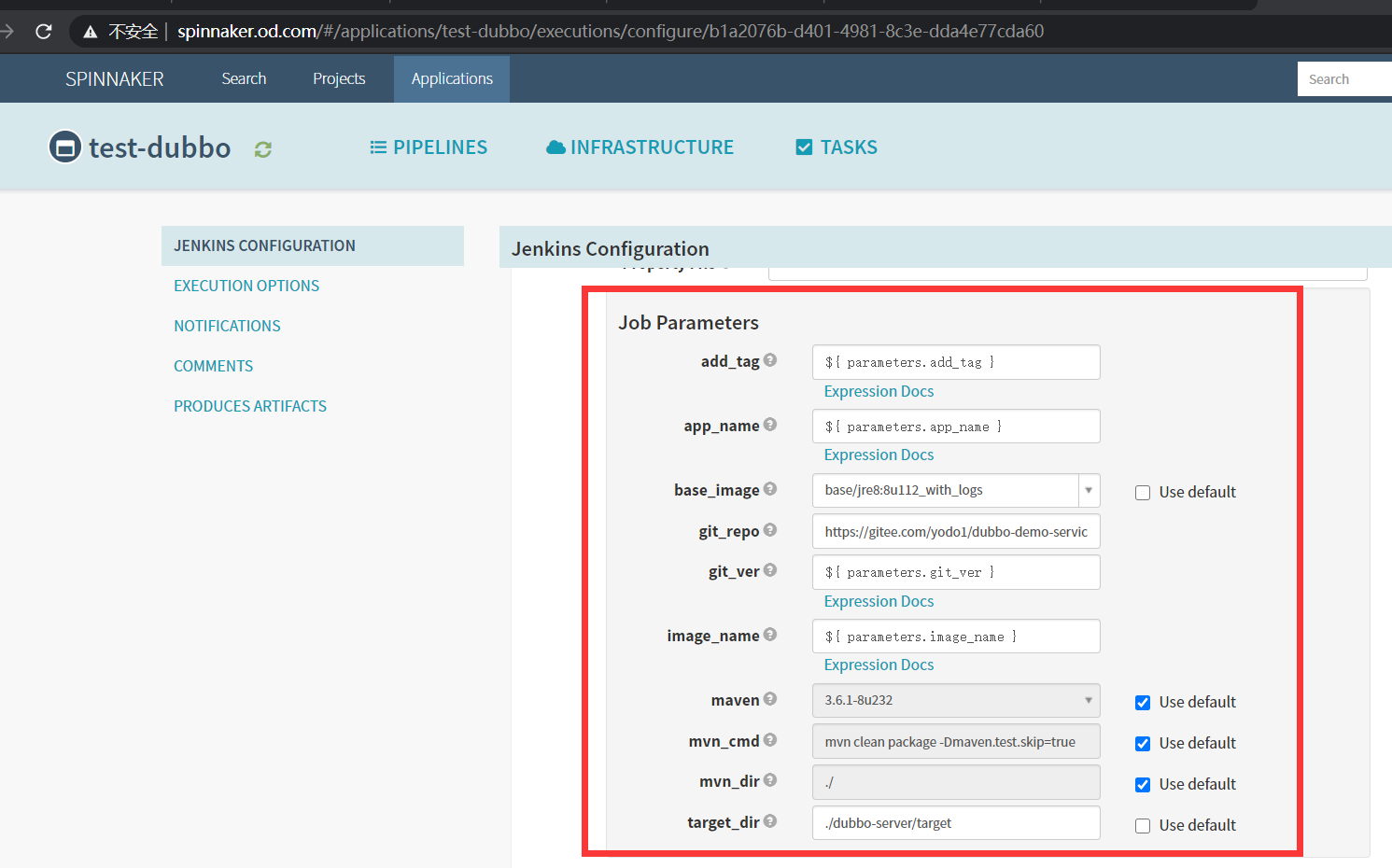

- 4.增加一个流水线的阶段

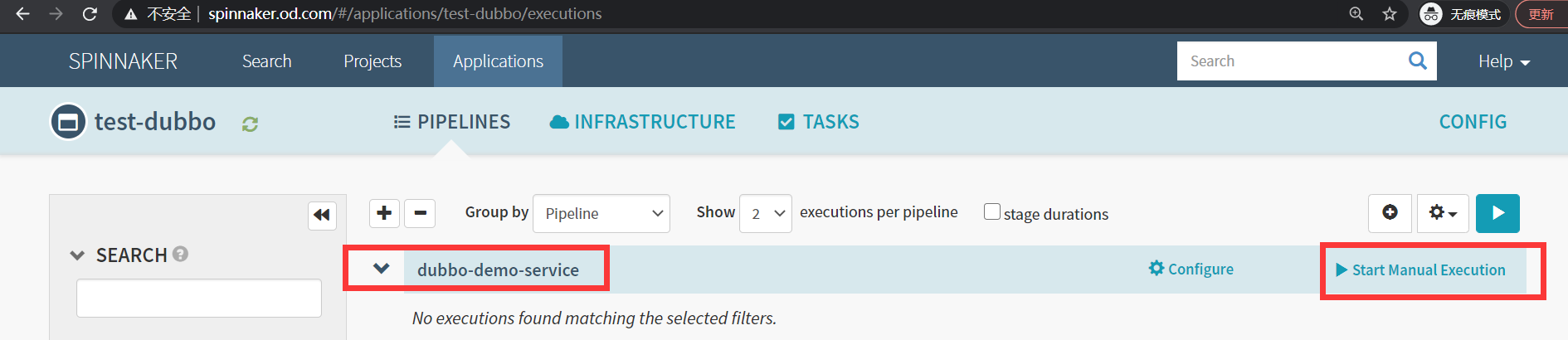

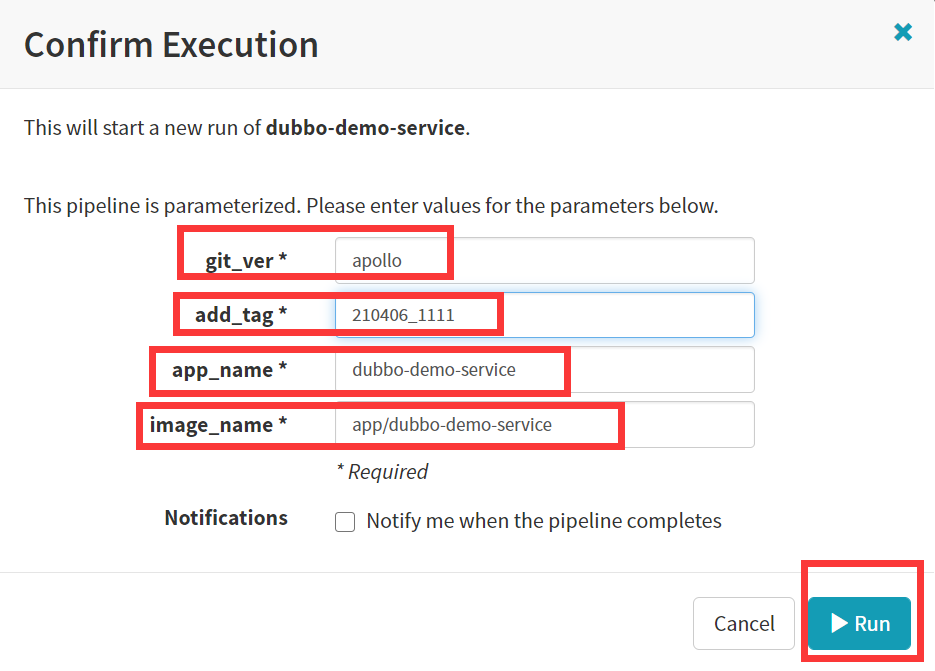

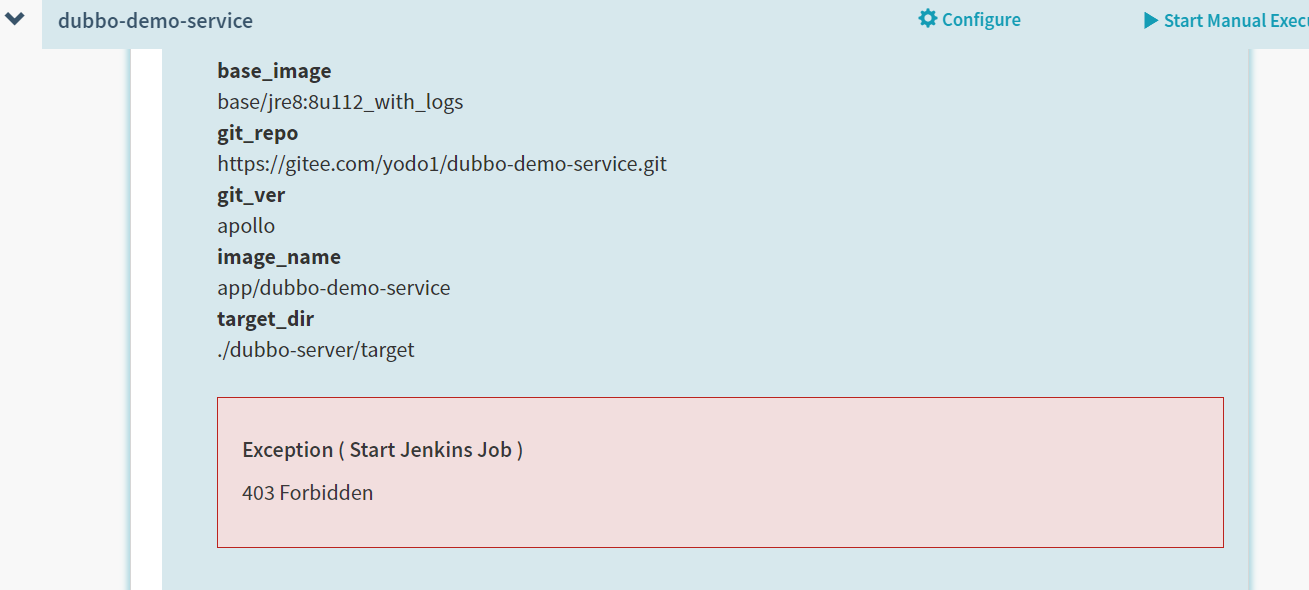

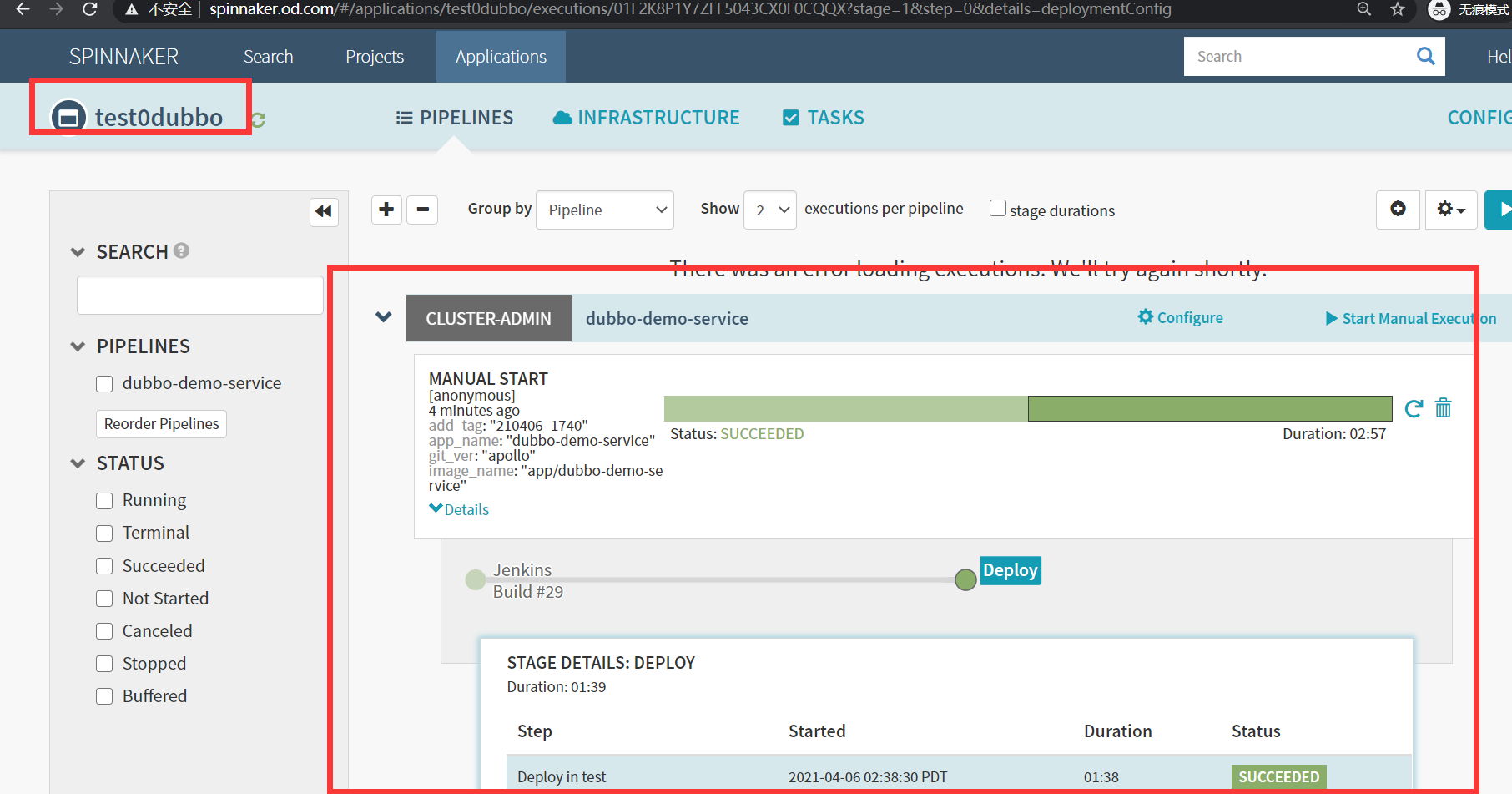

- 5.运行流水线

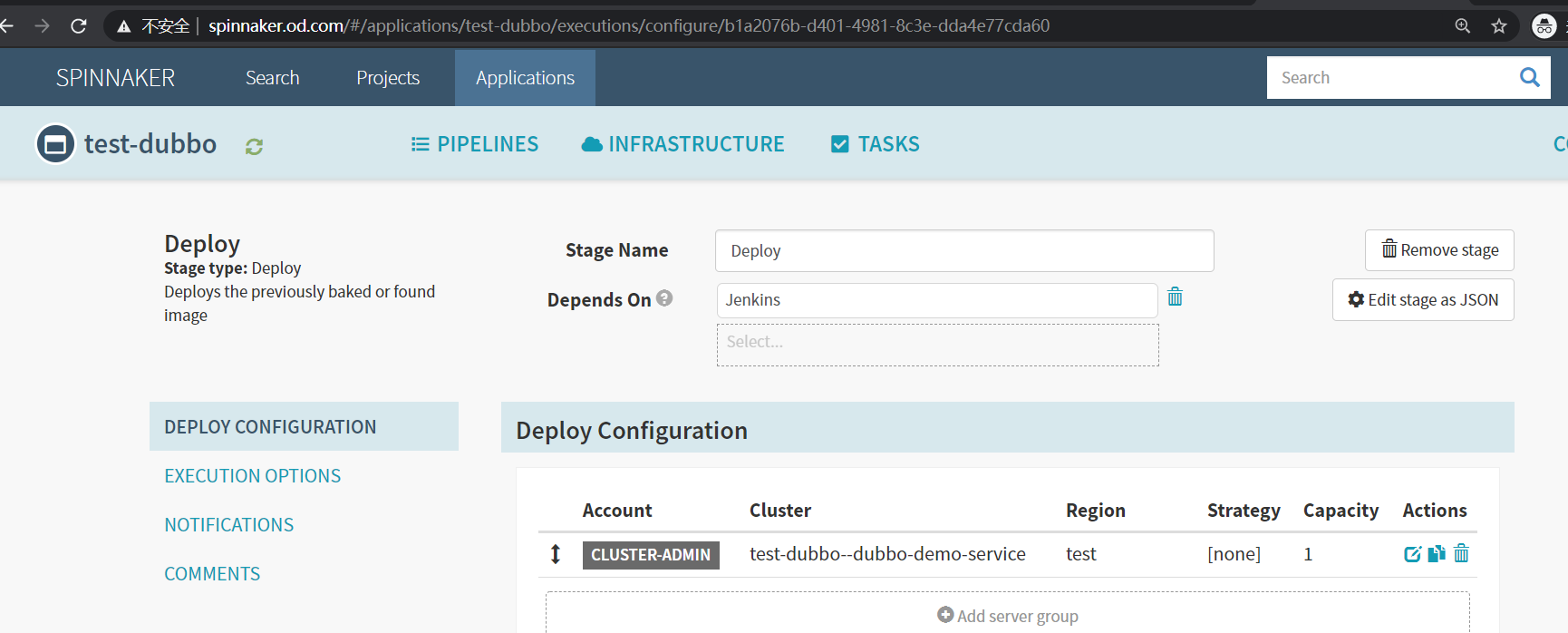

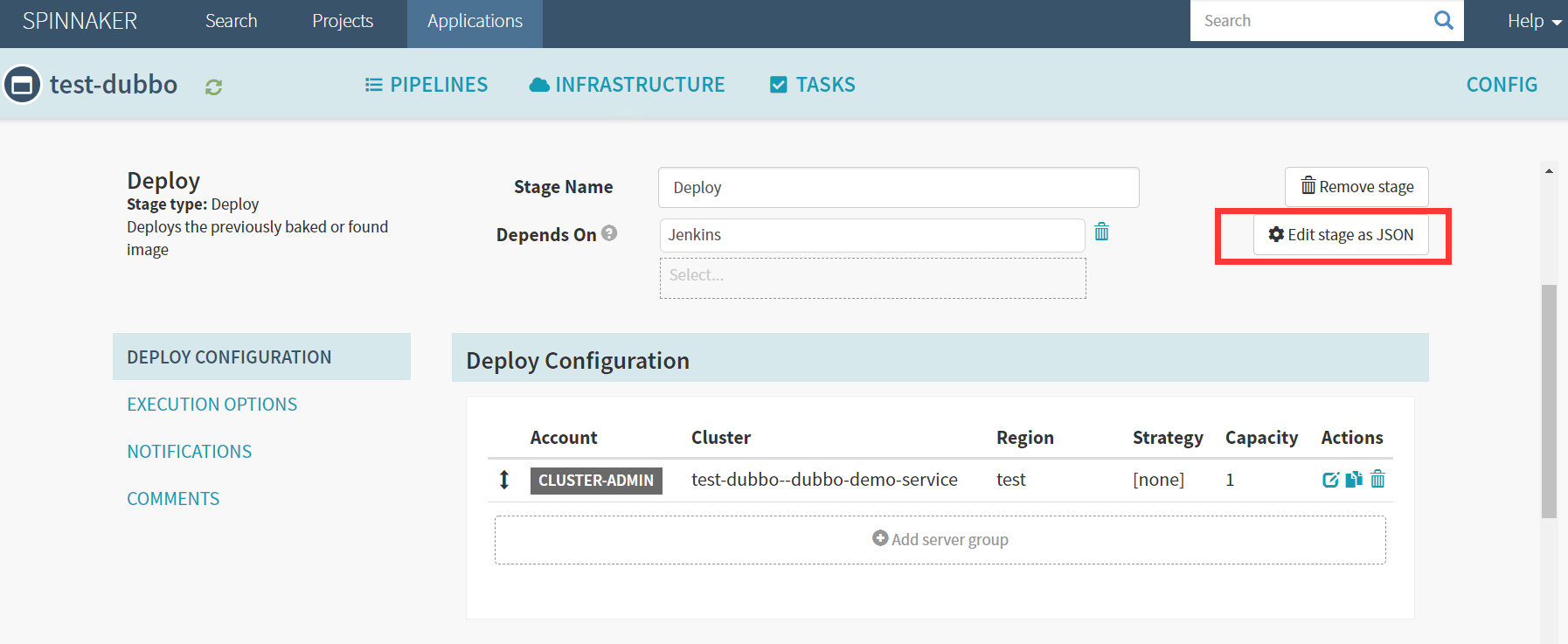

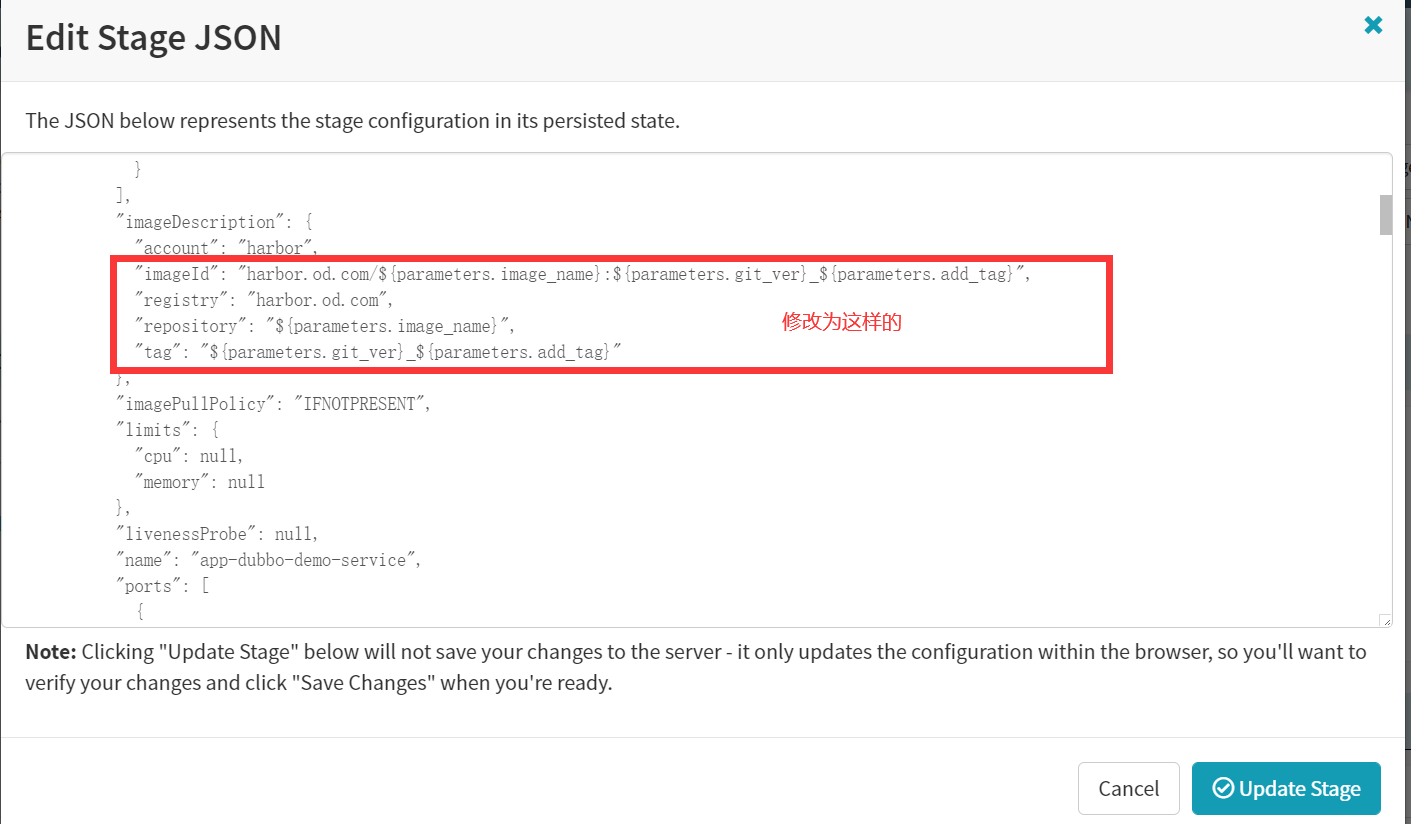

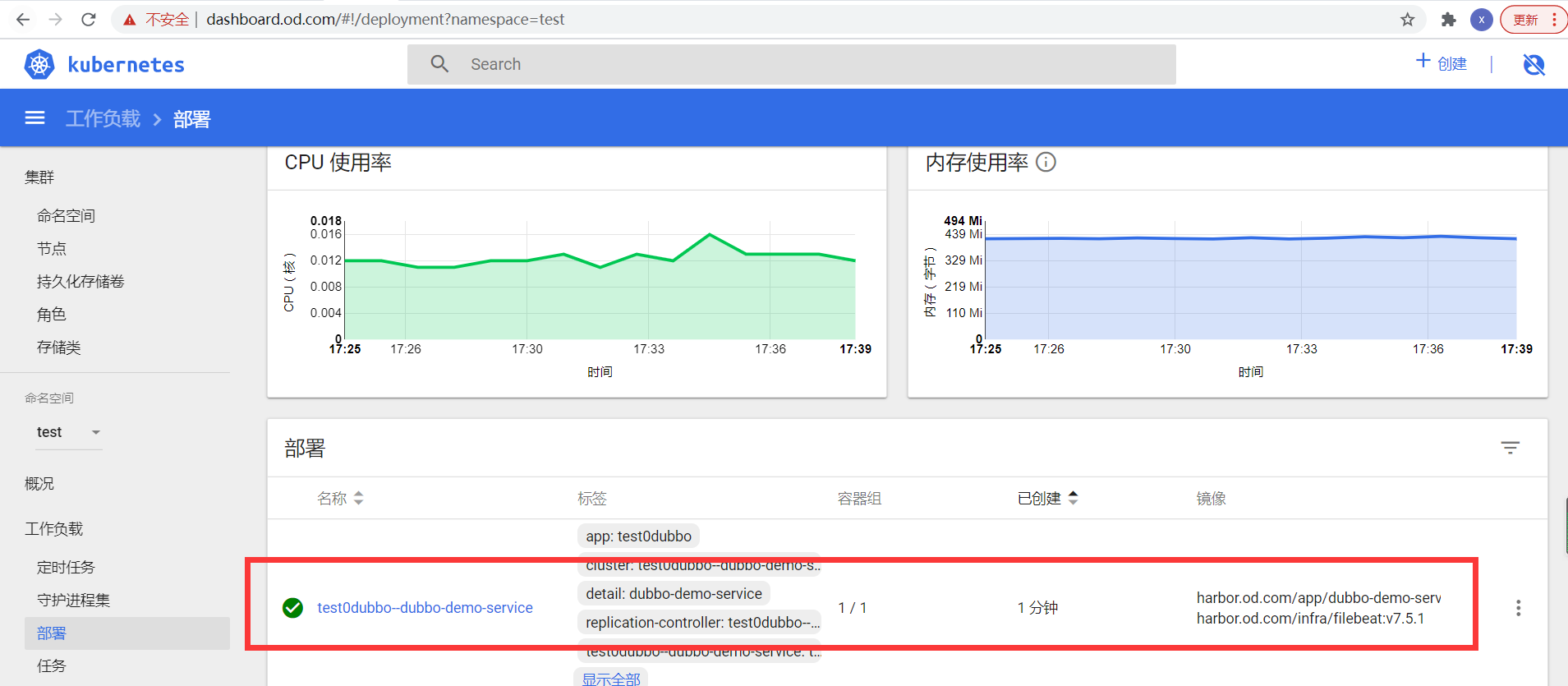

- 第六章:实战配置、使用Spinnaker配置dubbo服务提供者项目(CD的过程)

- 第七章:实战配置、使用Spinnaker配置dubbo服务消费者项目

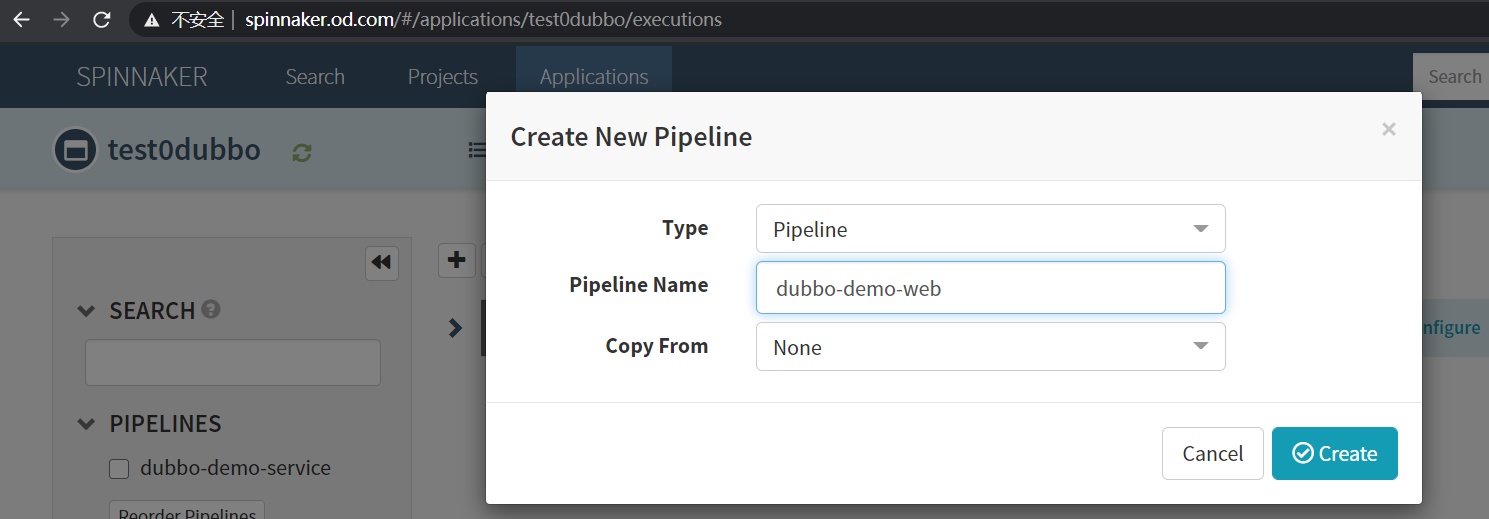

- 1.用test0dubbo应用集

- 2.创建pipelines

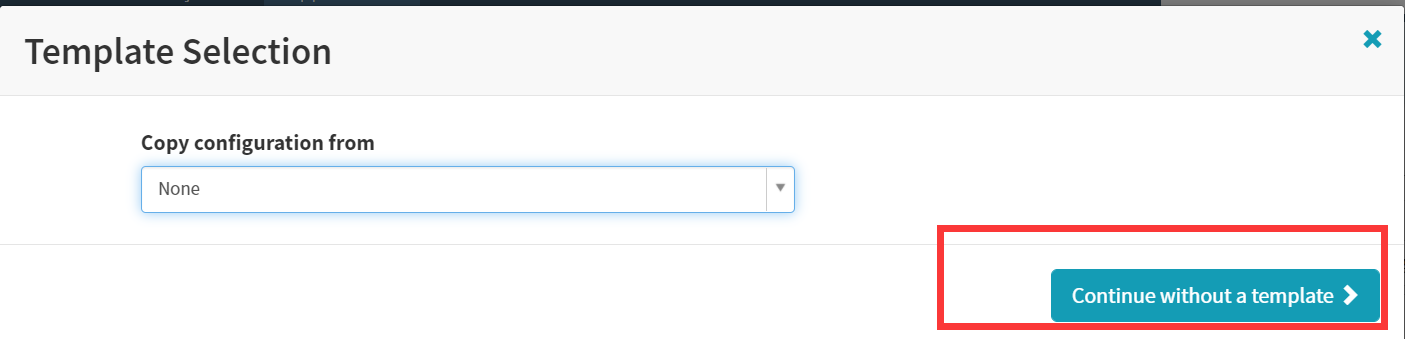

- 3.配置加4个参数

- 4.增加一个流水线的阶段(CI过程)

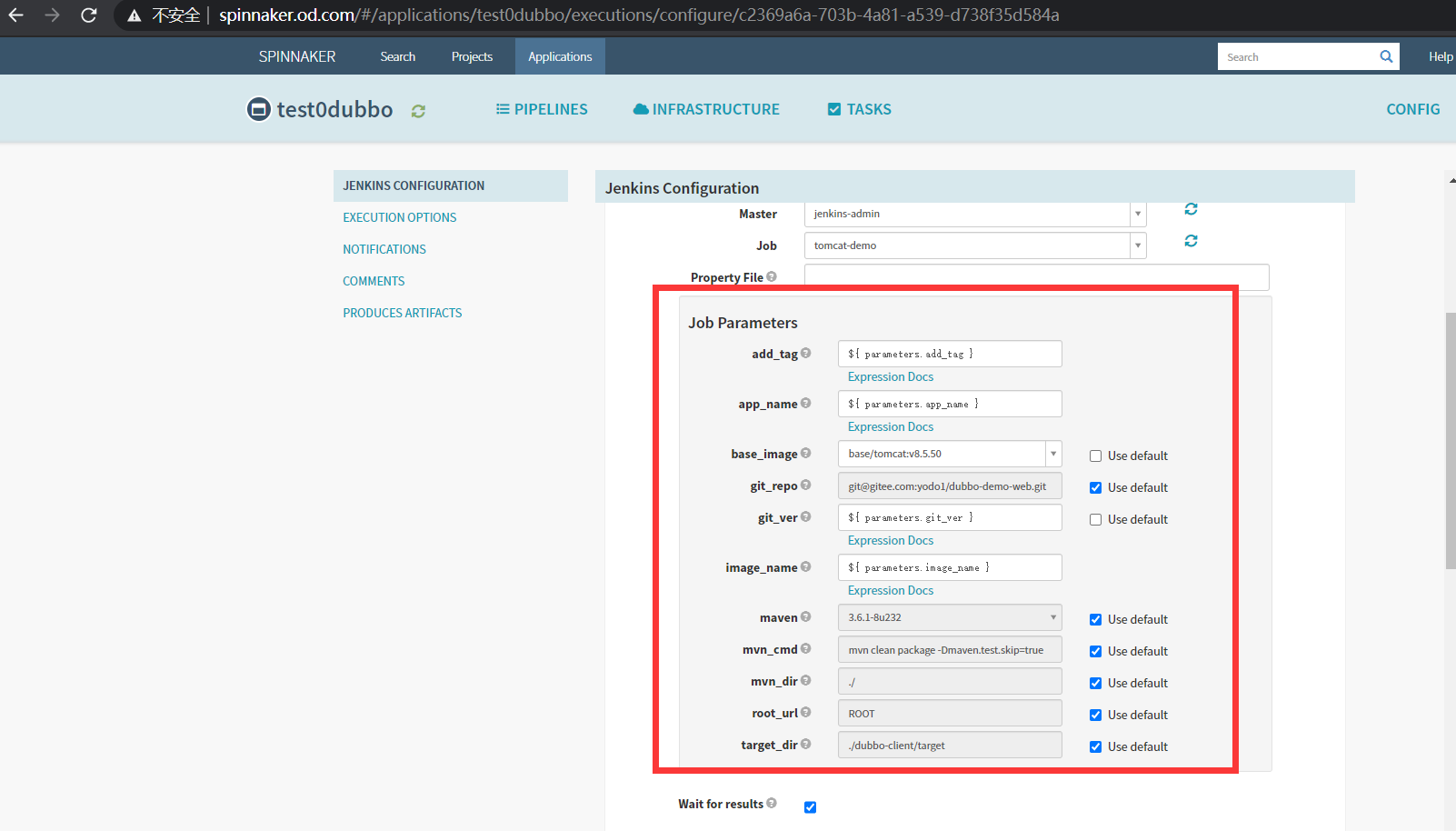

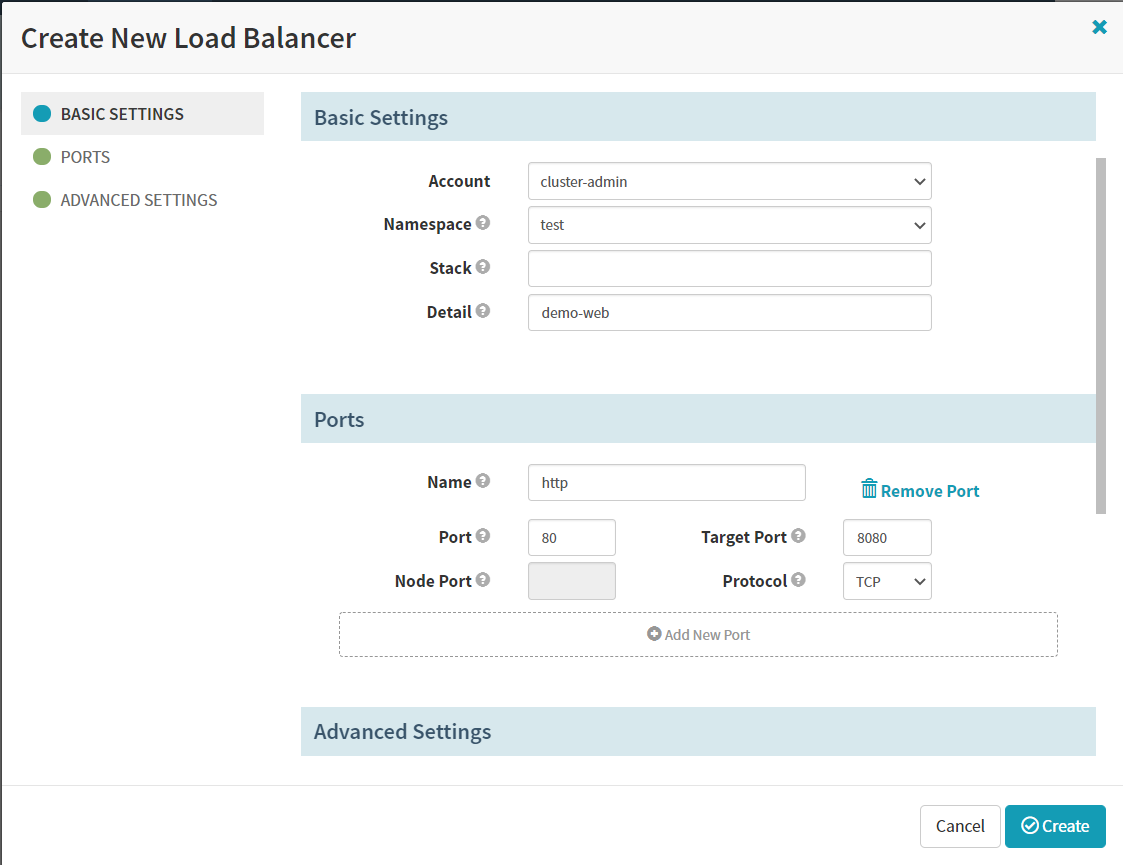

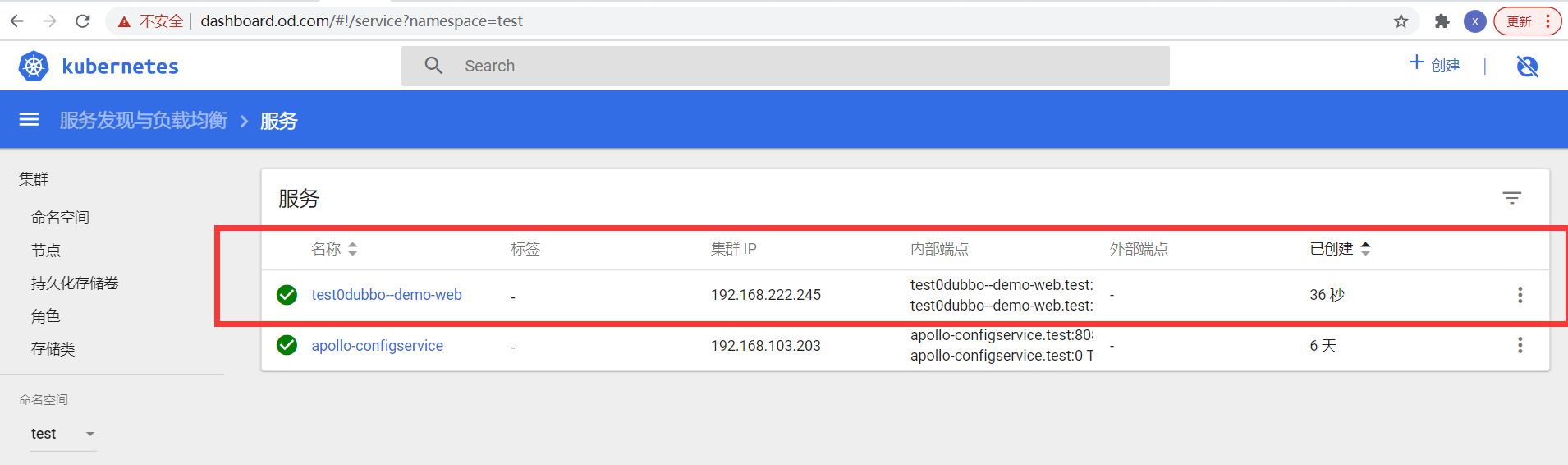

- 5.配置service

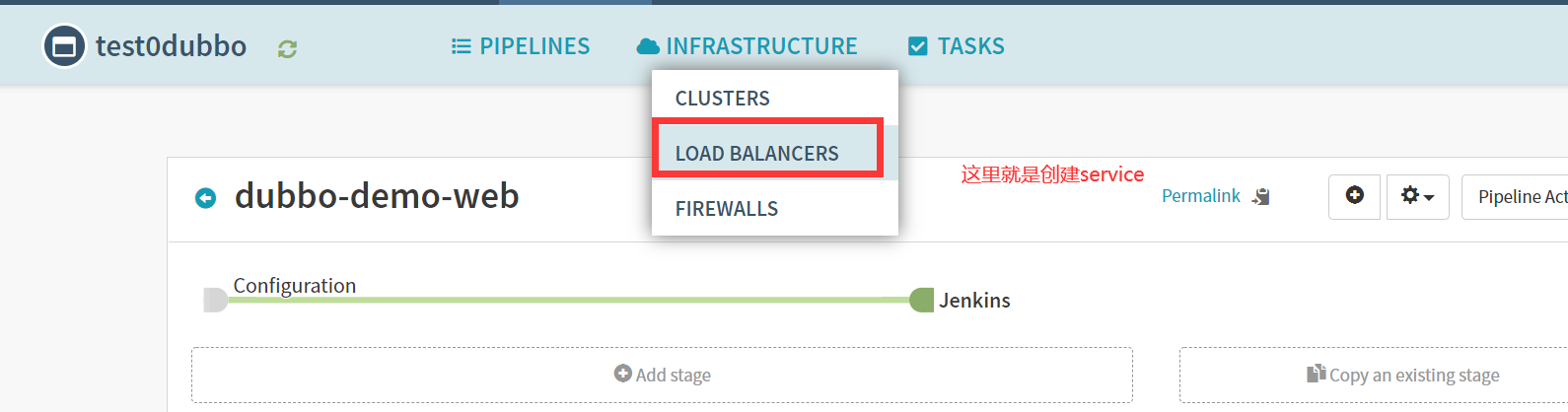

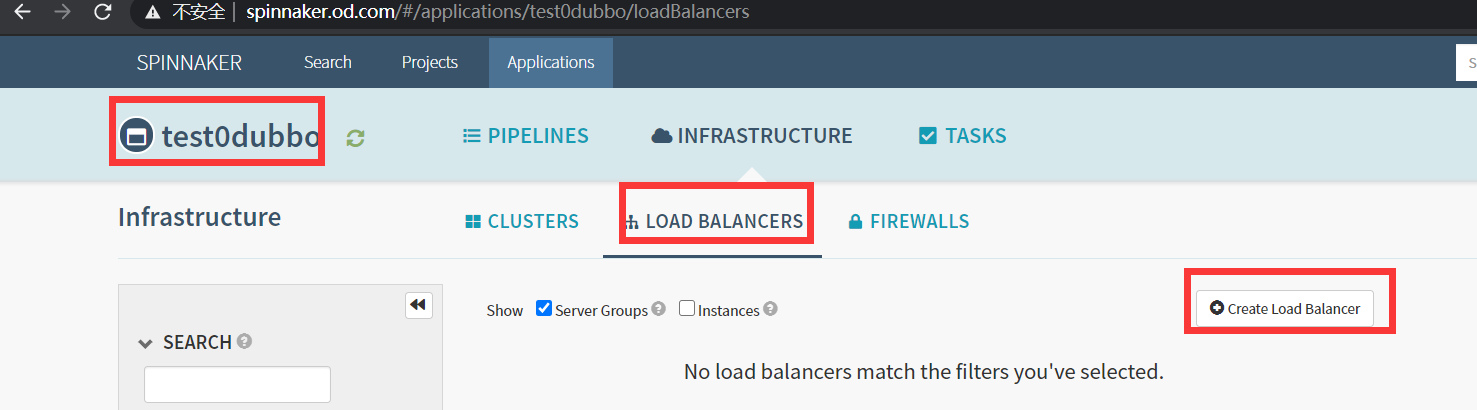

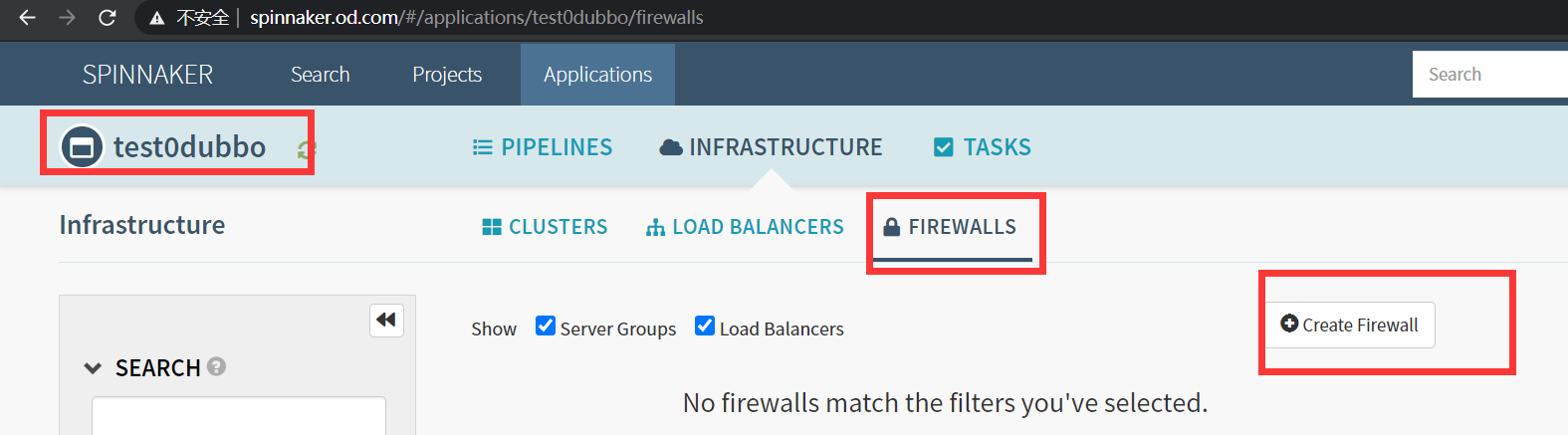

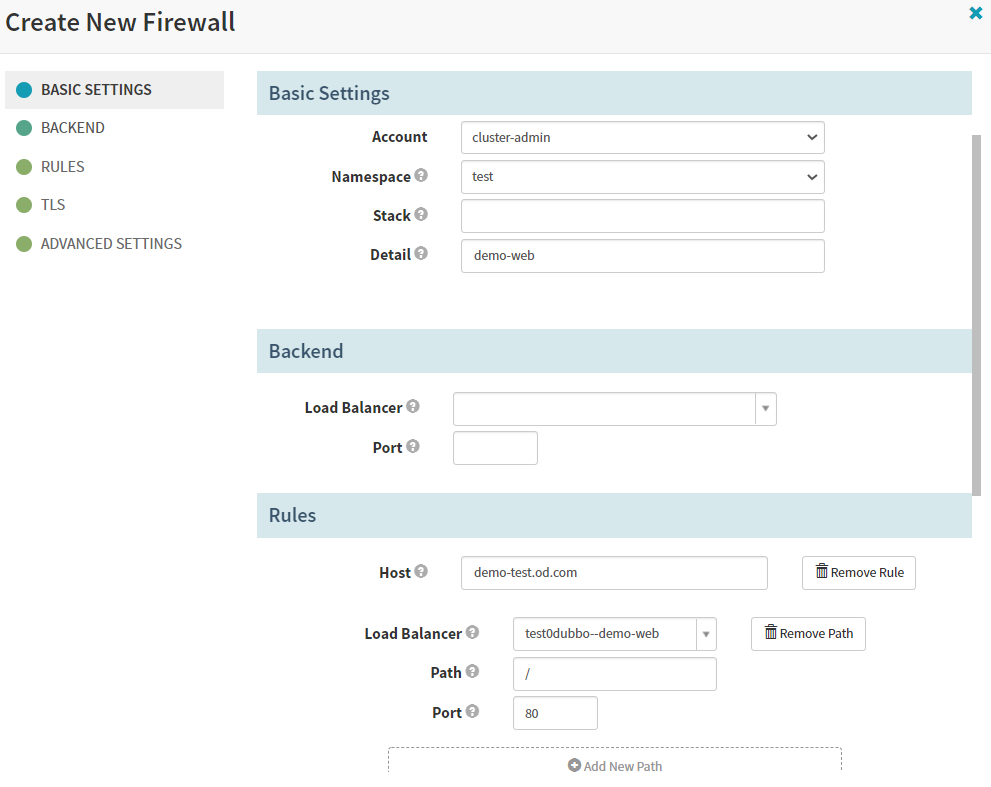

- 6.创建ingress

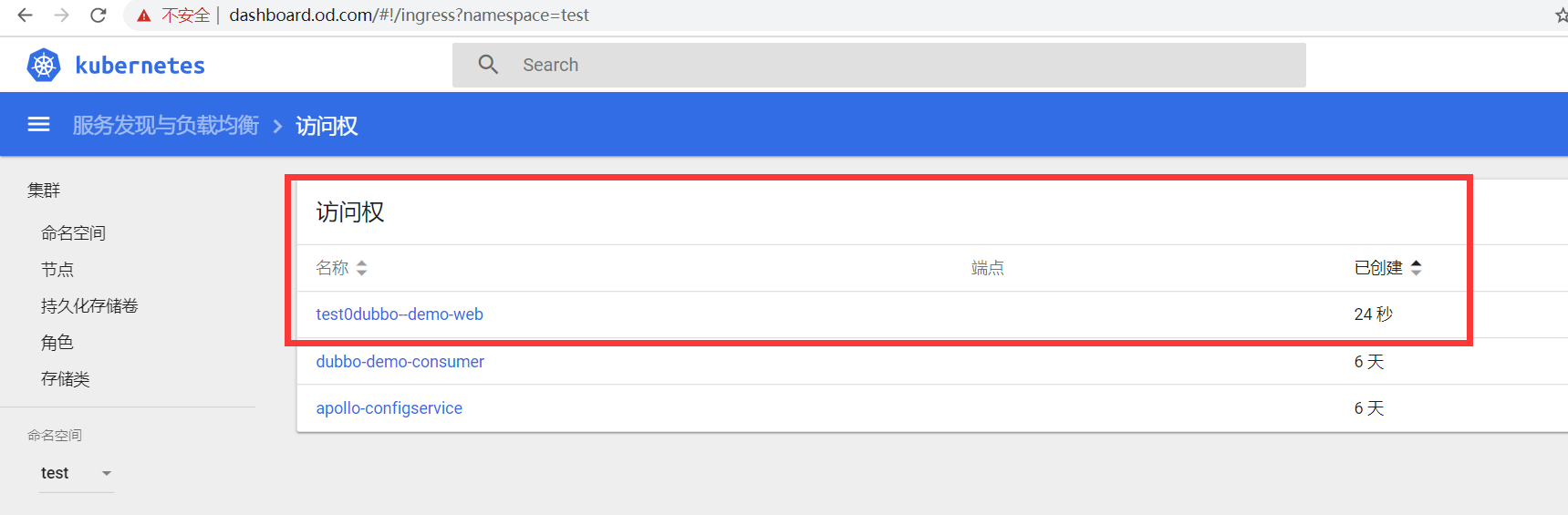

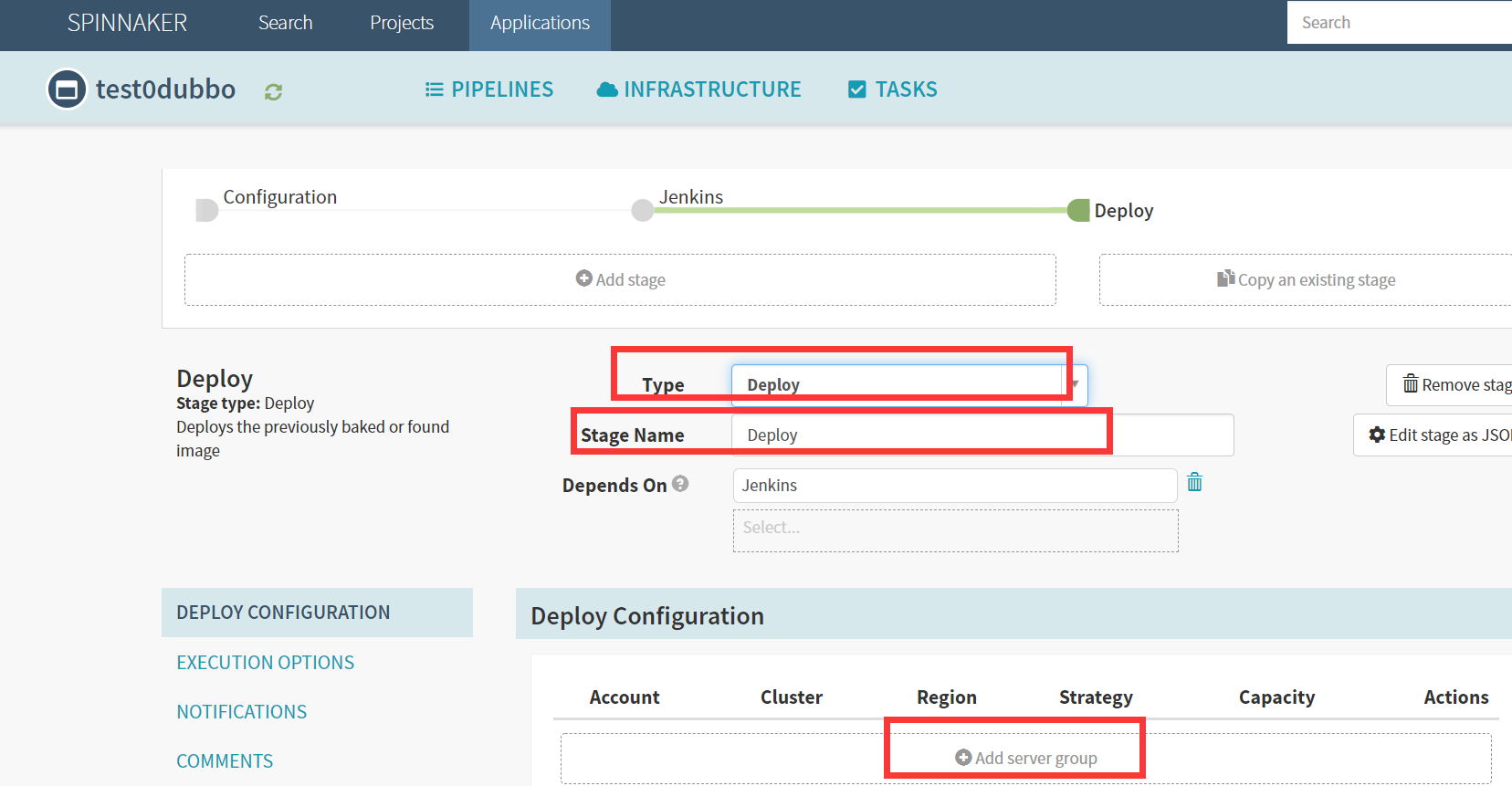

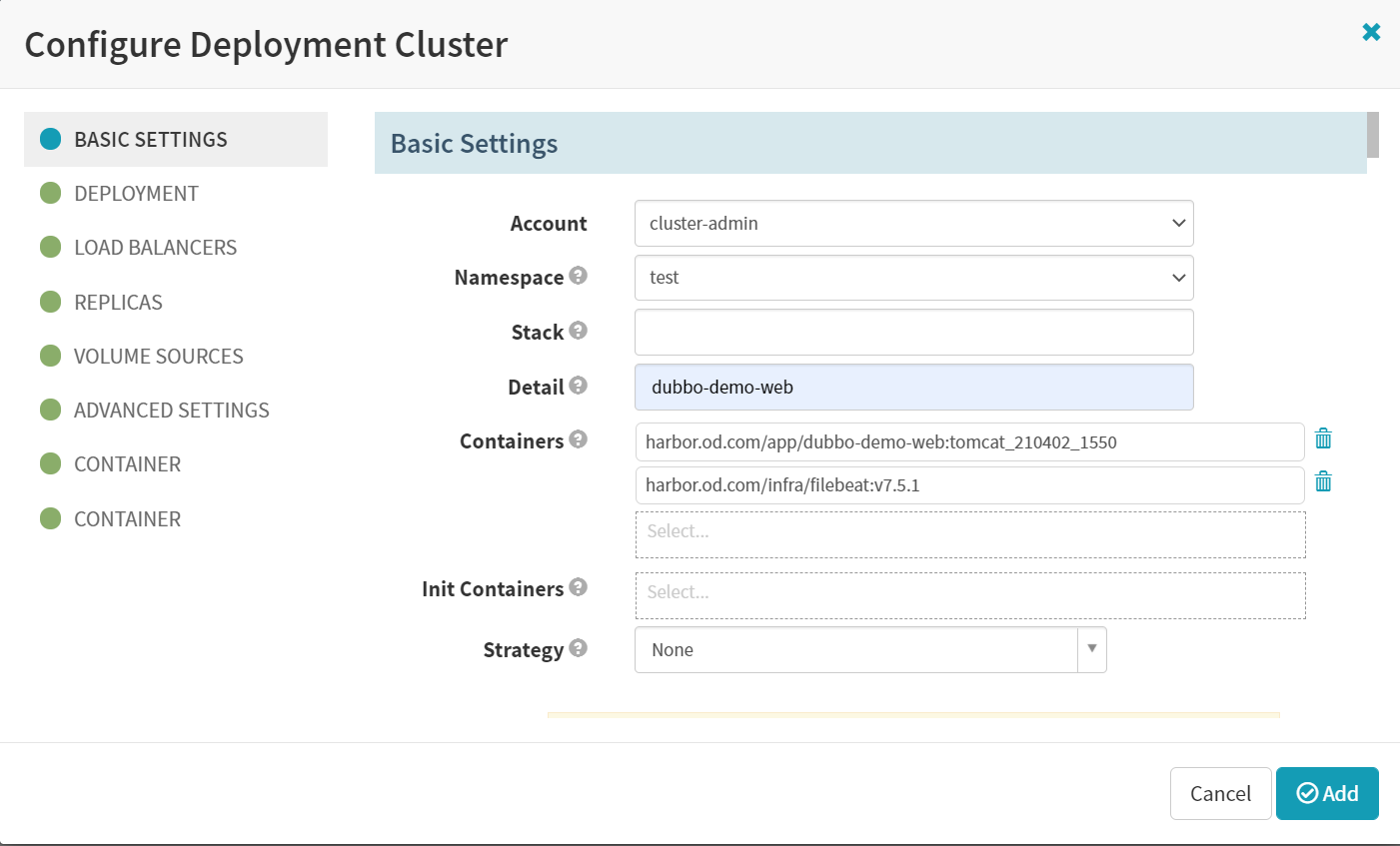

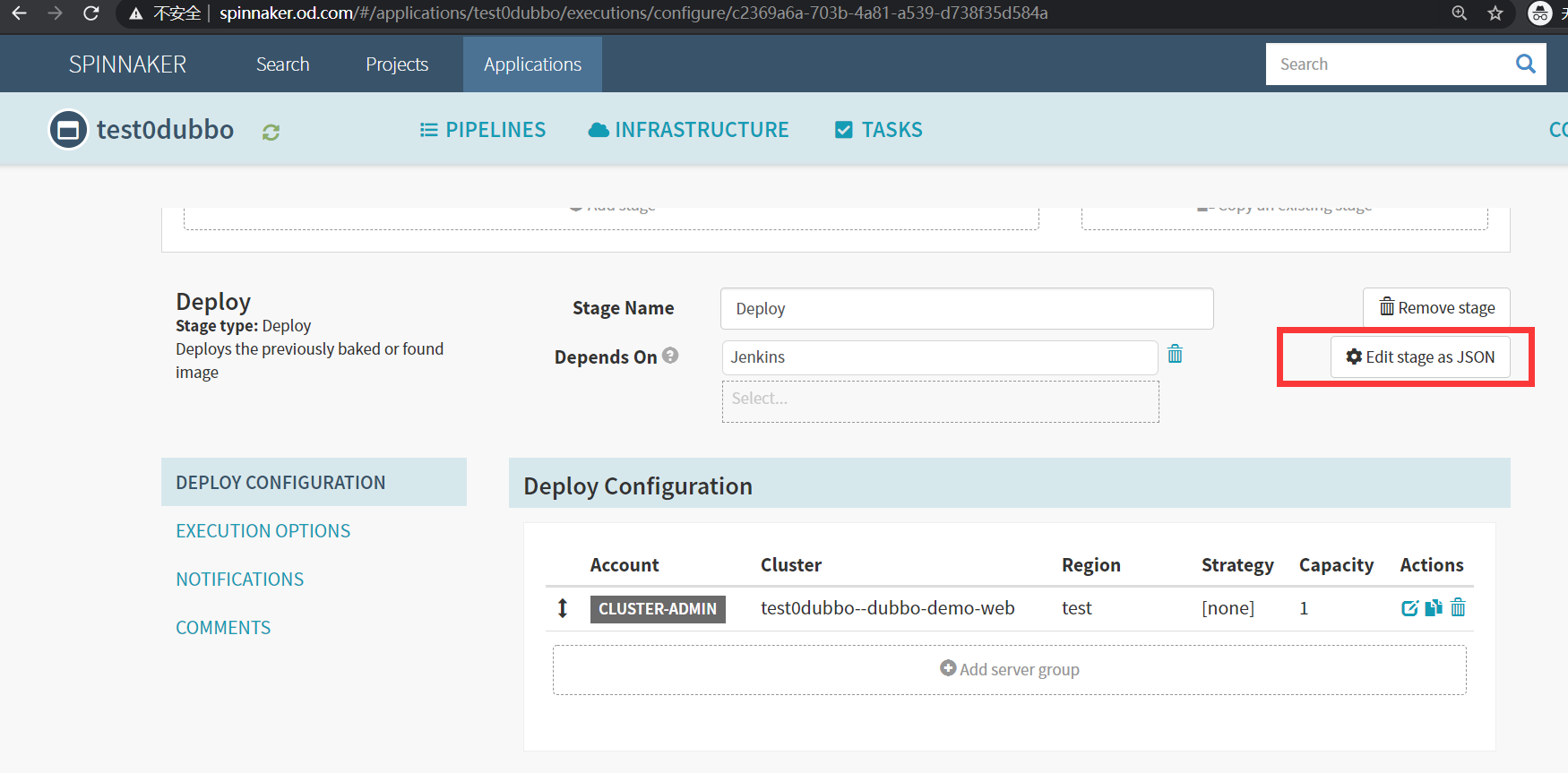

- 7.deploy

- 第八章:发布到正式环境

- 1.创建正式环境的应用集-prod0dubbo

- 2.配置加4个参数(Parameters)(dubbo-demo-service为例)

- 3.参考第六章的CD过程

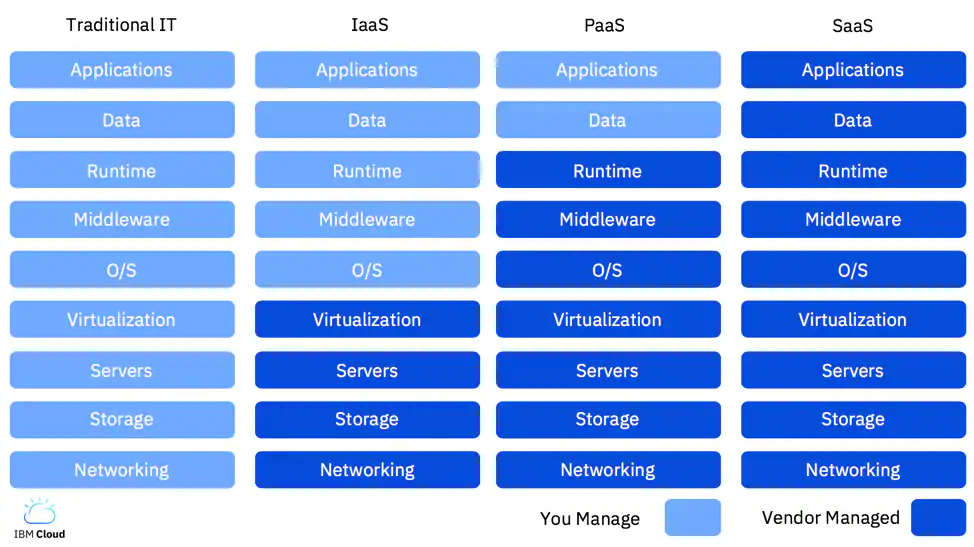

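Chapter 1: Container Cloud Overview

- IaaS is the lowest layer of cloud services; it mainly provides basic resources such as compute, storage, and networking.

- PaaS provides a platform for deploying software. It abstracts away hardware and operating-system details and can scale seamlessly, so developers only need to focus on their own business logic, not on the underlying layers.

- SaaS hands the development, management, and deployment of software to a third party. Users do not need to deal with technical issues and can use the software out of the box.

What Kubernetes is not

Kubernetes is not a traditional, all-inclusive PaaS (Platform as a Service) system.

- Kubernetes does not limit the types of applications it supports. It does not restrict application frameworks or supported language runtimes (for example Java, Python, Ruby), it accommodates 12-factor applications, and it does not distinguish between "apps" and "services".

Kubernetes supports a wide variety of workloads, including stateless, stateful, and data-processing applications. If an application can run in a container, it should run well on Kubernetes.

- Kubernetes does not provide middleware (such as message buses), data-processing frameworks (such as Spark), databases (such as MySQL), or cluster storage systems (such as Ceph) as built-in services. All of these can, however, run on Kubernetes.

- Kubernetes does not deploy source code and does not build applications. Continuous integration (CI) workflows are an area where different users have different requirements and preferences, so Kubernetes supports layering CI workflows on top of it but does not dictate how they should work.

- Kubernetes lets users choose their own logging, monitoring, and alerting systems.

- Kubernetes does not provide or mandate a comprehensive application configuration language or system (for example, jsonnet).

- Kubernetes does not provide or adopt any comprehensive machine configuration, maintenance, management, or self-healing systems.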

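- More and more cloud vendors are building PaaS platforms on top of Kubernetes.

- Prerequisites for gaining PaaS capabilities:
  - A unified application runtime environment (Docker)
  - IaaS capability (Kubernetes)
  - Reliable middleware and database clusters (mainly the DBA's job)
  - A distributed storage cluster (mainly the storage engineer's job)
  - Suitable monitoring and logging systems (Prometheus, ELK)
  - A complete CI/CD system

- Common Kubernetes-based CD systems:
  - In-house (self-developed) systems
  - Argo CD
  - OpenShift
  - Spinnaker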

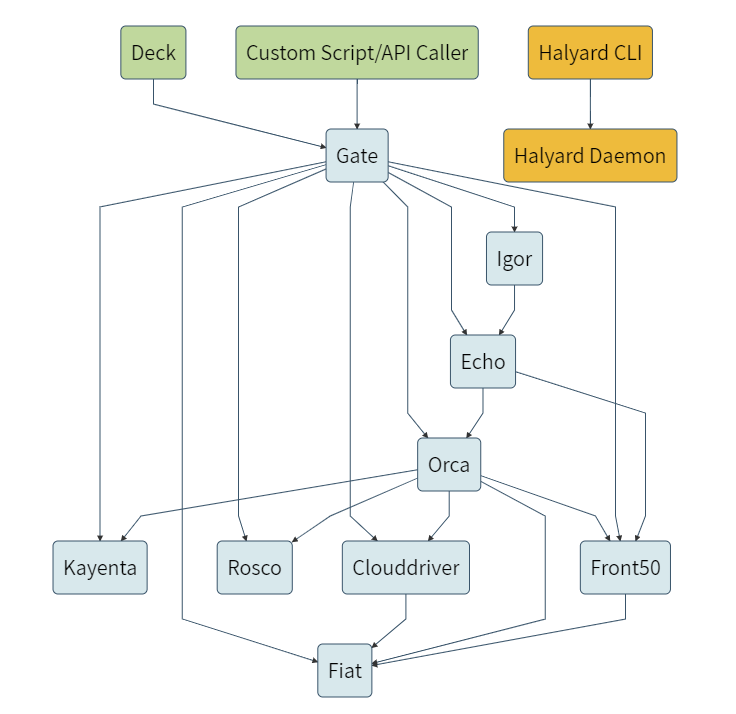

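Chapter 2: Spinnaker Overview

Spinnaker is a continuous delivery platform that Netflix open-sourced in 2015. It inherits the strengths of Asgard, Netflix's previous-generation web-based cloud management and deployment tool, while dropping designs that had become outdated as the company's business and technology evolved. It makes the continuous delivery system more reusable, provides a stable and reliable API, gives a global view of infrastructure and applications, is simpler to configure, manage, and operate, and remains fully compatible with Asgard. In short, for Netflix, Spinnaker is a considerably more capable continuous delivery platform.

1. Main features

- Cluster management

Cluster management is about managing cloud resources. The "cloud" Spinnaker refers to can be understood in the AWS sense, that is, mainly IaaS resources such as OpenStack, Google Cloud, and Microsoft Azure. Support for containers and Kubernetes was added later, but they are still managed following the same infrastructure-management model.

- Deployment management

Managing the deployment process is Spinnaker's core function: it deploys the images built by Jenkins pipelines to the Kubernetes cluster so that the services actually run.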

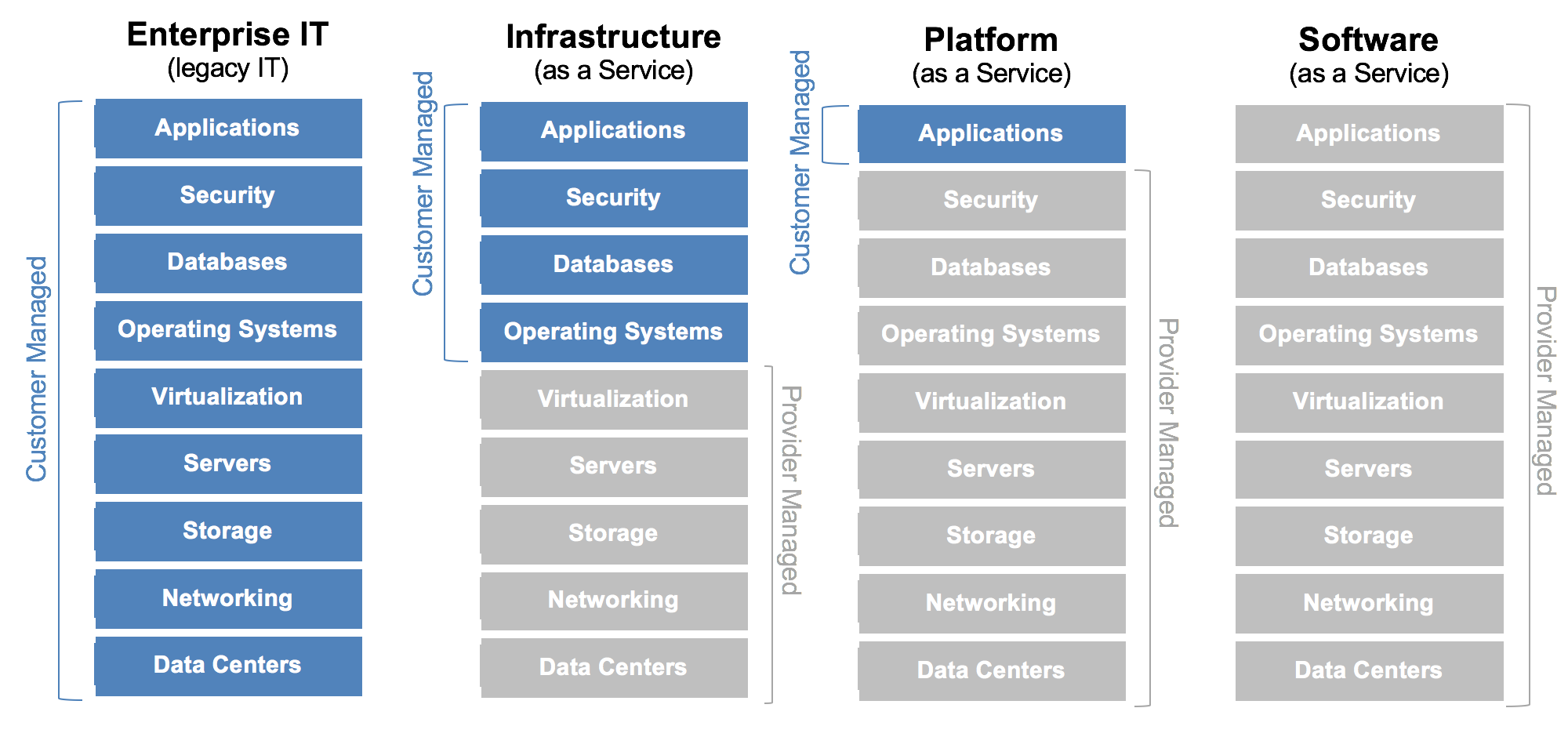

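Chapter 3: Architecture of the Automated Operations Platform

1. Logical architecture diagram

2. Understanding the architecture diagram

- Deck is the browser-based UI.

- Gate is the API gateway. The Spinnaker UI and all API callers communicate with Spinnaker through Gate.

- Clouddriver manages the cloud platforms and indexes/caches all deployed resources.

- Front50 handles data persistence: it stores the metadata of applications, pipelines, projects, and notifications.

- Igor triggers pipelines from continuous integration jobs in systems such as Jenkins and Travis CI, and allows Jenkins/Travis stages to be used in pipelines.

- Orca is the orchestration engine. It handles all ad hoc operations and pipelines.

- Rosco is the "bakery": it produces immutable virtual machine images (or image templates) for the cloud providers.

- Kayenta provides automated canary analysis for Spinnaker.

- Fiat is Spinnaker's authorization service.

- Echo is Spinnaker's eventing service. It sends notifications (for example Slack, email, SMS) and handles incoming webhooks from services such as GitHub.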
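In this walkthrough each backend service is exposed as an `armory-<name>` Service on its own port (see the manifests in Chapter 4). The sketch below gathers those ports in one place. The Gate port is taken from the `nginx.conf` in the custom-config ConfigMap, and assuming Gate also answers on `/health` is a guess. Rosco, Kayenta and Fiat are not deployed here, so they are omitted. `spin_port` and `health_url` are hypothetical helper names.

```shell
#!/bin/sh
# spin_port COMPONENT: print the Service port of armory-<component> as used in Chapter 4.
# Gate's 8084 comes from the nginx.conf proxy_pass; its /health path is an assumption.
spin_port() {
  case "$1" in
    clouddriver) echo 7002 ;;
    front50)     echo 8080 ;;
    orca)        echo 8083 ;;
    echo)        echo 8089 ;;
    igor)        echo 8088 ;;
    gate)        echo 8084 ;;
    *)           return 1 ;;
  esac
}

# health_url COMPONENT: print the in-cluster health URL of armory-<component>,
# or fail with a message for components not deployed in this walkthrough.
health_url() {
  local port
  if ! port="$(spin_port "$1")"; then
    echo "unknown component: $1" >&2
    return 1
  fi
  printf 'http://armory-%s:%s/health\n' "$1" "$port"
}
```

For example, from inside a pod in the `armory` namespace (as in section 3.6 of Chapter 4), `curl -s "$(health_url clouddriver)"` queries the same endpoint as the manual check.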

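3. Choosing a deployment approach

Official site: https://spinnaker.io/

Spinnaker consists of many components and is relatively complex to deploy, so the project provides a scaffolding tool, Halyard. Unfortunately, some of the images it pulls are blocked in mainland China.

Armory distribution: https://www.armory.io/

Several companies have built distributions on top of Spinnaker to simplify its deployment; here we use the Armory distribution.

Armory also has its own scaffolding tool. It is simpler than Halyard, but some of its images are still blocked.

We therefore deploy the Armory distribution of Spinnaker by hand.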

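Chapter 4: Installing and Deploying the Spinnaker Microservice Cluster

1. Deploy the object storage component: MinIO

On the ops host HDSS7-200.host.com:

1.1. Download the image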
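Every image in this chapter goes through the same pull, tag, and push sequence. The commands below tag by image ID (for example `6c897135cbab`), so you have to copy the ID from `docker images`, and your ID may differ from the one shown. Below is a minimal sketch that tags by name instead. The function name `mirror_image` and the `DOCKER` override, which enables a dry run, are illustrative and not part of the original setup.

```shell
#!/bin/sh
# mirror_image SRC DST: pull SRC, retag it as DST for the private Harbor, push DST.
# Tagging by name (not by image ID) works for any image that has just been pulled.
# Set DOCKER=echo to print the three commands instead of running them.
mirror_image() {
  local src="$1" dst="$2"
  local docker_cmd="${DOCKER:-docker}"
  $docker_cmd pull "$src" &&
    $docker_cmd tag "$src" "$dst" &&
    $docker_cmd push "$dst"
}
```

For Redis in section 2.1, for instance, this would be `mirror_image redis:4.0.14 harbor.od.com/armory/redis:v4.0.14`.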

# 1. Log in to Harbor and create a private project named armory (steps omitted)

# 2. Create the NFS directory that will hold MinIO's data

[root@hdss7-200 ~]# mkdir /data/nfs-volume/minio

[root@hdss7-200 ~]# docker pull minio/minio:latest

[root@hdss7-200 ~]# docker tag 6c897135cbab harbor.od.com/armory/minio:latest

[root@hdss7-200 ~]# docker push harbor.od.com/armory/minio:latest

1.2. Prepare the resource manifests

- dp.yaml

[root@hdss7-200 ~]# mkdir /data/k8s-yaml/armory/minio -p

[root@hdss7-200 ~]# cd /data/k8s-yaml/armory/minio/

[root@hdss7-200 minio]# cat dp.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  labels:
    name: minio
  name: minio
  namespace: armory
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      name: minio
  template:
    metadata:
      labels:
        app: minio
        name: minio
    spec:
      containers:
      - name: minio
        image: harbor.od.com/armory/minio:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 9000
          protocol: TCP
        args:
        - server
        - /data
        env:
        - name: MINIO_ACCESS_KEY
          value: admin
        - name: MINIO_SECRET_KEY
          value: admin123
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /minio/health/ready
            port: 9000
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 5
        volumeMounts:
        - mountPath: /data
          name: data
      imagePullSecrets:
      - name: harbor
      volumes:
      - nfs:
          server: hdss7-200
          path: /data/nfs-volume/minio
        name: data

- svc.yaml

[root@hdss7-200 minio]# cat svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: minio
  namespace: armory
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 9000
  selector:
    app: minio

- ingress.yaml

[root@hdss7-200 minio]# cat ingress.yaml

kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: minio
  namespace: armory
spec:
  rules:
  - host: minio.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: minio
          servicePort: 80

1.3. Configure DNS

On the DNS server, add an A record for minio to the od.com zone, then restart named and verify:

[root@hdss7-11 ~]# tail -1 /var/named/od.com.zone
minio A 10.4.7.10
[root@hdss7-11 ~]# systemctl restart named
[root@hdss7-11 ~]# dig -t A minio.od.com +short
10.4.7.10
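The transcript shows only the result of the zone-file edit, not the edit itself. Below is a sketch of an idempotent way to append the record. It assumes the zone file `/var/named/od.com.zone` used above and relative record names like the one shown. The helper name `add_a_record` is hypothetical. Increasing the SOA serial, which secondary servers need in order to pick up the change, and restarting named are still manual steps.

```shell
#!/bin/sh
# add_a_record ZONEFILE NAME IP: append "NAME A IP" to a BIND zone file unless an
# A record for NAME already exists, so running it twice is harmless.
# The SOA serial is NOT incremented here.
add_a_record() {
  local zone="$1" name="$2" ip="$3"
  # Matches "NAME [TTL] [IN] A ..." at the start of a line.
  if grep -Eq "^${name}[[:space:]]+([0-9]+[[:space:]]+)?(IN[[:space:]]+)?A[[:space:]]" "$zone"; then
    echo "A record for ${name} already exists in ${zone}" >&2
    return 0
  fi
  printf '%-18s A    %s\n' "$name" "$ip" >> "$zone"
}
```

Usage on the DNS host would be `add_a_record /var/named/od.com.zone minio 10.4.7.10`, followed by the `systemctl restart named` shown above.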

1.4. Apply the resource manifests

# 1. Create the namespace first

[root@hdss7-21 ~]# kubectl create ns armory

# 2. Create the Harbor image-pull secret (referenced by imagePullSecrets in the manifests)

[root@hdss7-21 ~]# kubectl create secret docker-registry harbor --docker-server=harbor.od.com --docker-username=admin --docker-password=Harbor12345 -n armory

# 3. Apply the resource manifests

[root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/armory/minio/dp.yaml

deployment.extensions/minio created

[root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/armory/minio/svc.yaml

service/minio created

[root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/armory/minio/ingress.yaml

ingress.extensions/minio created
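Every component in this chapter is applied the same way: one `kubectl apply -f` per manifest, fetched from the web server at http://k8s-yaml.od.com. A small wrapper could look like the following. It assumes the `/armory/<component>/<file>` layout used throughout this chapter. The name `apply_component` and the `KUBECTL` override (for a dry run) are illustrative. The namespace and the `harbor` secret still have to exist beforehand.

```shell
#!/bin/sh
# apply_component COMPONENT FILE...: kubectl-apply each manifest of one component
# from the k8s-yaml web server, stopping at the first failure.
# Set KUBECTL=echo to print the commands instead of running them.
YAML_BASE="${YAML_BASE:-http://k8s-yaml.od.com/armory}"

apply_component() {
  local component="$1"
  shift
  local f
  for f in "$@"; do
    ${KUBECTL:-kubectl} apply -f "${YAML_BASE}/${component}/${f}" || return 1
  done
}
```

For Redis in section 2.3, for instance, this becomes `apply_component redis dp.yaml svc.yaml`.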

1.5. Access from a browser

Visit http://minio.od.com

Username/password: admin/admin123

2. Deploy the cache component: Redis

2.1. Prepare the Docker image

On the ops host HDSS7-200.host.com:

[root@hdss7-200 ~]# docker pull redis:4.0.14

[root@hdss7-200 ~]# docker tag 191c4017dcdd harbor.od.com/armory/redis:v4.0.14

[root@hdss7-200 ~]# docker push harbor.od.com/armory/redis:v4.0.14

2.2. Prepare the resource manifests

[root@hdss7-200 ~]# mkdir /data/k8s-yaml/armory/redis

[root@hdss7-200 ~]# cd /data/k8s-yaml/armory/redis/

[root@hdss7-200 redis]# cat dp.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  labels:
    name: redis
  name: redis
  namespace: armory
spec:
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      name: redis
  template:
    metadata:
      labels:
        app: redis
        name: redis
    spec:
      containers:
      - name: redis
        image: harbor.od.com/armory/redis:v4.0.14
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 6379
          protocol: TCP
      imagePullSecrets:
      - name: harbor

[root@hdss7-200 redis]#

[root@hdss7-200 redis]# cat svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: redis
  namespace: armory
spec:
  ports:
  - port: 6379
    protocol: TCP
    targetPort: 6379
  selector:
    app: redis

2.3. Apply the resource manifests

[root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/armory/redis/dp.yaml

deployment.extensions/redis created

[root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/armory/redis/svc.yaml

service/redis created

2.4. Check Redis
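The commands that follow check the pods by reading the `kubectl get pods` table. As a sketch, an awk filter over that default table output (columns NAME, READY, STATUS, RESTARTS, AGE) can list the pods that are not fully ready. The function name is hypothetical. Parsing human-readable output is brittle across kubectl versions, so `-o jsonpath` is the sturdier choice for real automation.

```shell
#!/bin/sh
# not_ready_pods: read `kubectl get pods` table output on stdin and print the names
# of pods whose READY count is incomplete (e.g. 0/1) or whose STATUS is not Running.
not_ready_pods() {
  awk 'NR > 1 {
         split($2, r, "/")
         if (r[1] != r[2] || $3 != "Running") print $1
       }'
}
```

Typical use: `kubectl get pods -n armory | not_ready_pods` prints nothing when everything is up.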

[root@hdss7-21 ~]# kubectl get pods -n armory

NAME                     READY   STATUS    RESTARTS   AGE
minio-5ff9567d9d-j695z   1/1     Running   0          124m
redis-77ff686585-sl65n   1/1     Running   0          39s

[root@hdss7-21 ~]# kubectl get svc -n armory

NAME    TYPE        CLUSTER-IP        EXTERNAL-IP   PORT(S)    AGE
minio   ClusterIP   192.168.6.1       <none>        80/TCP     125m
redis   ClusterIP   192.168.253.163   <none>        6379/TCP   47s

[root@hdss7-21 ~]# telnet 192.168.253.163 6379

Trying 192.168.253.163...

Connected to 192.168.253.163.

Escape character is '^]'.

3. Deploy the Kubernetes cloud driver component: Clouddriver

On the ops host HDSS7-200.host.com:

3.1. Prepare the Docker image

[root@hdss7-200 ~]# docker pull docker.io/armory/spinnaker-clouddriver-slim:release-1.8.x-14c9664

[root@hdss7-200 ~]# docker tag edb2507fdb62 harbor.od.com/armory/clouddriver:v1.8.x

[root@hdss7-200 ~]# docker push harbor.od.com/armory/clouddriver:v1.8.x

3.2. Prepare the MinIO secret

- Prepare the configuration file

On the ops host HDSS7-200.host.com:

[root@hdss7-200 ~]# mkdir -p /data/k8s-yaml/armory/clouddriver

[root@hdss7-200 ~]# vi /data/k8s-yaml/armory/clouddriver/credentials

[root@hdss7-200 ~]# cat /data/k8s-yaml/armory/clouddriver/credentials

[default]
aws_access_key_id=admin
aws_secret_access_key=admin123
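This file uses the AWS shared-credentials format. The Spinnaker services use it to reach MinIO's S3 API, and the Deployments below mount the resulting `credentials` secret at `/home/spinnaker/.aws`, the standard location for a user whose home is `/home/spinnaker`. Its keys must match `MINIO_ACCESS_KEY` and `MINIO_SECRET_KEY` in the MinIO Deployment. The file can also be written non-interactively instead of with vi. The helper name below is hypothetical.

```shell
#!/bin/sh
# write_s3_credentials FILE ACCESS_KEY SECRET_KEY: write an AWS shared-credentials
# file with a [default] profile. The keys must equal MinIO's
# MINIO_ACCESS_KEY / MINIO_SECRET_KEY.
write_s3_credentials() {
  local out="$1" key="$2" secret="$3"
  cat > "$out" <<EOF
[default]
aws_access_key_id=${key}
aws_secret_access_key=${secret}
EOF
}
```

With the values used here: `write_s3_credentials /data/k8s-yaml/armory/clouddriver/credentials admin admin123`.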

- Create the secret

On any compute node:

[root@hdss7-22 ~]# wget http://k8s-yaml.od.com/armory/clouddriver/credentials

[root@hdss7-22 ~]# kubectl create secret generic credentials --from-file=./credentials -n armory

3.3. Prepare the Kubernetes user configuration

1. Issue the certificate and private key

On the ops host HDSS7-200.host.com:

Prepare the certificate signing request file admin-csr.json:

[root@hdss7-200 ~]# cd /opt/certs/
[root@hdss7-200 certs]# cat admin-csr.json
{
    "CN": "cluster-admin",
    "hosts": [],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "ST": "beijing",
            "L": "beijing",
            "O": "od",
            "OU": "ops"
        }
    ]
}

Note: CN must be set to cluster-admin.
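For x509 client certificates, Kubernetes takes the certificate's CN as the user name and O as the group. That is why CN must be `cluster-admin`: the ClusterRoleBinding created later binds `--user=cluster-admin`. Below is a sketch that writes the same CSR file non-interactively, with the CN as a parameter. The function name is hypothetical.

```shell
#!/bin/sh
# write_admin_csr FILE [CN]: write a cfssl CSR definition like admin-csr.json.
# Kubernetes uses the client certificate's CN as the user name, so CN must match
# the --user passed to `kubectl create clusterrolebinding` (cluster-admin here).
write_admin_csr() {
  local out="$1" cn="${2:-cluster-admin}"
  cat > "$out" <<EOF
{
    "CN": "${cn}",
    "hosts": [],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "ST": "beijing",
            "L": "beijing",
            "O": "od",
            "OU": "ops"
        }
    ]
}
EOF
}
```

After signing, `openssl x509 -in admin.pem -noout -subject` shows whether the issued certificate really carries `CN = cluster-admin`.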

Issue the certificate to generate admin.pem and admin-key.pem:

[root@hdss7-200 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client admin-csr.json | cfssl-json -bare admin

[root@hdss7-200 certs]# ll -lh admin*

-rw-r--r-- 1 root root 1001 Mar 10 19:54 admin.csr

-rw-r--r-- 1 root root 285 Mar 10 19:45 admin-csr.json

-rw------- 1 root root 1.7K Mar 10 19:54 admin-key.pem

-rw-r--r-- 1 root root 1.4K Mar 10 19:54 admin.pem

2. Build the kubeconfig

On any compute node:

# First copy the certificates to the compute node

[root@hdss7-22 ~]# scp hdss7-200:/opt/certs/ca.pem .

[root@hdss7-22 ~]# scp hdss7-200:/opt/certs/admin.pem .

[root@hdss7-22 ~]# scp hdss7-200:/opt/certs/admin-key.pem .

[root@hdss7-22 ~]# kubectl config set-cluster myk8s --certificate-authority=./ca.pem --embed-certs=true --server=https://10.4.7.10:7443 --kubeconfig=config

[root@hdss7-22 ~]# kubectl config set-credentials cluster-admin --client-certificate=./admin.pem --client-key=./admin-key.pem --embed-certs=true --kubeconfig=config

[root@hdss7-22 ~]# kubectl config set-context myk8s-context --cluster=myk8s --user=cluster-admin --kubeconfig=config

[root@hdss7-22 ~]# kubectl config use-context myk8s-context --kubeconfig=config
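The four `kubectl config` calls above always follow the same pattern: set the cluster, then the credentials, then the context, then select that context. Wrapping them in one function turns the certificate paths, the apiserver address, and the output file into parameters. The function name and the `KUBECTL` dry-run override are illustrative. The cluster, user, and context names are the ones used above.

```shell
#!/bin/sh
# make_kubeconfig OUT SERVER CA CERT KEY: build a standalone kubeconfig for the
# cluster-admin user with embedded certificates, like the commands above.
# Set KUBECTL=echo to print the commands instead of running them.
make_kubeconfig() {
  local out="$1" server="$2" ca="$3" cert="$4" key="$5"
  local k="${KUBECTL:-kubectl}"
  $k config set-cluster myk8s --certificate-authority="$ca" --embed-certs=true \
    --server="$server" --kubeconfig="$out" &&
  $k config set-credentials cluster-admin --client-certificate="$cert" \
    --client-key="$key" --embed-certs=true --kubeconfig="$out" &&
  $k config set-context myk8s-context --cluster=myk8s --user=cluster-admin \
    --kubeconfig="$out" &&
  $k config use-context myk8s-context --kubeconfig="$out"
}
```

The equivalent of the commands above is `make_kubeconfig config https://10.4.7.10:7443 ./ca.pem ./admin.pem ./admin-key.pem`.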

[root@hdss7-22 ~]# kubectl create clusterrolebinding myk8s-admin --clusterrole=cluster-admin --user=cluster-admin

3. Verify the cluster-admin user

Copy the config file to /root/.kube on any compute node and verify it with kubectl:

[root@hdss7-22 ~]# cp config /root/.kube/

[root@hdss7-22 ~]# kubectl config view

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: DATA+OMITTED
    server: https://10.4.7.10:7443
  name: myk8s
contexts:
- context:
    cluster: myk8s
    user: cluster-admin
  name: myk8s-context
current-context: myk8s-context
kind: Config
preferences: {}
users:
- name: cluster-admin
  user:
    client-certificate-data: REDACTED
    client-key-data: REDACTED

[root@hdss7-22 ~]# kubectl get nodes

NAME        STATUS   ROLES         AGE   VERSION
10.4.7.21   Ready    master,node   15d   v1.15.4
10.4.7.22   Ready    master,node   19d   v1.15.4

4. Create the ConfigMap

[root@hdss7-22 ~]# cp config default-kubeconfig

[root@hdss7-22 ~]# kubectl create cm default-kubeconfig --from-file=default-kubeconfig -n armory

5. Let the ops host manage the Kubernetes cluster

[root@hdss7-200 ~]# mkdir /root/.kube

[root@hdss7-200 ~]# cd /root/.kube/

[root@hdss7-200 .kube]# scp hdss7-22:/root/config .

[root@hdss7-200 .kube]# ls

config

[root@hdss7-200 .kube]# scp hdss7-22:/opt/kubernetes/server/bin/kubectl /sbin/kubectl

[root@hdss7-200 .kube]# kubectl get node

NAME        STATUS   ROLES         AGE   VERSION
10.4.7.21   Ready    master,node   15d   v1.15.4
10.4.7.22   Ready    master,node   19d   v1.15.4

[root@hdss7-200 .kube]# kubectl top node
NAME        CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
10.4.7.21   189m         9%     1325Mi          77%
10.4.7.22   1999m        99%    1265Mi          73%

6. Comparing the control-node config with the remote config (analysis)
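The difference comes down to the `server:` entry. On the control nodes kubectl runs without a kubeconfig and falls back to `http://localhost:8080`, which here is the apiserver's local insecure port. The ops host uses the kubeconfig built above, which points at `https://10.4.7.10:7443`. Below is a rough sketch that reports which address a kubeconfig file would use. The function name is hypothetical. It reads only the first `server:` line and ignores multiple clusters and contexts, so `kubectl config view --minify` remains the authoritative check.

```shell
#!/bin/sh
# kubeconfig_server FILE: print the apiserver URL a single-cluster kubeconfig uses,
# or kubectl's built-in fallback when the file is missing or has no server entry.
kubeconfig_server() {
  local server=""
  if [ -f "$1" ]; then
    server="$(awk '$1 == "server:" { print $2; exit }' "$1")"
  fi
  echo "${server:-http://localhost:8080}"
}
```

For example, `kubeconfig_server /root/.kube/config` prints `https://10.4.7.10:7443` on the ops host and the localhost fallback on a control node without a kubeconfig.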

# On a control node: with no kubeconfig, kubectl talks to the local apiserver at 127.0.0.1:8080

[root@hdss7-21 ~]# kubectl config view

apiVersion: v1
clusters: []
contexts: []
current-context: ""
kind: Config
preferences: {}
users: []
[root@hdss7-21 ~]#

# On the remote ops host: kubectl goes through the apiserver address in the kubeconfig (https://10.4.7.10:7443)

[root@hdss7-200 ~]# kubectl config view

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: DATA+OMITTED
    server: https://10.4.7.10:7443
  name: myk8s
contexts:
- context:
    cluster: myk8s
    user: cluster-admin
  name: myk8s-context
current-context: myk8s-context
kind: Config
preferences: {}
users:
- name: cluster-admin
  user:
    client-certificate-data: REDACTED
    client-key-data: REDACTED

3.4. Prepare the resource manifests

- configmap01

[root@hdss7-200 ~]# cd /data/k8s-yaml/armory/clouddriver/

[root@hdss7-200 clouddriver]# cat init-env.yaml

kind: ConfigMap
apiVersion: v1
metadata:
  name: init-env
  namespace: armory
data:
  API_HOST: http://spinnaker.od.com/api
  ARMORY_ID: c02f0781-92f5-4e80-86db-0ba8fe7b8544
  ARMORYSPINNAKER_CONF_STORE_BUCKET: armory-platform
  ARMORYSPINNAKER_CONF_STORE_PREFIX: front50
  ARMORYSPINNAKER_GCS_ENABLED: "false"
  ARMORYSPINNAKER_S3_ENABLED: "true"
  AUTH_ENABLED: "false"
  AWS_REGION: us-east-1
  BASE_IP: 127.0.0.1
  CLOUDDRIVER_OPTS: -Dspring.profiles.active=armory,configurator,local
  CONFIGURATOR_ENABLED: "false"
  DECK_HOST: http://spinnaker.od.com
  ECHO_OPTS: -Dspring.profiles.active=armory,configurator,local
  GATE_OPTS: -Dspring.profiles.active=armory,configurator,local
  IGOR_OPTS: -Dspring.profiles.active=armory,configurator,local
  PLATFORM_ARCHITECTURE: k8s
  REDIS_HOST: redis://redis:6379
  SERVER_ADDRESS: 0.0.0.0
  SPINNAKER_AWS_DEFAULT_REGION: us-east-1
  SPINNAKER_AWS_ENABLED: "false"
  SPINNAKER_CONFIG_DIR: /home/spinnaker/config
  SPINNAKER_GOOGLE_PROJECT_CREDENTIALS_PATH: ""
  SPINNAKER_HOME: /home/spinnaker
  SPRING_PROFILES_ACTIVE: armory,configurator,local

- configmap02

# The file is available at https://gitee.com/yodo1/k8s/blob/master/default-config.yaml

Simply copy this file into the local directory as default-config.yaml.

- configmap03

[root@hdss7-200 clouddriver]# cat custom-config.yaml

kind: ConfigMap
apiVersion: v1
metadata:
  name: custom-config
  namespace: armory
data:
  clouddriver-local.yml: |
    kubernetes:
      enabled: true
      accounts:
        - name: cluster-admin
          serviceAccount: false
          dockerRegistries:
            - accountName: harbor
              namespace: []
          namespaces:
            - test
            - prod
          kubeconfigFile: /opt/spinnaker/credentials/custom/default-kubeconfig
      primaryAccount: cluster-admin
    dockerRegistry:
      enabled: true
      accounts:
        - name: harbor
          requiredGroupMembership: []
          providerVersion: V1
          insecureRegistry: true
          address: http://harbor.od.com
          username: admin
          password: Harbor12345
      primaryAccount: harbor
    artifacts:
      s3:
        enabled: true
        accounts:
        - name: armory-config-s3-account
          apiEndpoint: http://minio
          apiRegion: us-east-1
      gcs:
        enabled: false
        accounts:
        - name: armory-config-gcs-account
  custom-config.json: ""
  echo-configurator.yml: |
    diagnostics:
      enabled: true
  front50-local.yml: |
    spinnaker:
      s3:
        endpoint: http://minio
  igor-local.yml: |
    jenkins:
      enabled: true
      masters:
        - name: jenkins-admin
          address: http://jenkins.od.com
          username: admin
          password: admin123
      primaryAccount: jenkins-admin
  nginx.conf: |
    gzip on;
    gzip_types text/plain text/css application/json application/x-javascript text/xml application/xml application/xml+rss text/javascript application/vnd.ms-fontobject application/x-font-ttf font/opentype image/svg+xml image/x-icon;
    server {
      listen 80;
      location / {
        proxy_pass http://armory-deck/;
      }
      location /api/ {
        proxy_pass http://armory-gate:8084/;
      }
      rewrite ^/login(.*)$ /api/login$1 last;
      rewrite ^/auth(.*)$ /api/auth$1 last;
    }
  spinnaker-local.yml: |
    services:
      igor:
        enabled: true
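The `nginx.conf` key in this ConfigMap, presumably for the front-end Nginx proxy deployed in section 10, sends `/api/` to Gate and everything else to Deck. Both `proxy_pass` URLs end in `/`, so nginx replaces the matched location prefix: `/api/credentials` reaches Gate as `/credentials`. The two server-level `rewrite ... last` rules run before location matching and turn `/login...` and `/auth...` into `/api/...`. The shell sketch below mimics this mapping purely to illustrate the routing. The function name is hypothetical, and it ignores query strings as well as nginx's automatic 301 redirect for a bare `/api`.

```shell
#!/bin/sh
# route URI: print the upstream URL the nginx.conf server block would proxy URI to.
# Illustration only: query strings and the automatic 301 for "/api" are not modelled.
route() {
  local uri="$1"
  # Server-level rewrites, applied before location matching:
  #   rewrite ^/login(.*)$ /api/login$1 last;
  #   rewrite ^/auth(.*)$  /api/auth$1  last;
  case "$uri" in
    /login*) uri="/api/login${uri#/login}" ;;
    /auth*)  uri="/api/auth${uri#/auth}" ;;
  esac
  # location /api/ { proxy_pass http://armory-gate:8084/; } strips the /api/ prefix;
  # location /     { proxy_pass http://armory-deck/; } keeps the path unchanged.
  case "$uri" in
    /api/*) echo "http://armory-gate:8084/${uri#/api/}" ;;
    *)      echo "http://armory-deck/${uri#/}" ;;
  esac
}
```

This also shows why `API_HOST` in init-env is `http://spinnaker.od.com/api`: the browser calls Gate through the `/api/` prefix on the same host that serves Deck.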

- deployment

[root@hdss7-200 clouddriver]# cat dp.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  labels:
    app: armory-clouddriver
  name: armory-clouddriver
  namespace: armory
spec:
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: armory-clouddriver
  template:
    metadata:
      annotations:
        artifact.spinnaker.io/location: '"armory"'
        artifact.spinnaker.io/name: '"armory-clouddriver"'
        artifact.spinnaker.io/type: '"kubernetes/deployment"'
        moniker.spinnaker.io/application: '"armory"'
        moniker.spinnaker.io/cluster: '"clouddriver"'
      labels:
        app: armory-clouddriver
    spec:
      containers:
      - name: armory-clouddriver
        image: harbor.od.com/armory/clouddriver:v1.8.x
        imagePullPolicy: IfNotPresent
        command:
        - bash
        - -c
        args:
        - bash /opt/spinnaker/config/default/fetch.sh && cd /home/spinnaker/config
          && /opt/clouddriver/bin/clouddriver
        ports:
        - containerPort: 7002
          protocol: TCP
        env:
        - name: JAVA_OPTS
          value: -Xmx2000M
        envFrom:
        - configMapRef:
            name: init-env
        livenessProbe:
          failureThreshold: 5
          httpGet:
            path: /health
            port: 7002
            scheme: HTTP
          initialDelaySeconds: 600
          periodSeconds: 3
          successThreshold: 1
          timeoutSeconds: 1
        readinessProbe:
          failureThreshold: 5
          httpGet:
            path: /health
            port: 7002
            scheme: HTTP
          initialDelaySeconds: 180
          periodSeconds: 3
          successThreshold: 5
          timeoutSeconds: 1
        securityContext:
          runAsUser: 0
        volumeMounts:
        - mountPath: /etc/podinfo
          name: podinfo
        - mountPath: /home/spinnaker/.aws
          name: credentials
        - mountPath: /opt/spinnaker/credentials/custom
          name: default-kubeconfig
        - mountPath: /opt/spinnaker/config/default
          name: default-config
        - mountPath: /opt/spinnaker/config/custom
          name: custom-config
      imagePullSecrets:
      - name: harbor
      volumes:
      - configMap:
          defaultMode: 420
          name: default-kubeconfig
        name: default-kubeconfig
      - configMap:
          defaultMode: 420
          name: custom-config
        name: custom-config
      - configMap:
          defaultMode: 420
          name: default-config
        name: default-config
      - name: credentials
        secret:
          defaultMode: 420
          secretName: credentials
      - downwardAPI:
          defaultMode: 420
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.labels
            path: labels
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.annotations
            path: annotations
        name: podinfo
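The probe settings in this Deployment determine how long Clouddriver gets to start. Roughly, the first probe runs after `initialDelaySeconds`, and a verdict needs `successThreshold` (readiness) or `failureThreshold` (liveness) consecutive results spaced `periodSeconds` apart. The helper below gives a back-of-the-envelope estimate. Its name is hypothetical, and it ignores `timeoutSeconds` and kubelet scheduling jitter.

```shell
#!/bin/sh
# probe_threshold_time DELAY PERIOD THRESHOLD: approximate seconds after container
# start until a probe has seen THRESHOLD consecutive identical results.
#   readiness + successThreshold -> earliest moment the pod can become Ready
#   liveness  + failureThreshold -> earliest restart if /health never succeeds
probe_threshold_time() {
  echo $(( $1 + ($3 - 1) * $2 ))
}
```

For Clouddriver, `probe_threshold_time 180 3 5` gives about 192 s before the pod can be Ready. `probe_threshold_time 600 3 5` gives about 612 s before the kubelet would restart a container whose `/health` never comes up. Front50's readiness settings (180, 5, 8) work out to roughly 215 s.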

- svc

[root@hdss7-200 clouddriver]# cat svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: armory-clouddriver
  namespace: armory
spec:
  ports:
  - port: 7002
    protocol: TCP
    targetPort: 7002
  selector:
    app: armory-clouddriver

[root@hdss7-200 clouddriver]#

[root@hdss7-200 clouddriver]# ls

credentials custom-config.yaml default-config.yaml dp.yaml init-env.yaml svc.yaml

3.5. Apply the resource manifests

[root@hdss7-200 clouddriver]# kubectl apply -f ./init-env.yaml

[root@hdss7-200 clouddriver]# kubectl apply -f ./default-config.yaml

[root@hdss7-200 clouddriver]# kubectl apply -f ./custom-config.yaml

[root@hdss7-200 clouddriver]# kubectl apply -f ./dp.yaml

[root@hdss7-200 clouddriver]# kubectl apply -f ./svc.yaml

3.6. Verify Clouddriver
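`/health` returns a Spring Boot style JSON document. Its top-level `status` summarises the individual checks, which for Clouddriver are Kubernetes, Redis, the Docker registry, and disk space. When scripting this check, compare the top-level field instead of grepping for `UP` anywhere, because the nested entries also contain `"status":"UP"`. The sketch below assumes the compact JSON shown in the output (no whitespace, `status` as the first key). The function name is hypothetical. Where `jq` is available, `jq -r .status` is more robust.

```shell
#!/bin/sh
# health_is_up: read a /health JSON body on stdin and succeed only if the
# top-level status is UP. Assumes compact JSON with "status" as the first key.
health_is_up() {
  case "$(cat)" in
    '{"status":"UP"'*) return 0 ;;
    *)                 return 1 ;;
  esac
}
```

Inside the minio pod, for instance: `curl -s armory-clouddriver:7002/health | health_is_up && echo clouddriver is up`.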

[root@hdss7-22 ~]# kubectl exec -it minio-5ff9567d9d-mhpkq /bin/bash -n armory

[root@minio-5ff9567d9d-mhpkq /]# curl armory-clouddriver:7002/health
{"status":"UP","kubernetes":{"status":"UP"},"redisHealth":{"status":"UP","maxIdle":100,"minIdle":25,"numActive":0,"numIdle":4,"numWaiters":0},"dockerRegistry":{"status":"UP"},"diskSpace":{"status":"UP","total":18238930944,"free":4460851200,"threshold":10485760}}

4. Deploy the data persistence component: Front50

4.1. Prepare the Docker image

[root@hdss7-200 ~]# docker pull docker.io/armory/spinnaker-front50-slim:release-1.8.x-93febf2

[root@hdss7-200 ~]# docker tag 0d353788f4f2 harbor.od.com/armory/front50:v1.8.x

[root@hdss7-200 ~]# docker push harbor.od.com/armory/front50:v1.8.x

4.2. Prepare the resource manifests

[root@hdss7-200 ~]# mkdir /data/k8s-yaml/armory/front50

[root@hdss7-200 ~]# cd /data/k8s-yaml/armory/front50/

[root@hdss7-200 front50]# cat dp.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  labels:
    app: armory-front50
  name: armory-front50
  namespace: armory
spec:
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: armory-front50
  template:
    metadata:
      annotations:
        artifact.spinnaker.io/location: '"armory"'
        artifact.spinnaker.io/name: '"armory-front50"'
        artifact.spinnaker.io/type: '"kubernetes/deployment"'
        moniker.spinnaker.io/application: '"armory"'
        moniker.spinnaker.io/cluster: '"front50"'
      labels:
        app: armory-front50
    spec:
      containers:
      - name: armory-front50
        image: harbor.od.com/armory/front50:v1.8.x
        imagePullPolicy: IfNotPresent
        command:
        - bash
        - -c
        args:
        - bash /opt/spinnaker/config/default/fetch.sh && cd /home/spinnaker/config
          && /opt/front50/bin/front50
        ports:
        - containerPort: 8080
          protocol: TCP
        env:
        - name: JAVA_OPTS
          value: -javaagent:/opt/front50/lib/jamm-0.2.5.jar -Xmx1000M
        envFrom:
        - configMapRef:
            name: init-env
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 600
          periodSeconds: 3
          successThreshold: 1
          timeoutSeconds: 1
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 180
          periodSeconds: 5
          successThreshold: 8
          timeoutSeconds: 1
        volumeMounts:
        - mountPath: /etc/podinfo
          name: podinfo
        - mountPath: /home/spinnaker/.aws
          name: credentials
        - mountPath: /opt/spinnaker/config/default
          name: default-config
        - mountPath: /opt/spinnaker/config/custom
          name: custom-config
      imagePullSecrets:
      - name: harbor
      volumes:
      - configMap:
          defaultMode: 420
          name: custom-config
        name: custom-config
      - configMap:
          defaultMode: 420
          name: default-config
        name: default-config
      - name: credentials
        secret:
          defaultMode: 420
          secretName: credentials
      - downwardAPI:
          defaultMode: 420
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.labels
            path: labels
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.annotations
            path: annotations
        name: podinfo

[root@hdss7-200 front50]#

[root@hdss7-200 front50]# cat svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: armory-front50
  namespace: armory
spec:
  ports:
  - port: 8080
    protocol: TCP
    targetPort: 8080
  selector:
    app: armory-front50

4.3. Apply the resource manifests

[root@hdss7-200 front50]# kubectl apply -f dp.yaml

[root@hdss7-200 front50]# kubectl apply -f svc.yaml

4.4. Check

[root@hdss7-22 ~]# kubectl get pods -n armory -o wide|grep armory-front50

armory-front50-fc74f5794-rpcx5 1/1 Running 1 102s 172.7.21.12 hdss7-21.host.com <none> <none>

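[root@hdss7-22 ~]# curl 172.7.21.12:8080/health
{"status":"UP"}

4.5. Access from a browser

Log in to http://minio.od.com and check whether the storage bucket has been created (expected to be armory-platform, the value of ARMORYSPINNAKER_CONF_STORE_BUCKET in init-env). Here it has been created.

5. Deploy the task orchestration component: Orca

5.1. Prepare the Docker image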

[root@hdss7-200 ~]# docker pull docker.io/armory/spinnaker-orca-slim:release-1.8.x-de4ab55

[root@hdss7-200 ~]# docker images|grep orca

armory/spinnaker-orca-slim release-1.8.x-de4ab55 5103b1f73e04 2 years ago 141MB

[root@hdss7-200 ~]# docker tag 5103b1f73e04 harbor.od.com/armory/orca:v1.8.x

[root@hdss7-200 ~]# docker push harbor.od.com/armory/orca:v1.8.x

5.2. Prepare the resource manifests

[root@hdss7-200 ~]# mkdir /data/k8s-yaml/armory/orca

[root@hdss7-200 ~]# cd /data/k8s-yaml/armory/orca/

[root@hdss7-200 orca]# cat dp.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  labels:
    app: armory-orca
  name: armory-orca
  namespace: armory
spec:
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: armory-orca
  template:
    metadata:
      annotations:
        artifact.spinnaker.io/location: '"armory"'
        artifact.spinnaker.io/name: '"armory-orca"'
        artifact.spinnaker.io/type: '"kubernetes/deployment"'
        moniker.spinnaker.io/application: '"armory"'
        moniker.spinnaker.io/cluster: '"orca"'
      labels:
        app: armory-orca
    spec:
      containers:
      - name: armory-orca
        image: harbor.od.com/armory/orca:v1.8.x
        imagePullPolicy: IfNotPresent
        command:
        - bash
        - -c
        args:
        - bash /opt/spinnaker/config/default/fetch.sh && cd /home/spinnaker/config
          && /opt/orca/bin/orca
        ports:
        - containerPort: 8083
          protocol: TCP
        env:
        - name: JAVA_OPTS
          value: -Xmx1000M
        envFrom:
        - configMapRef:
            name: init-env
        livenessProbe:
          failureThreshold: 5
          httpGet:
            path: /health
            port: 8083
            scheme: HTTP
          initialDelaySeconds: 600
          periodSeconds: 5
          successThreshold: 1
          timeoutSeconds: 1
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /health
            port: 8083
            scheme: HTTP
          initialDelaySeconds: 180
          periodSeconds: 3
          successThreshold: 5
          timeoutSeconds: 1
        volumeMounts:
        - mountPath: /etc/podinfo
          name: podinfo
        - mountPath: /opt/spinnaker/config/default
          name: default-config
        - mountPath: /opt/spinnaker/config/custom
          name: custom-config
      imagePullSecrets:
      - name: harbor
      volumes:
      - configMap:
          defaultMode: 420
          name: custom-config
        name: custom-config
      - configMap:
          defaultMode: 420
          name: default-config
        name: default-config
      - downwardAPI:
          defaultMode: 420
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.labels
            path: labels
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.annotations
            path: annotations
        name: podinfo

[root@hdss7-200 orca]#

[root@hdss7-200 orca]# cat svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: armory-orca
  namespace: armory
spec:
  ports:
  - port: 8083
    protocol: TCP
    targetPort: 8083
  selector:
    app: armory-orca

5.3. Apply the resource manifests

[root@hdss7-200 orca]# kubectl apply -f ./dp.yaml

deployment.extensions/armory-orca created

[root@hdss7-200 orca]# kubectl apply -f ./svc.yaml

service/armory-orca created

5.4. Check
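The checks in this and the following sections copy the pod IP by hand from `kubectl get pods -o wide` and then curl it. The IP is the sixth column of that wide output (NAME, READY, STATUS, RESTARTS, AGE, IP, NODE, ...), so awk can extract it. A hypothetical helper follows. Another option is `kubectl get pods -n armory -l app=armory-orca -o jsonpath='{.items[0].status.podIP}'`, which asks the API server directly instead of parsing the table.

```shell
#!/bin/sh
# pod_ip PATTERN: read `kubectl get pods -o wide` output on stdin and print the IP
# (6th column) of the first pod whose name contains PATTERN.
pod_ip() {
  awk -v p="$1" 'NR > 1 && index($1, p) { print $6; exit }'
}
```

Combined with the check below: `curl "$(kubectl get pods -n armory -o wide | pod_ip armory-orca):8083/health"`.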

[root@hdss7-22 ~]# kubectl get pods -n armory -o wide|grep armory-orca

armory-orca-679d659f59-r5wrd 1/1 Running 0 61s 172.7.21.15 hdss7-21.host.com <none> <none>

[root@hdss7-22 ~]#

[root@hdss7-22 ~]# curl 172.7.21.15:8083/health
{"status":"UP"}

6. Deploy the message bus component: Echo

6.1. Prepare the Docker image

[root@hdss7-200 ~]# docker pull docker.io/armory/echo-armory:c36d576-release-1.8.x-617c567

[root@hdss7-200 ~]# docker images|grep echo

armory/echo-armory c36d576-release-1.8.x-617c567 415efd46f474 2 years ago 287MB

[root@hdss7-200 ~]# docker tag 415efd46f474 harbor.od.com/armory/echo:v1.8.x

[root@hdss7-200 ~]# docker push harbor.od.com/armory/echo:v1.8.x

6.2. Prepare the resource manifests

[root@hdss7-200 ~]# mkdir /data/k8s-yaml/armory/echo

[root@hdss7-200 ~]# cd /data/k8s-yaml/armory/echo/

[root@hdss7-200 echo]# cat dp.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  labels:
    app: armory-echo
  name: armory-echo
  namespace: armory
spec:
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: armory-echo
  template:
    metadata:
      annotations:
        artifact.spinnaker.io/location: '"armory"'
        artifact.spinnaker.io/name: '"armory-echo"'
        artifact.spinnaker.io/type: '"kubernetes/deployment"'
        moniker.spinnaker.io/application: '"armory"'
        moniker.spinnaker.io/cluster: '"echo"'
      labels:
        app: armory-echo
    spec:
      containers:
      - name: armory-echo
        image: harbor.od.com/armory/echo:v1.8.x
        imagePullPolicy: IfNotPresent
        command:
        - bash
        - -c
        args:
        - bash /opt/spinnaker/config/default/fetch.sh && cd /home/spinnaker/config
          && /opt/echo/bin/echo
        ports:
        - containerPort: 8089
          protocol: TCP
        env:
        - name: JAVA_OPTS
          value: -javaagent:/opt/echo/lib/jamm-0.2.5.jar -Xmx1000M
        envFrom:
        - configMapRef:
            name: init-env
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /health
            port: 8089
            scheme: HTTP
          initialDelaySeconds: 600
          periodSeconds: 3
          successThreshold: 1
          timeoutSeconds: 1
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /health
            port: 8089
            scheme: HTTP
          initialDelaySeconds: 180
          periodSeconds: 3
          successThreshold: 5
          timeoutSeconds: 1
        volumeMounts:
        - mountPath: /etc/podinfo
          name: podinfo
        - mountPath: /opt/spinnaker/config/default
          name: default-config
        - mountPath: /opt/spinnaker/config/custom
          name: custom-config
      imagePullSecrets:
      - name: harbor
      volumes:
      - configMap:
          defaultMode: 420
          name: custom-config
        name: custom-config
      - configMap:
          defaultMode: 420
          name: default-config
        name: default-config
      - downwardAPI:
          defaultMode: 420
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.labels
            path: labels
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.annotations
            path: annotations
        name: podinfo

[root@hdss7-200 echo]#

[root@hdss7-200 echo]# cat svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: armory-echo
  namespace: armory
spec:
  ports:
  - port: 8089
    protocol: TCP
    targetPort: 8089
  selector:
    app: armory-echo

6.3. Apply the resource manifests

[root@hdss7-200 echo]# kubectl apply -f ./dp.yaml

deployment.extensions/armory-echo created

[root@hdss7-200 echo]# kubectl apply -f ./svc.yaml

service/armory-echo created

6.4. Check

[root@hdss7-22 ~]# kubectl get pods -n armory -o wide|grep echo

armory-echo-85db8f6948-ps266 1/1 Running 0 62s 172.7.21.17 hdss7-21.host.com <none> <none>

[root@hdss7-22 ~]# curl 172.7.21.17:8089/health
{"status":"UP"}

7. Deploy the pipeline integration component: Igor

7.1. Prepare the Docker image

[root@hdss7-200 ~]# docker pull docker.io/armory/spinnaker-igor-slim:release-1.8-x-new-install-healthy-ae2b329

[root@hdss7-200 ~]# docker tag 23984f5b43f6 harbor.od.com/armory/igor:v1.8.x

[root@hdss7-200 ~]# docker push harbor.od.com/armory/igor:v1.8.x

7.2. Prepare the resource manifests

[root@hdss7-200 ~]# mkdir /data/k8s-yaml/armory/igor

[root@hdss7-200 ~]# cd /data/k8s-yaml/armory/igor/

[root@hdss7-200 igor]# cat dp.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  labels:
    app: armory-igor
  name: armory-igor
  namespace: armory
spec:
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: armory-igor
  template:
    metadata:
      annotations:
        artifact.spinnaker.io/location: '"armory"'
        artifact.spinnaker.io/name: '"armory-igor"'
        artifact.spinnaker.io/type: '"kubernetes/deployment"'
        moniker.spinnaker.io/application: '"armory"'
        moniker.spinnaker.io/cluster: '"igor"'
      labels:
        app: armory-igor
    spec:
      containers:
      - name: armory-igor
        image: harbor.od.com/armory/igor:v1.8.x
        imagePullPolicy: IfNotPresent
        command:
        - bash
        - -c
        args:
        - bash /opt/spinnaker/config/default/fetch.sh && cd /home/spinnaker/config
          && /opt/igor/bin/igor
        ports:
        - containerPort: 8088
          protocol: TCP
        env:
        - name: IGOR_PORT_MAPPING
          value: -8088:8088
        - name: JAVA_OPTS
          value: -Xmx1000M
        envFrom:
        - configMapRef:
            name: init-env
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /health
            port: 8088
            scheme: HTTP
          initialDelaySeconds: 600
          periodSeconds: 3
          successThreshold: 1
          timeoutSeconds: 1
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /health
            port: 8088
            scheme: HTTP
          initialDelaySeconds: 180
          periodSeconds: 5
          successThreshold: 5
          timeoutSeconds: 1
        volumeMounts:
        - mountPath: /etc/podinfo
          name: podinfo
        - mountPath: /opt/spinnaker/config/default
          name: default-config
        - mountPath: /opt/spinnaker/config/custom
          name: custom-config
      imagePullSecrets:
      - name: harbor
      securityContext:
        runAsUser: 0
      volumes:
      - configMap:
          defaultMode: 420
          name: custom-config
        name: custom-config
      - configMap:
          defaultMode: 420
          name: default-config
        name: default-config
      - downwardAPI:
          defaultMode: 420
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.labels
            path: labels
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.annotations
            path: annotations
        name: podinfo

[root@hdss7-200 igor]#

[root@hdss7-200 igor]# cat svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: armory-igor
  namespace: armory
spec:
  ports:
  - port: 8088
    protocol: TCP
    targetPort: 8088
  selector:
    app: armory-igor

7.3. Apply the resource manifests

[root@hdss7-200 igor]# kubectl apply -f ./dp.yaml

deployment.extensions/armory-igor created

[root@hdss7-200 igor]# kubectl apply -f ./svc.yaml

service/armory-igor created

7.4. Check

[root@hdss7-22 ~]# kubectl get pods -n armory -o wide|grep igor

armory-igor-59c97bb4dc-pzgwp 1/1 Running 0 60s 172.7.21.18 hdss7-21.host.com <none> <none>

[root@hdss7-22 ~]# curl 172.7.21.18:8088/health

{"status":"UP"}

8. Deploy the API component: Gate

8.1. Prepare the Docker image

[root@hdss7-200 ~]# docker pull docker.io/armory/gate-armory:dfafe73-release-1.8.x-5d505ca

[root@hdss7-200 ~]# docker images|grep gate

armory/gate-armory dfafe73-release-1.8.x-5d505ca b092d4665301 2 years ago 179MB

[root@hdss7-200 ~]# docker tag b092d4665301 harbor.od.com/armory/gate:v1.8.x

[root@hdss7-200 ~]# docker push harbor.od.com/armory/gate:v1.8.x

8.2. Prepare the resource manifests

[root@hdss7-200 ~]# mkdir /data/k8s-yaml/armory/gate

[root@hdss7-200 ~]# cd /data/k8s-yaml/armory/gate/

[root@hdss7-200 gate]# cat dp.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  labels:
    app: armory-gate
  name: armory-gate
  namespace: armory
spec:
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: armory-gate
  template:
    metadata:
      annotations:
        artifact.spinnaker.io/location: '"armory"'
        artifact.spinnaker.io/name: '"armory-gate"'
        artifact.spinnaker.io/type: '"kubernetes/deployment"'
        moniker.spinnaker.io/application: '"armory"'
        moniker.spinnaker.io/cluster: '"gate"'
      labels:
        app: armory-gate
    spec:
      containers:
      - name: armory-gate
        image: harbor.od.com/armory/gate:v1.8.x
        imagePullPolicy: IfNotPresent
        command:
        - bash
        - -c
        args:
        - bash /opt/spinnaker/config/default/fetch.sh gate && cd /home/spinnaker/config
          && /opt/gate/bin/gate
        ports:
        - containerPort: 8084
          name: gate-port
          protocol: TCP
        - containerPort: 8085
          name: gate-api-port
          protocol: TCP
        env:
        - name: GATE_PORT_MAPPING
          value: -8084:8084
        - name: GATE_API_PORT_MAPPING
          value: -8085:8085
        - name: JAVA_OPTS
          value: -Xmx1000M
        envFrom:
        - configMapRef:
            name: init-env
        livenessProbe:
          exec:
            command:
            - /bin/bash
            - -c
            - wget -O - http://localhost:8084/health || wget -O - https://localhost:8084/health
          failureThreshold: 5
          initialDelaySeconds: 600
          periodSeconds: 5
          successThreshold: 1
          timeoutSeconds: 1
        readinessProbe:
          exec:
            command:
            - /bin/bash
            - -c
            - wget -O - http://localhost:8084/health?checkDownstreamServices=true&downstreamServices=true
              || wget -O - https://localhost:8084/health?checkDownstreamServices=true&downstreamServices=true
          failureThreshold: 3
          initialDelaySeconds: 180
          periodSeconds: 5
          successThreshold: 10
          timeoutSeconds: 1
        volumeMounts:
        - mountPath: /etc/podinfo
          name: podinfo
        - mountPath: /opt/spinnaker/config/default
          name: default-config
        - mountPath: /opt/spinnaker/config/custom
          name: custom-config
      imagePullSecrets:
      - name: harbor
      securityContext:
        runAsUser: 0
      volumes:
      - configMap:
          defaultMode: 420
          name: custom-config
        name: custom-config
      - configMap:
          defaultMode: 420
          name: default-config
        name: default-config
      - downwardAPI:
          defaultMode: 420
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.labels
            path: labels
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.annotations
            path: annotations
        name: podinfo

[root@hdss7-200 gate]# cat svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: armory-gate
  namespace: armory
spec:
  ports:
  - name: gate-port
    port: 8084
    protocol: TCP
    targetPort: 8084
  - name: gate-api-port
    port: 8085
    protocol: TCP
    targetPort: 8085
  selector:
    app: armory-gate

8.3. Apply the resource manifests

[root@hdss7-200 gate]# kubectl apply -f ./dp.yaml

deployment.extensions/armory-gate created

[root@hdss7-200 gate]# kubectl apply -f ./svc.yaml

service/armory-gate created
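Gate's readiness probe requests `/health` with `checkDownstreamServices=true&downstreamServices=true`. When you check this by hand, quote the URL: in an unquoted shell command, `&` ends the command and runs it in the background, so only the first query parameter would reach Gate. The sketch below uses hypothetical helpers (`gate_health_url` and `check_gate` are not part of Spinnaker) and assumes, like the `curl` checks in this section, that it runs on a node that can reach Pod IPs.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for checking Gate by hand.

# Build the health URL; the format string is quoted, so '&' stays part of the URL.
gate_health_url() {
  local ip="$1" port="${2:-8084}"
  printf 'http://%s:%s/health?checkDownstreamServices=true&downstreamServices=true' "$ip" "$port"
}

# Query Gate, including its downstream services.
# -s: silent; -f: return a non-zero exit code on HTTP errors (4xx/5xx).
check_gate() {
  curl -sf "$(gate_health_url "$1")"
}

# Example, using the Pod IP reported by `kubectl get pods -n armory -o wide`:
# check_gate 172.7.21.19
```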

8.4. Check

[root@hdss7-22 ~]# kubectl get pods -n armory -o wide|grep gate

armory-gate-586bd757f-d9vf6 1/1 Running 0 74s 172.7.21.19 hdss7-21.host.com <none> <none>

[root@hdss7-22 ~]# curl 172.7.21.19:8084/health

{"status":"UP"}

9. Deploy the front-end web project: Deck

9.1. Prepare the Docker image

[root@hdss7-200 ~]# docker pull docker.io/armory/deck-armory:d4bf0cf-release-1.8.x-0a33f94

[root@hdss7-200 ~]# docker images |grep deck

armory/deck-armory d4bf0cf-release-1.8.x-0a33f94 9a87ba3b319f 2 years ago 518MB

[root@hdss7-200 ~]# docker tag 9a87ba3b319f harbor.od.com/armory/deck:v1.8.x

[root@hdss7-200 ~]# docker push harbor.od.com/armory/deck:v1.8.x

9.2. Prepare the resource manifests

[root@hdss7-200 ~]# mkdir /data/k8s-yaml/armory/deck

[root@hdss7-200 ~]# cd /data/k8s-yaml/armory/deck/

[root@hdss7-200 deck]# cat dp.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  labels:
    app: armory-deck
  name: armory-deck
  namespace: armory
spec:
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: armory-deck
  template:
    metadata:
      annotations:
        artifact.spinnaker.io/location: '"armory"'
        artifact.spinnaker.io/name: '"armory-deck"'
        artifact.spinnaker.io/type: '"kubernetes/deployment"'
        moniker.spinnaker.io/application: '"armory"'
        moniker.spinnaker.io/cluster: '"deck"'
      labels:
        app: armory-deck
    spec:
      containers:
      - name: armory-deck
        image: harbor.od.com/armory/deck:v1.8.x
        imagePullPolicy: IfNotPresent
        command:
        - bash
        - -c
        args:
        - bash /opt/spinnaker/config/default/fetch.sh && /entrypoint.sh
        ports:
        - containerPort: 9000
          protocol: TCP
        envFrom:
        - configMapRef:
            name: init-env
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /
            port: 9000
            scheme: HTTP
          initialDelaySeconds: 180
          periodSeconds: 3
          successThreshold: 1
          timeoutSeconds: 1
        readinessProbe:
          failureThreshold: 5
          httpGet:
            path: /
            port: 9000
            scheme: HTTP
          initialDelaySeconds: 30
          periodSeconds: 3
          successThreshold: 5
          timeoutSeconds: 1
        volumeMounts:
        - mountPath: /etc/podinfo
          name: podinfo
        - mountPath: /opt/spinnaker/config/default
          name: default-config
        - mountPath: /opt/spinnaker/config/custom
          name: custom-config
      imagePullSecrets:
      - name: harbor
      volumes:
      - configMap:
          defaultMode: 420
          name: custom-config
        name: custom-config
      - configMap:
          defaultMode: 420
          name: default-config
        name: default-config
      - downwardAPI:
          defaultMode: 420
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.labels
            path: labels
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.annotations
            path: annotations
        name: podinfo

[root@hdss7-200 deck]#

[root@hdss7-200 deck]# cat svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: armory-deck
  namespace: armory
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 9000
  selector:
    app: armory-deck

9.3. Apply the resource manifests

[root@hdss7-200 deck]# kubectl apply -f ./dp.yaml

[root@hdss7-200 deck]# kubectl apply -f ./svc.yaml

9.4. Check

[root@hdss7-22 ~]# kubectl get pods -n armory -o wide|grep deck

armory-deck-759754bc45-5pshp 1/1 ContainerCreating 0 84s <none> hdss7-21.host.com <none> <none>
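When the listing above was taken, the Deck Pod was still being created, so wait until it is Ready before probing it. The sketch below is a hypothetical helper (`wait_and_probe` is not part of any tool); it assumes the Pods carry the `app=<name>` label used in the manifests of this chapter and that the host's `kubectl` supports `kubectl wait` (added in 1.11).

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait for a component's Pod to become Ready, then request a path
# on the Pod IP and print the HTTP status code.
wait_and_probe() {
  local app="$1" port="$2" path="${3:-/}" ns="${4:-armory}" ip
  # Block until the Pod reports the Ready condition, or give up after 10 minutes.
  kubectl -n "$ns" wait --for=condition=Ready pod -l "app=${app}" --timeout=600s || return 1
  # Read the IP of the first matching Pod.
  ip=$(kubectl -n "$ns" get pod -l "app=${app}" -o jsonpath='{.items[0].status.podIP}')
  # Print only the status code (expect 200 once the service is up).
  curl -s -o /dev/null -w '%{http_code}\n' "http://${ip}:${port}${path}"
}

# Examples:
# wait_and_probe armory-deck 9000 /
# wait_and_probe armory-igor 8088 /health
```

During a rolling update more than one Pod can match the label, so `.items[0]` is not guaranteed to be the newest Pod.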

10. Deploy the front-end proxy: Nginx

10.1. Prepare the Docker image

[root@hdss7-200 ~]# docker pull nginx:1.12.2

[root@hdss7-200 ~]# docker tag 4037a5562b03 harbor.od.com/armory/nginx:v1.12.2

[root@hdss7-200 ~]# docker push harbor.od.com/armory/nginx:v1.12.2

10.2. Prepare the resource manifests

[root@hdss7-200 ~]# mkdir /data/k8s-yaml/armory/nginx

[root@hdss7-200 ~]# cd /data/k8s-yaml/armory/nginx/

[root@hdss7-200 nginx]# cat dp.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  labels:
    app: armory-nginx
  name: armory-nginx
  namespace: armory
spec:
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: armory-nginx
  template:
    metadata:
      annotations:
        artifact.spinnaker.io/location: '"armory"'
        artifact.spinnaker.io/name: '"armory-nginx"'
        artifact.spinnaker.io/type: '"kubernetes/deployment"'
        moniker.spinnaker.io/application: '"armory"'
        moniker.spinnaker.io/cluster: '"nginx"'
      labels:
        app: armory-nginx
    spec:
      containers:
      - name: armory-nginx
        image: harbor.od.com/armory/nginx:v1.12.2
        imagePullPolicy: Always
        command:
        - bash
        - -c
        args:
        - bash /opt/spinnaker/config/default/fetch.sh nginx && nginx -g 'daemon off;'
        ports:
        - containerPort: 80
          name: http
          protocol: TCP
        - containerPort: 443
          name: https
          protocol: TCP
        - containerPort: 8085
          name: api
          protocol: TCP
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /
            port: 80
            scheme: HTTP
          initialDelaySeconds: 180
          periodSeconds: 3
          successThreshold: 1
          timeoutSeconds: 1
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /
            port: 80
            scheme: HTTP
          initialDelaySeconds: 30
          periodSeconds: 3
          successThreshold: 5
          timeoutSeconds: 1
        volumeMounts:
        - mountPath: /opt/spinnaker/config/default
          name: default-config
        - mountPath: /etc/nginx/conf.d
          name: custom-config
      imagePullSecrets:
      - name: harbor
      volumes:
      - configMap:
          defaultMode: 420
          name: custom-config
        name: custom-config
      - configMap:
          defaultMode: 420
          name: default-config
        name: default-config

[root@hdss7-200 nginx]#

[root@hdss7-200 nginx]# cat svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: armory-nginx
  namespace: armory
spec:
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 80
  - name: https
    port: 443
    protocol: TCP
    targetPort: 443
  - name: api
    port: 8085
    protocol: TCP
    targetPort: 8085
  selector:
    app: armory-nginx

[root@hdss7-200 nginx]# cat ingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  labels:
    app: spinnaker
    web: spinnaker.od.com
  name: armory-nginx
  namespace: armory
spec:
  rules:
  - host: spinnaker.od.com
    http:
      paths:
      - backend:
          serviceName: armory-nginx
          servicePort: 80

10.3. Apply the resource manifests

[root@hdss7-200 nginx]# kubectl apply -f ./dp.yaml

deployment.extensions/armory-nginx created

[root@hdss7-200 nginx]# kubectl apply -f ./svc.yaml

service/armory-nginx created

[root@hdss7-200 nginx]# kubectl apply -f ./ingress.yaml

ingress.extensions/armory-nginx created

10.4. Domain name resolution

[root@hdss7-11 ~]# tail -1 /var/named/od.com.zone

spinnaker A 10.4.7.10

[root@hdss7-11 ~]# systemctl restart named

[root@hdss7-11 ~]# dig -t A spinnaker.od.com +short

10.4.7.10

10.5. Browser access
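Before opening http://spinnaker.od.com in a browser, you can check from a host that uses the DNS server above that the name resolves and that the Ingress forwards requests to armory-nginx. A minimal sketch; the function name is hypothetical, and a 200 status is only expected once the armory-nginx Pod is Ready.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify DNS and the Ingress route for the Spinnaker UI.
check_spinnaker_ui() {
  local host="${1:-spinnaker.od.com}"
  # Should print the address from the zone file (10.4.7.10 in this environment).
  dig -t A "$host" +short
  # Print the HTTP status code returned through the Ingress.
  curl -s -o /dev/null -w '%{http_code}\n' "http://${host}/"
}

# Example:
# check_spinnaker_ui
```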

Chapter 5: Building images with Spinnaker and Jenkins (the CI process)

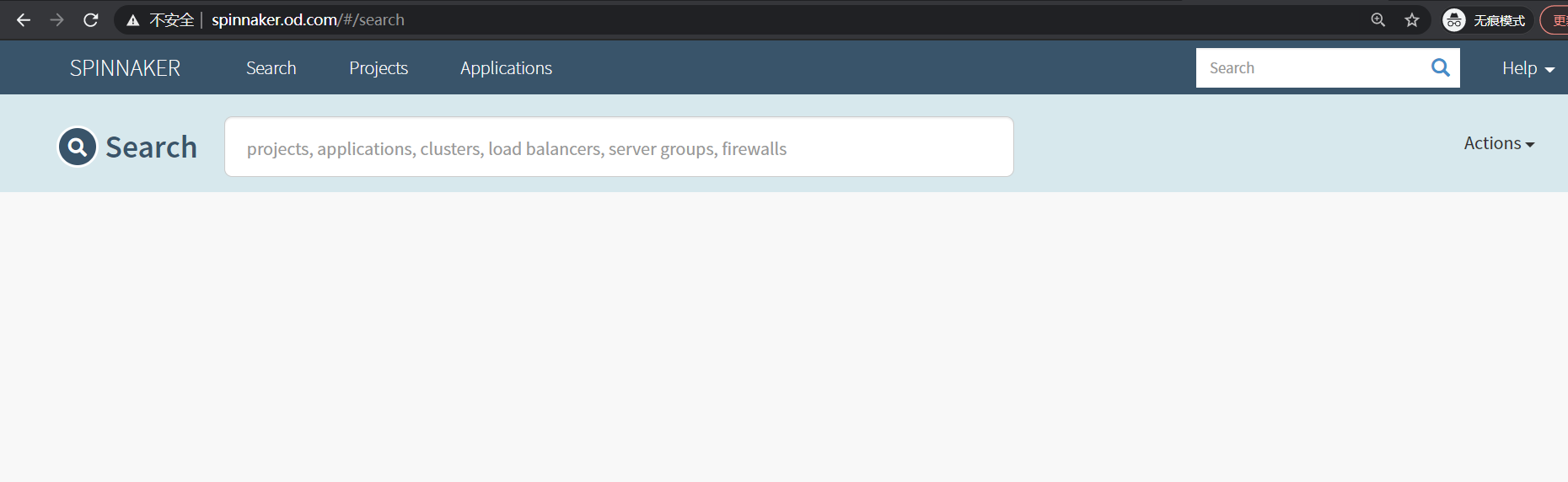

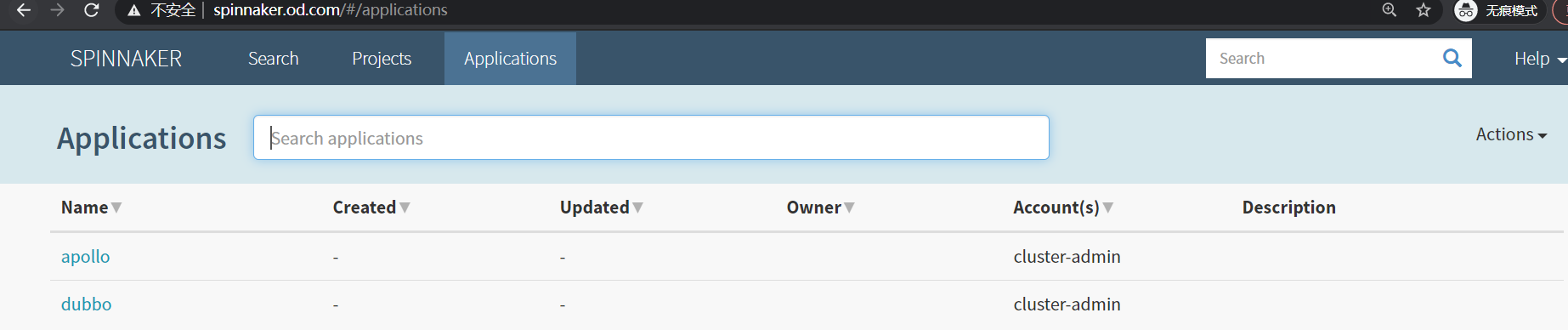

5.1. Preparing to use Spinnaker

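Spinnaker already shows the apollo and dubbo applications, because the custom-config.yaml of CloudDriver was configured earlier to manage the prod and test namespaces.

Remove the dubbo projects in the test and prod namespaces from the k8s cluster: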

[root@hdss7-200 ~]# cd /data/k8s-yaml/

[root@hdss7-200 k8s-yaml]# kubectl delete -f test/dubbo-demo-service/dp.yaml

deployment.extensions "dubbo-demo-service" deleted

[root@hdss7-200 k8s-yaml]# kubectl delete -f test/dubbo-demo-consumer/dp.yaml

deployment.extensions "dubbo-demo-consumer" deleted

[root@hdss7-200 k8s-yaml]# kubectl delete -f prod/dubbo-demo-service/dp.yaml

deployment.extensions "dubbo-demo-service" deleted

[root@hdss7-200 k8s-yaml]# kubectl delete -f prod/dubbo-demo-consumer/dp.yaml

deployment.extensions "dubbo-demo-consumer" deleted

Refresh the page and these applications no longer appear.

5.2. Create dubbo with Spinnaker

1. Create an application

Application -> Actions -> CreateApplication

- Name: test-dubbo
- Owner Email

Click Create.

Result:

2. Create a pipeline

test-dubbo->PIPELINES -> Configure a new pipeline

- Type: Pipeline
- Pipeline Name: dubbo-demo-service

Click Create.

3. Add four parameters (Parameters)

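What the tabs mean:

- Triggers: what starts the pipeline
- Parameters: options supplied to each run
- Artifacts: artifacts, specified manually here
- Notifications: notifications
- Description: a description of the pipeline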

Parameter 1

- Name: git_ver
- Required: checked

Parameter 2

- Name: add_tag
- Required: checked

Parameter 3

- Name: app_name
- Required: checked
- Default Value: dubbo-demo-service

Parameter 4

- Name: image_name
- Required: checked
- Default Value: app/dubbo-demo-service

Click Save Changes.

As shown in the figure:

4. Add a pipeline stage

Click Add stage and fill in:

- Type: Jenkins
- Master: jenkins-admin
- Job: dubbo-demo
- add_tag: ${ parameters.add_tag }
- app_name: ${ parameters.app_name }
- base_image: base/jre8:8u112_with_logs
- git_repo
- git_ver: ${ parameters.git_ver }
- image_name: ${ parameters.image_name }
- target_dir: ./dubbo-server/target

Click Save Changes.

As shown in the figure:

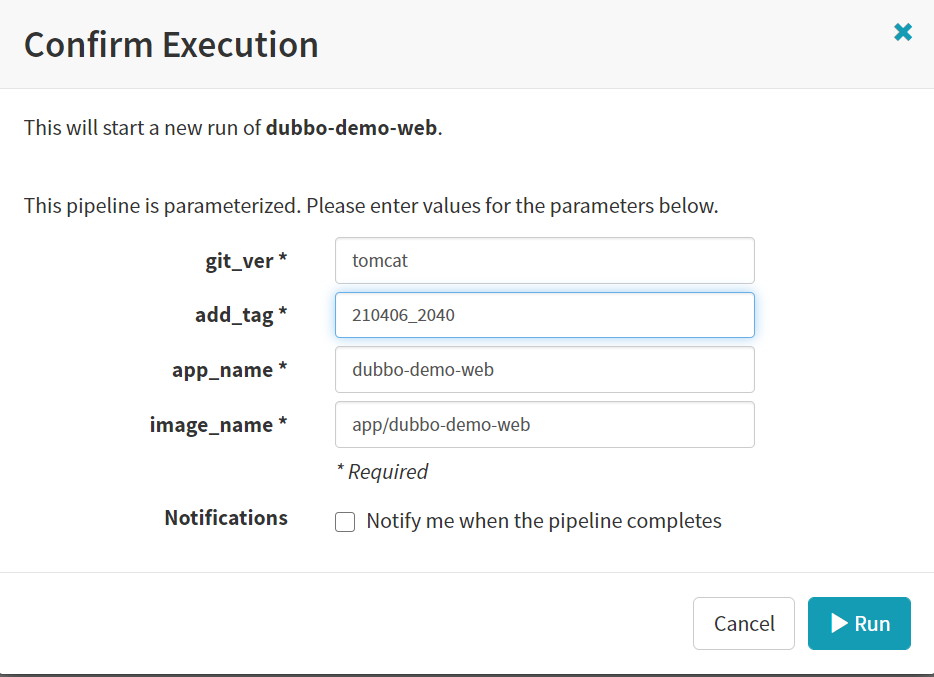

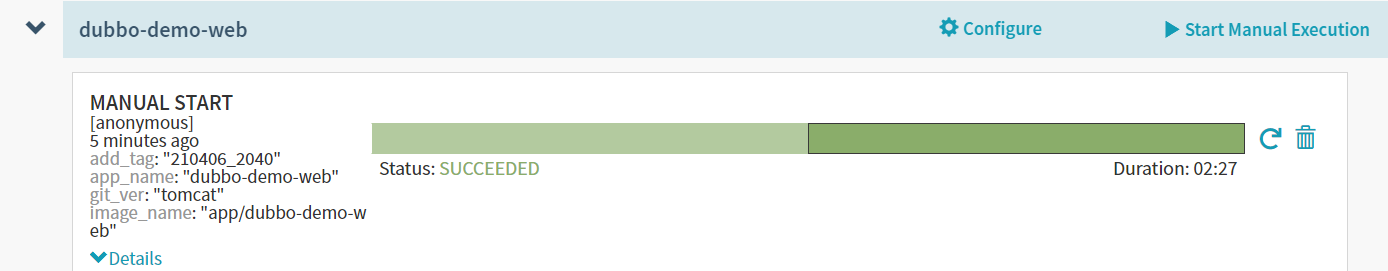

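5. Run the pipeline

Click Start Manual Execution and enter:

- git_ver: apollo
- add_tag: 210406_1111

Click RUN.

Result:

If the run fails: Spinnaker calls Jenkins cross-site, and Jenkins enables CSRF protection by default, so Jenkins answers with a 403 error.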

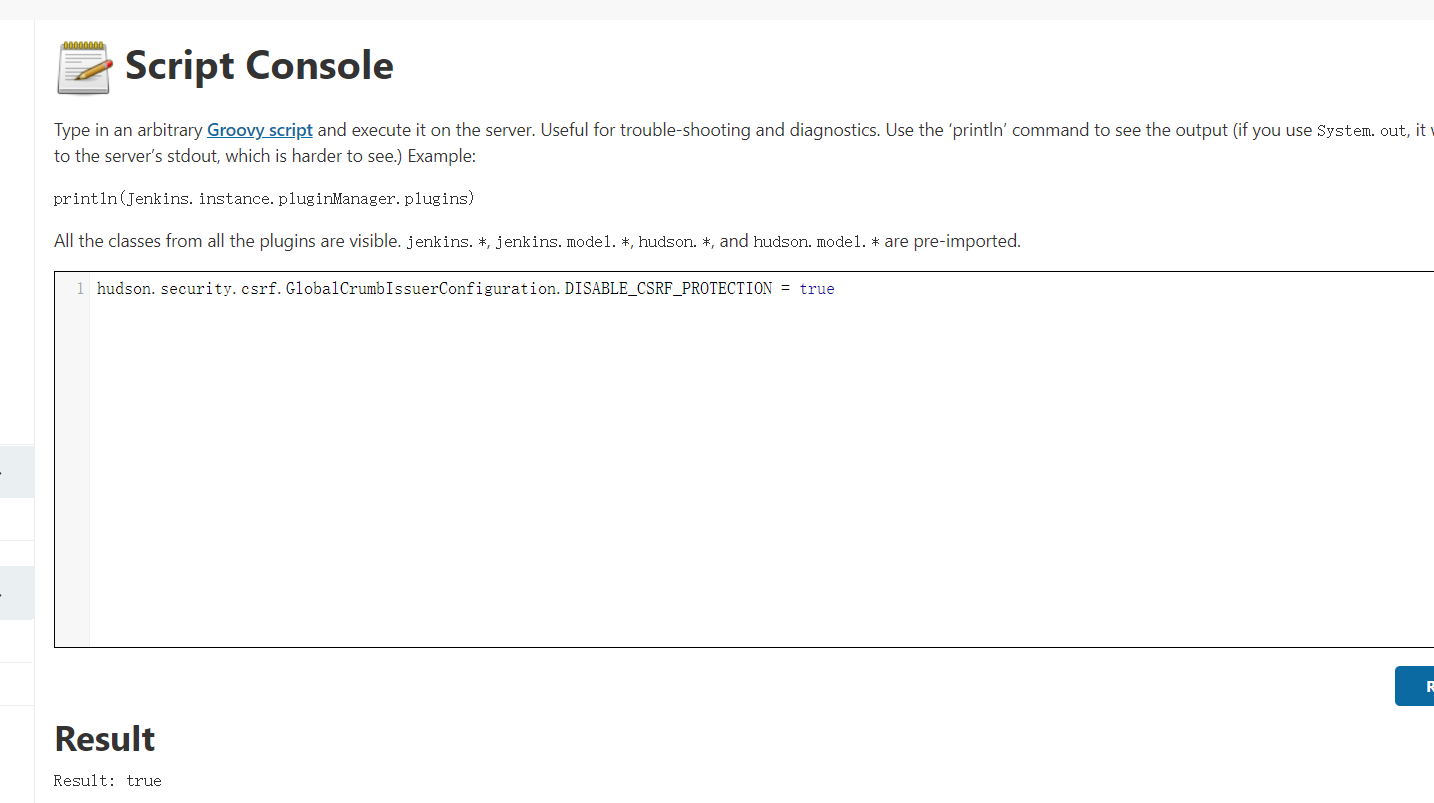

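Note: the cause is Jenkins's CSRF mechanism. The Jenkins version used here is recent enough that CSRF protection cannot be turned off by default, so it has to be disabled manually from the Jenkins script console.

The fix is to run the following code in the Jenkins script console:

// allow CSRF protection to be disabled
hudson.security.csrf.GlobalCrumbIssuerConfiguration.DISABLE_CSRF_PROTECTION = true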

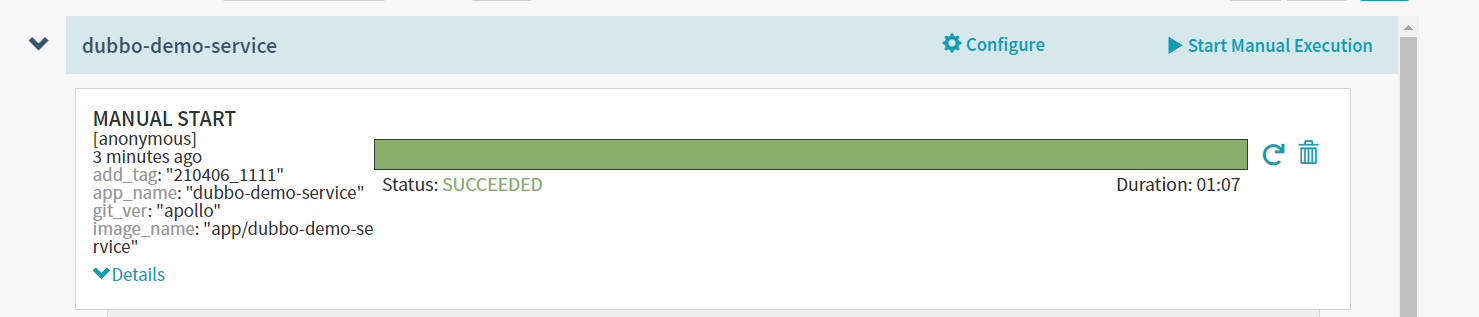
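To confirm whether CSRF protection is still active, you can query Jenkins's crumb endpoint, `/crumbIssuer/api/json`: it returns a crumb (HTTP 200) when protection is on and 404 when no crumb issuer is configured. An assignment made in the script console only changes the running JVM, so it is likely lost when Jenkins restarts; passing the same name as a `-D` JVM system property keeps it. A minimal sketch; the Jenkins URL `http://jenkins.od.com` and the user/API-token arguments are assumptions to adjust to your environment.

```shell
#!/usr/bin/env bash
# Assumed Jenkins address; override it with the JENKINS_URL environment variable.
JENKINS_URL="${JENKINS_URL:-http://jenkins.od.com}"

# Map the HTTP status of the crumb endpoint to a CSRF state.
csrf_state() {
  case "$1" in
    200) echo "enabled" ;;
    404) echo "disabled" ;;
    *)   echo "unknown (HTTP $1)" ;;
  esac
}

# Query the crumb endpoint with a user name and API token (hypothetical arguments).
check_jenkins_csrf() {
  local user="$1" token="$2" code
  code=$(curl -s -o /dev/null -w '%{http_code}' -u "${user}:${token}" "${JENKINS_URL}/crumbIssuer/api/json")
  csrf_state "$code"
}

# Example:
# check_jenkins_csrf admin <api-token>
```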

- Re-run the pipeline

If there are no further problems, Jenkins now starts the build automatically.

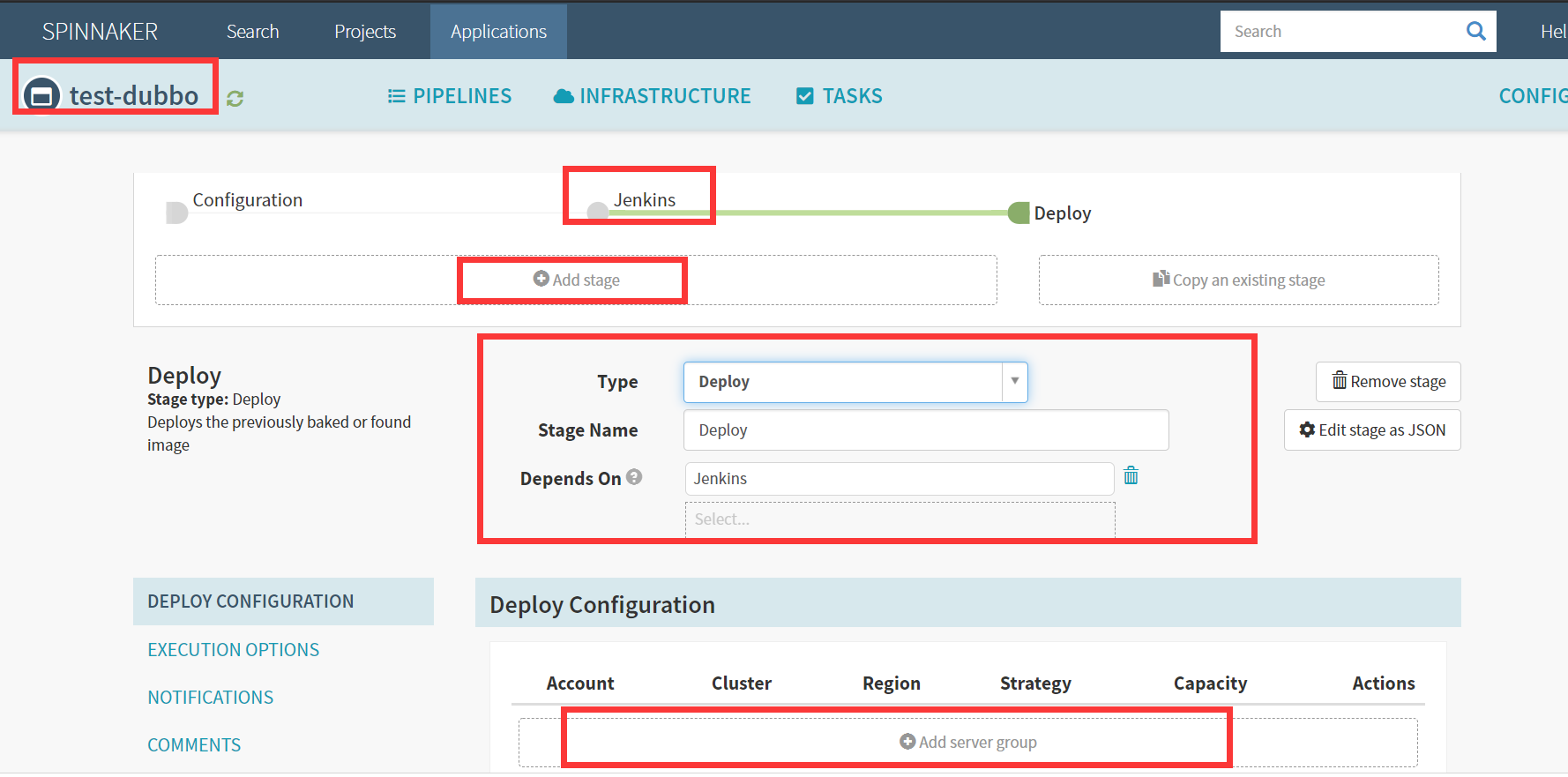

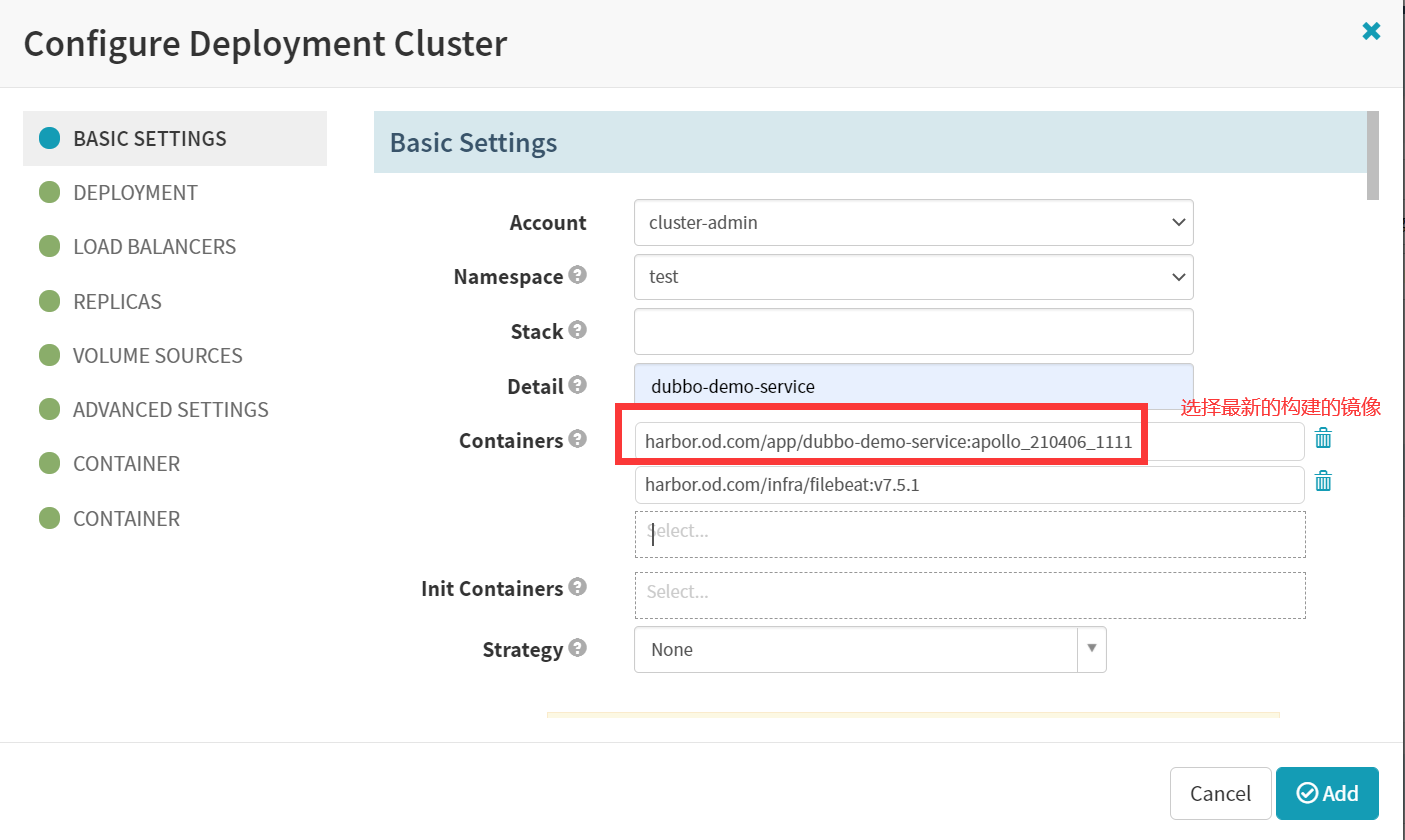

Chapter 6: Configuring the dubbo service provider project with Spinnaker in practice (the CD process)

Application (test-dubbo) -> PIPELINES -> Configure -> Add stage

Basic Settings

- Type: Deploy
- Stage Name: Deploy

Add server group

- Account: cluster-admin
- Namespace: test
- Detail (project name): dubbo-demo-service
- Containers:
  - harbor.od.com/app/dubbo-demo-service:apollo_210406_1111
  - harbor.od.com/infra/filebeat:v7.5.1
- Strategy: None

As shown in the figure:

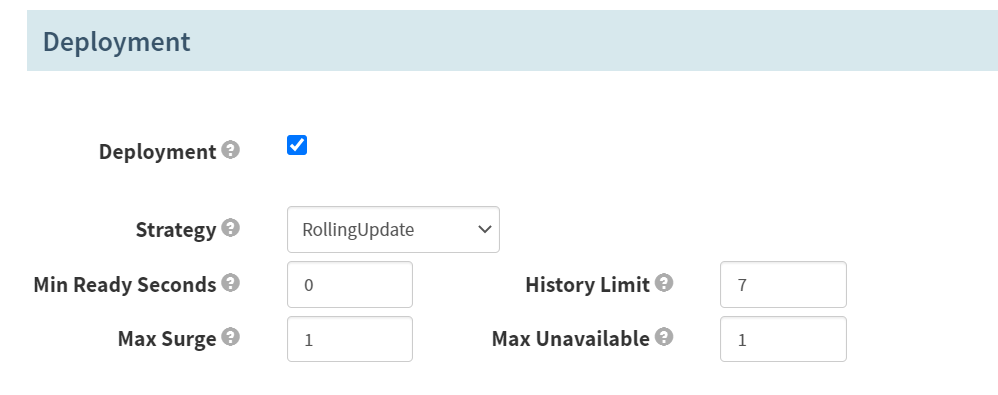

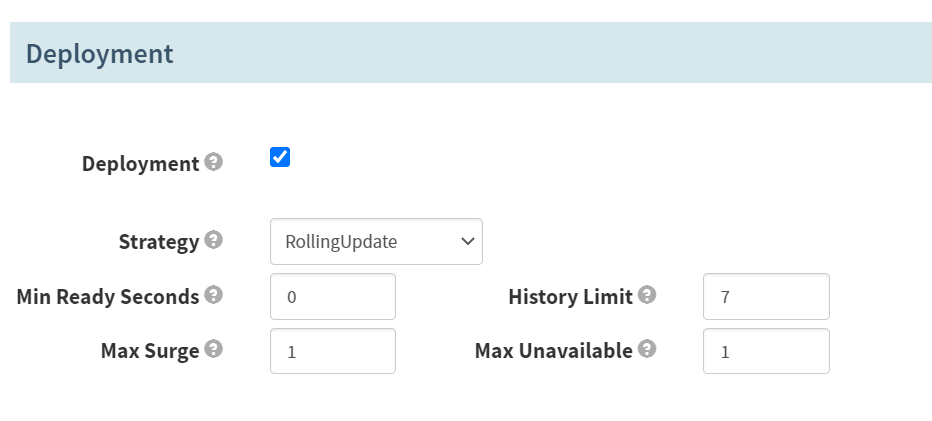

Deployment

- Deployment: checked
- Strategy: RollingUpdate
- History Limit: 7

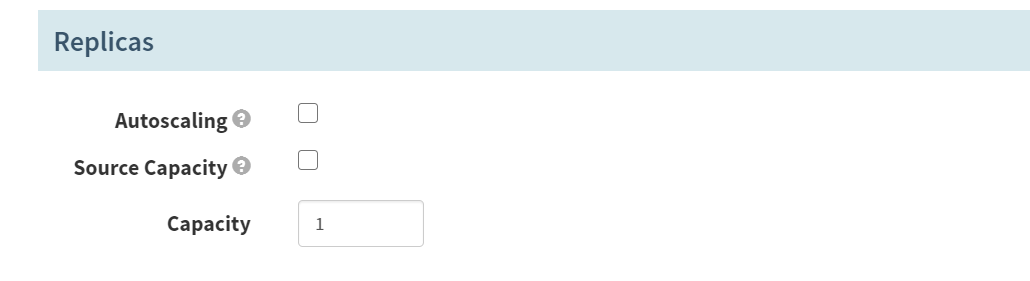

Replicas

- Capacity: 1 (one replica by default)

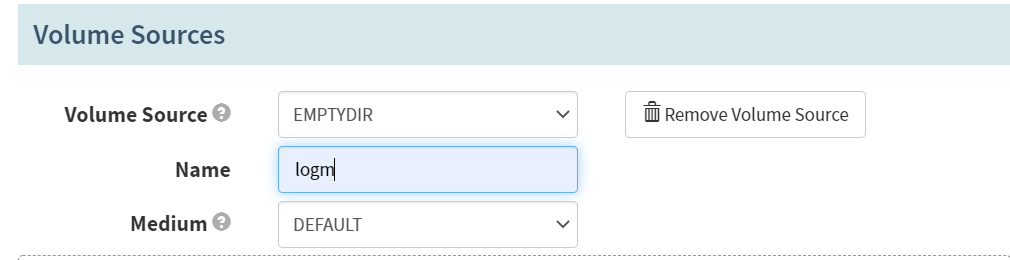

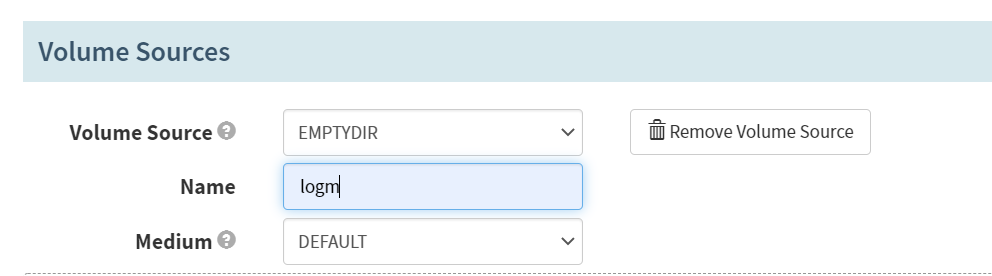

Volume Sources -> Add Volume Source

- Volume Source: EMPTYDIR
- Name: logm

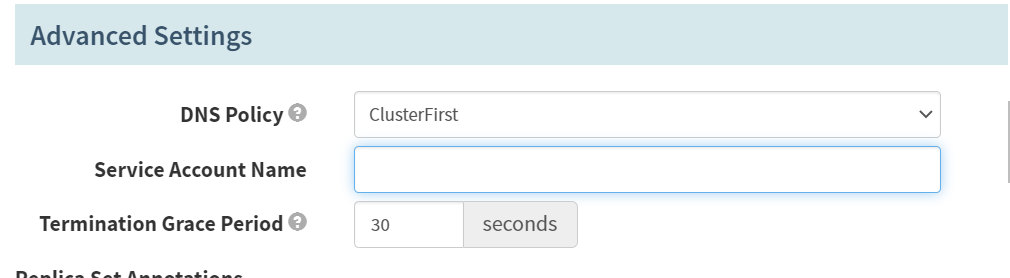

Advanced Settings

- DNS Policy: ClusterFirst
- Termination Grace Period: 30

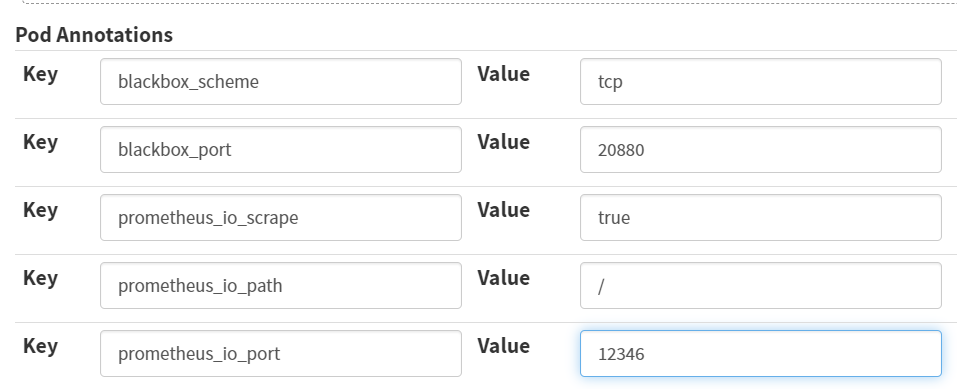

Pod Annotations

- Key: blackbox_scheme, Value: tcp
- Key: blackbox_port, Value: 20880
- Key: prometheus_io_scrape, Value: true
- Key: prometheus_io_path, Value: /
- Key: prometheus_io_port, Value: 12346

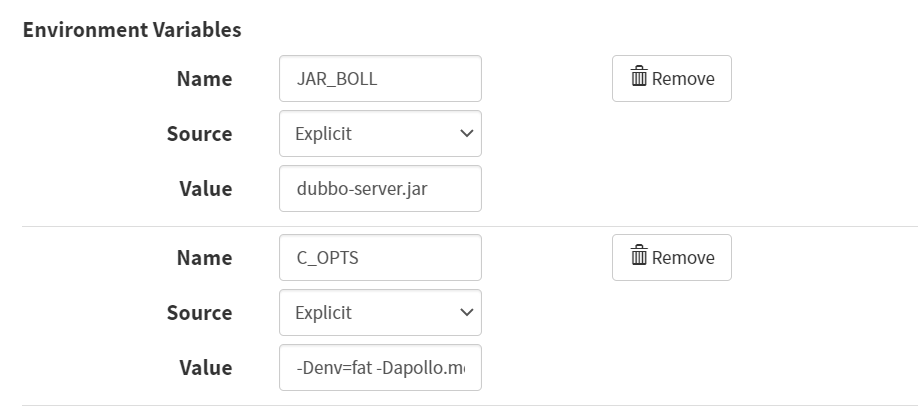

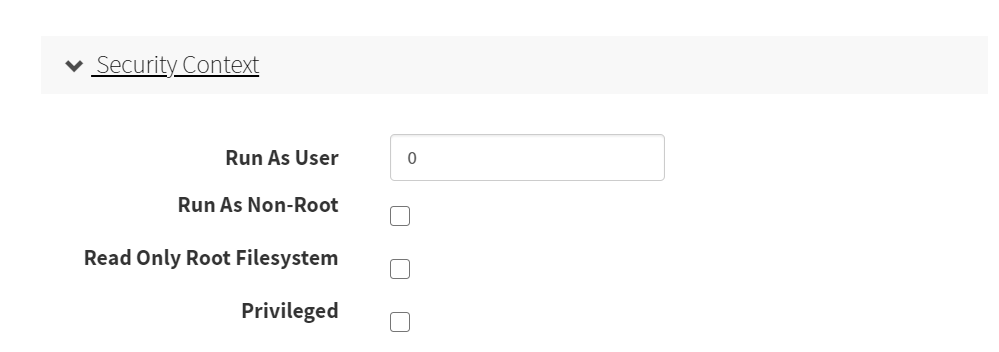

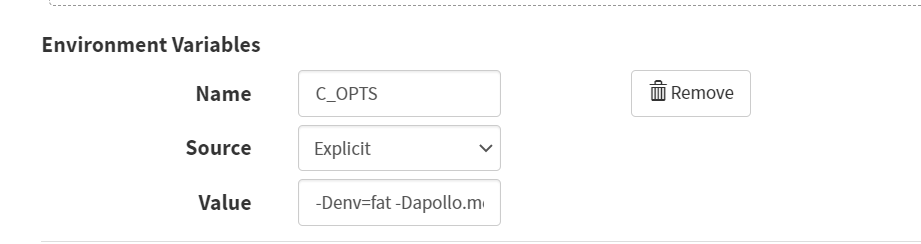

Configure the first container

Container -> Basic Settings -> Environment Variables -> Add Environment Variables

- Name: JAR_BALL, Source: Explicit, Value: dubbo-server.jar
- Name: C_OPTS, Source: Explicit, Value: -Denv=fat -Dapollo.meta=http://config-test.od.com

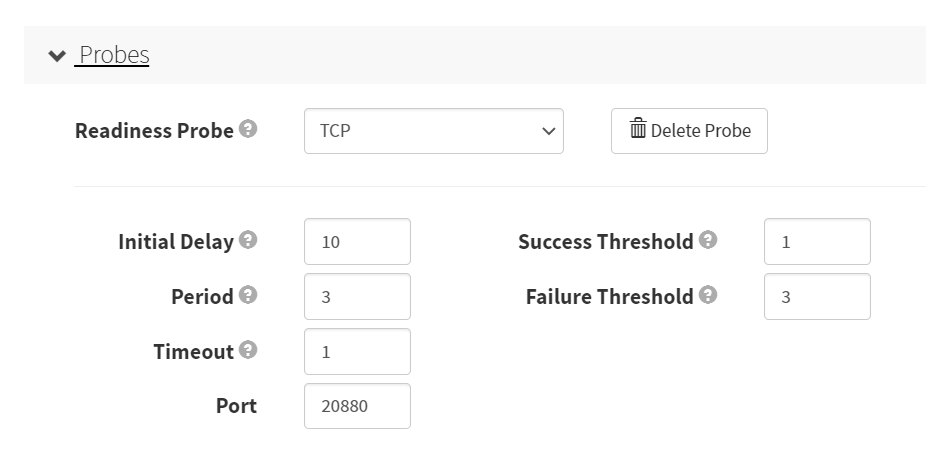

- Probes
  - Liveness probe
  - Readiness probe

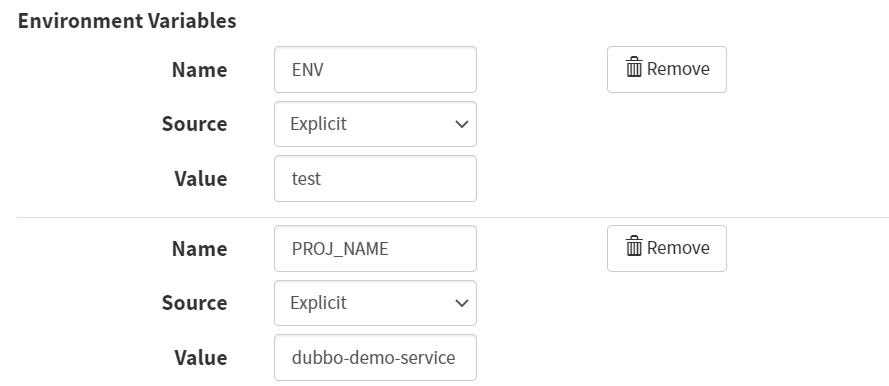

Configure the second container

Container -> Basic Settings -> Environment Variables -> Add Environment Variables

- Name: ENV, Source: Explicit, Value: test
- Name: PROJ_NAME, Source: Explicit, Value: dubbo-demo-service

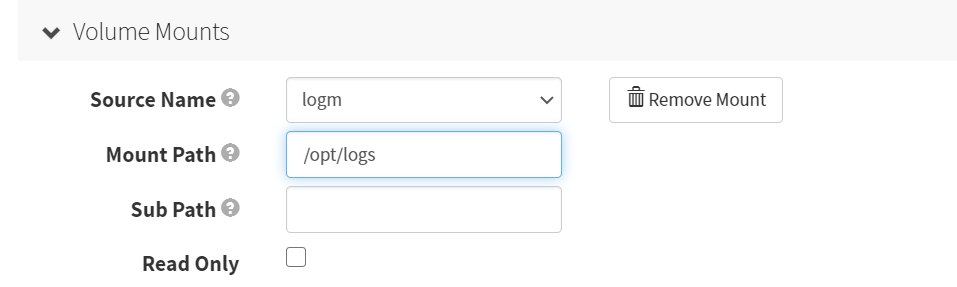

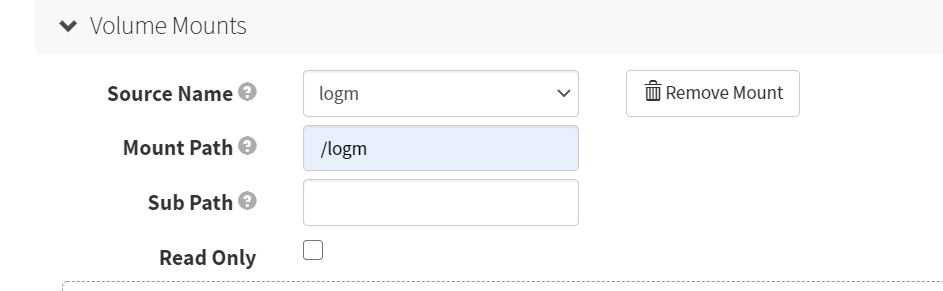

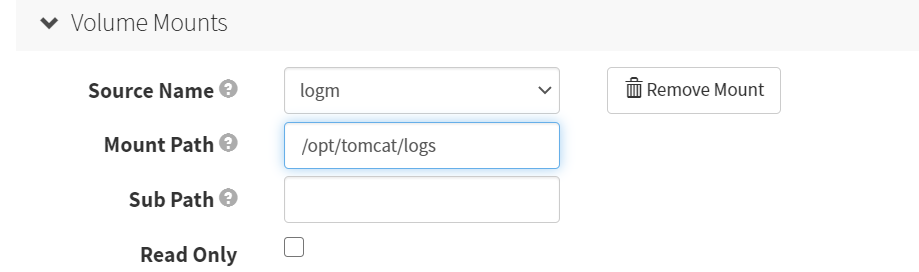

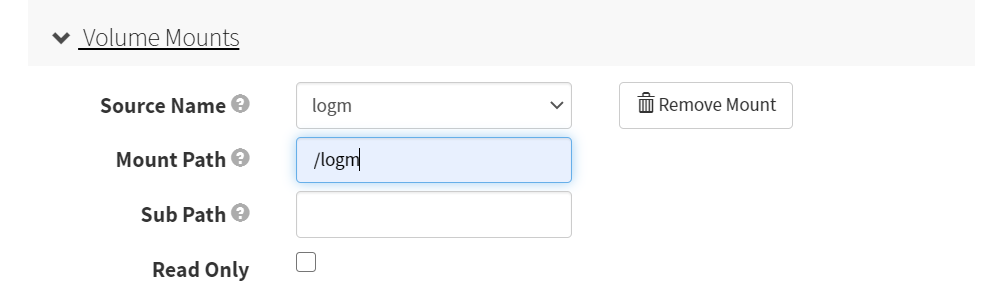

VolumeMounts

- Source Name: logm
- Mount Path: /logm

Click Add, then Save Changes.

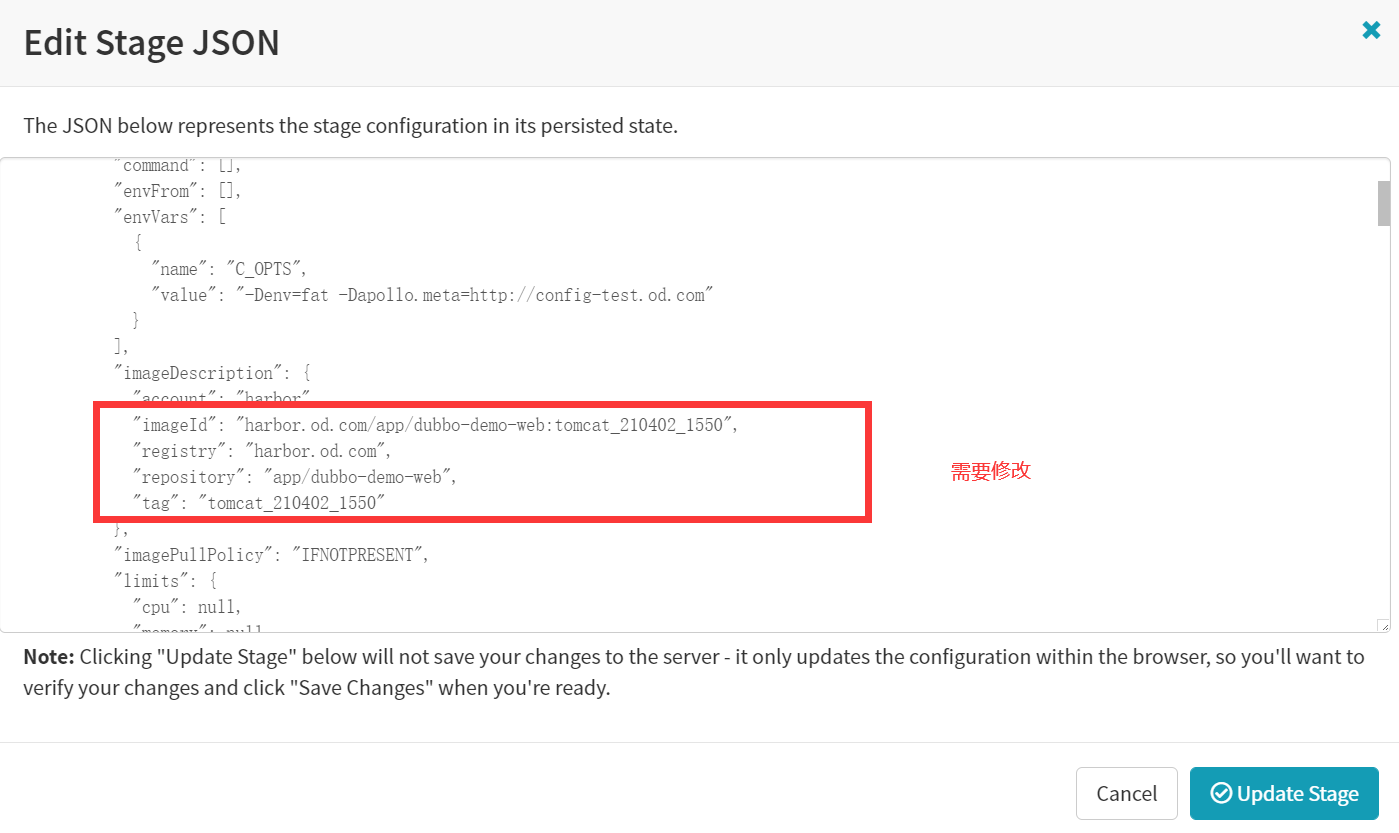

Edit the stage JSON so that the image is built from the pipeline parameters instead of being hard-coded:

"imageId": "harbor.od.com/${parameters.image_name}:${parameters.git_ver}_${parameters.add_tag}",
"registry": "harbor.od.com",
"repository": "${parameters.image_name}",
"tag": "${parameters.git_ver}_${parameters.add_tag}"

- Save Changes

You can now release.
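The parameterized fields build the image reference from three pipeline parameters. The shell sketch below reproduces the same string so you can check a tag before starting a run; `image_ref` is a hypothetical helper, and `harbor.od.com` is the registry used throughout this document.

```shell
#!/usr/bin/env bash
# Hypothetical helper mirroring the pipeline expression
#   harbor.od.com/${parameters.image_name}:${parameters.git_ver}_${parameters.add_tag}
image_ref() {
  local image_name="$1" git_ver="$2" add_tag="$3"
  printf 'harbor.od.com/%s:%s_%s\n' "$image_name" "$git_ver" "$add_tag"
}

# Values from the manual run in Chapter 5:
image_ref app/dubbo-demo-service apollo 210406_1111
# prints: harbor.od.com/app/dubbo-demo-service:apollo_210406_1111
```

The result matches the image that was entered by hand in the Containers field, so switching the JSON to parameters does not change what a run with these values deploys.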

- Check the release

deployKubernetesAtomicOperationDescription.application.invalid (Must match ^[a-z0-9]+$)

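Note: this error means the application name violates Spinnaker's naming rule (only lowercase letters and digits are allowed, so the hyphen in test-dubbo is rejected). Rename the application to test0dubbo.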
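The pattern in the error message, `^[a-z0-9]+$`, accepts only lowercase letters and digits. A hypothetical pre-check like the one below catches an invalid application name before you create it; it simply applies the same regular expression with bash's `=~` operator.

```shell
#!/usr/bin/env bash
# Hypothetical pre-check using the pattern from the error message.
valid_app_name() {
  [[ "$1" =~ ^[a-z0-9]+$ ]]
}

for name in test-dubbo test0dubbo; do
  if valid_app_name "$name"; then
    echo "$name: ok"
  else
    echo "$name: invalid"
  fi
done
# test-dubbo: invalid
# test0dubbo: ok
```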

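Chapter 7: Configuring the dubbo service consumer project with Spinnaker in practice

1. Use the test0dubbo application

2. Create a pipeline

PIPELINES -> Create

- Type: Pipeline
- Pipeline Name: dubbo-demo-web (note: the name must match the GitLab project name)
- Copy From: None

As shown in the figure:

3. Add four parameters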

Parameter 1

- Name: git_ver
- Required: checked

Parameter 2

- Name: add_tag
- Required: checked

Parameter 3

- Name: app_name
- Required: checked
- Default Value: dubbo-demo-web

Parameter 4

- Name: image_name
- Required: checked
- Default Value: app/dubbo-demo-web

As shown in the figure:

4. Add a pipeline stage (the CI process)

Click Add stage and fill in:

- Type: Jenkins
- Master: jenkins-admin
- Job: tomcat-demo
- add_tag: ${ parameters.add_tag }
- app_name: ${ parameters.app_name }
- base_image: base/tomcat:v8.5.50
- git_repo: git@gitee.com:yodo1/dubbo-demo-web.git
- git_ver: ${ parameters.git_ver }
- image_name: ${ parameters.image_name }
- target_dir: ./dubbo-client/target

Click Save Changes.

As shown in the figure:

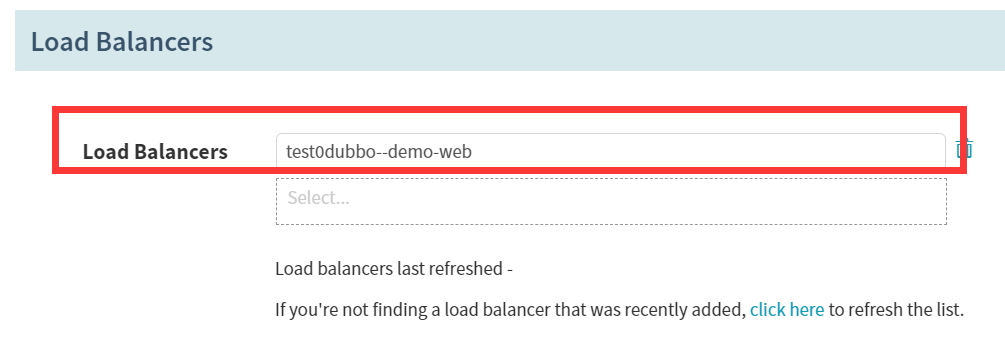

5. Configure the Service

Application -> INFRASTRUCTURE -> LOAD BALANCERS -> Create Load Balancer

Basic Settings

- Account: cluster-admin
- Namespace: test
- Detail: demo-web

Ports

- Name: http
- Port: 80
- Target Port: 8080

Click Create.

As shown in the figure:

6. Create the Ingress

Application -> INFRASTRUCTURE -> FIREWALLS -> Create Firewall

Basic Settings

- Account: cluster-admin
- Namespace: test
- Detail: dubbo-web

Rules -> Add New Rule

- Host: demo-test.od.com

Add New Path

- Load Balancer: test0dubbo-web
- Path: /
- Port: 80

Click Create.

As shown in the figure:

7. Deploy

Application -> PIPELINES -> Configure -> Add stage

Basic Settings

- Type: Deploy
- Stage Name: Deploy

Add server group

- Account: cluster-admin
- Namespace: test
- Detail (project name): dubbo-dubbo-web
- Containers:
  - harbor.od.com/app/dubbo-demo-web:tomcat_200114_1613
  - harbor.od.com/infra/filebeat:v7.5.1
- Strategy: None

Result:

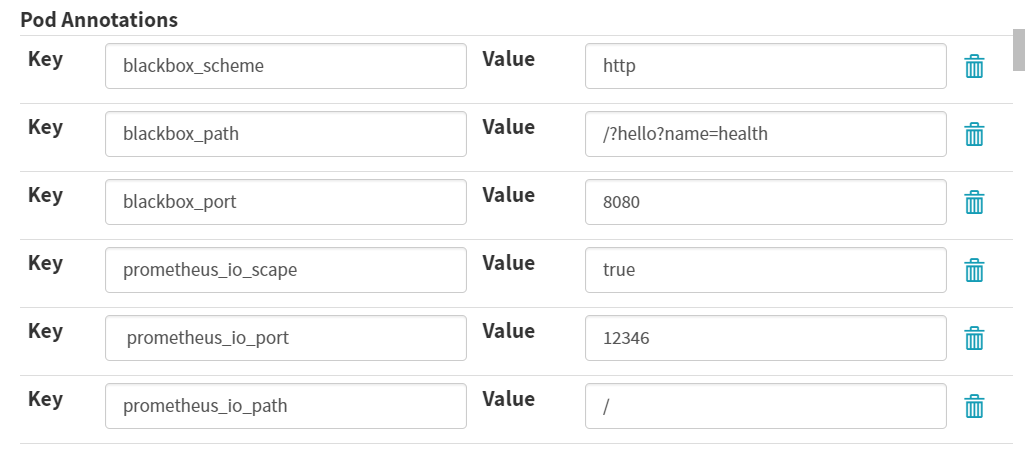

- Pod Annotations
  - Key: blackbox_scheme, Value: http
  - Key: blackbox_path, Value: /hello?name=health
  - Key: blackbox_port, Value: 8080
  - Key: prometheus_io_scrape, Value: true
  - Key: prometheus_io_port, Value: 12346
  - Key: prometheus_io_path, Value: /

As shown in the figure:

Configure the first container

Container -> Basic Settings -> Environment Variables -> Add Environment Variables

- Name: C_OPTS, Source: Explicit, Value: -Denv=fat -Dapollo.meta=http://config-test.od.com

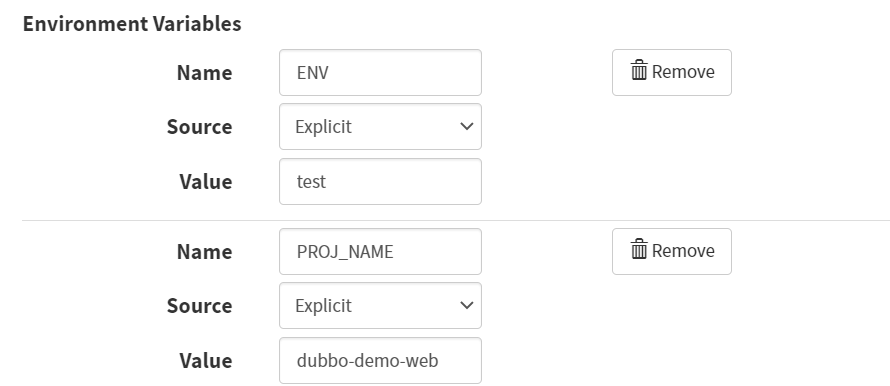

Configure the second container

- Environment Variables
  - Name: ENV, Value: test
  - Name: PROJ_NAME, Value: dubbo-demo-web

As shown in the figure:

Edit the template (the stage JSON)

Change it to:

"imageId": "harbor.od.com/${parameters.image_name}:${parameters.git_ver}_${parameters.add_tag}",
"registry": "harbor.od.com",
"repository": "${parameters.image_name}",
"tag": "${parameters.git_ver}_${parameters.add_tag}"

- Save Changes

You can now release.
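After the release, you can exercise the whole path (DNS, Ingress, Service, Pod) from outside the cluster. A minimal sketch, assuming demo-test.od.com already resolves to the ingress address and that dubbo-demo-web answers on `/hello?name=...`, the path the blackbox annotation probes; `demo_web_url` and `check_demo_web` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Build the URL of the (assumed) hello endpoint behind the Ingress.
demo_web_url() {
  local host="${1:-demo-test.od.com}" name="${2:-health}"
  printf 'http://%s/hello?name=%s\n' "$host" "$name"
}

# Print the HTTP status code; the URL is quoted so the shell does not treat '?' as a glob.
check_demo_web() {
  curl -s -o /dev/null -w '%{http_code}\n' "$(demo_web_url "$@")"
}

# Example:
# check_demo_web demo-test.od.com health
```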

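Chapter 8: Releasing to production

Production does not rebuild the image: the image built for the test environment is released to production as-is.

1. Create the production application: prod0dubbo

2. Add four parameters (Parameters), taking dubbo-demo-service as the example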

Parameter 1

- Name: git_ver
- Required: checked

Parameter 2

- Name: add_tag
- Required: checked

Parameter 3

- Name: app_name
- Required: checked
- Default Value: dubbo-demo-service

Parameter 4

- Name: image_name
- Required: checked
- Default Value: app/dubbo-demo-service

Click Save Changes.

3. Follow the CD process from Chapter 6

[Chapter 6]